Integral imaging is a three-dimensional imaging technology that can provide full parallax and continuous viewing points [1]. More notably, integral imaging provides naturally colored images without the use of any special glasses [2]. Since integral imaging was first proposed in 1908, it has drawn a great deal of attention from researchers [3-7]. To implement three-dimensional imaging and display, integral imaging systems require multiple perspectives on three-dimensional objects. These recorded perspective images are called elemental images, each of which plays an important role in integral imaging.

Real-world scenes contain complex arrangements of objects with multiple occlusions, and occlusions are present in all but the most constrained environments. In the digital image processing field, researchers often treat the occluded region as a missing region and use image restoration methods to restore it [8-15]. The most representative of these is the exemplar-based image restoration algorithm in [8]; however, this method does not consider depth information. To address this depth problem, a pixel restoration method was proposed for a computational integral imaging system [16]. In this method, some of the invisible pixels of the occluded target region in one elemental image are restored from the corresponding visible pixels in other elemental images, which is possible because each elemental image records a different perspective. However, if the foreground object is located very close to the occluded target, a large part of the target object's information is lost in all of the elemental images, and it is difficult to reconstruct the occluded target region from such limited information. The performance of this pixel restoration method therefore depends on the distance between the occluded target object and the foreground object.

In this paper, we propose an image restoration method to overcome the limitation imposed by the distance between the occluded target object and the foreground objects. In the proposed method, a minimum spanning tree (MST) is used to estimate the occluded target region of each elemental image, and these occluded target regions are marked based upon the estimated depth maps. The proposed pixel restoration method is then used to fill in the region left behind after occlusion removal. Our method combines the exemplar-based image restoration algorithm with the pixel restoration scheme to enhance the visual quality of three-dimensional integral imaging reconstruction for partially occluded objects. In Section II, the traditional image restoration methods are reviewed. The proposed method is presented in Section III. In Section IV, we report several experiments that confirm the feasibility of our method. Finally, we conclude this paper in Section V.

II. REVIEW OF PIXEL RESTORATION SCHEMES

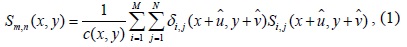

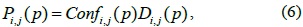

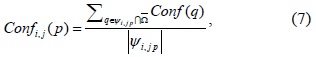

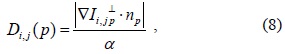

In previous work, several methods have been suggested to solve the occlusion problem [16-18]. Most approaches have attempted to develop specific image processing algorithms based on statistical or contour analysis to alleviate the occlusion problem. Besides these image processing algorithms, another method was proposed in which the occluded target region was directly removed for visibility-enhanced reconstruction [17]. However, in this approach, the vacant target area left by occlusion removal may degrade the visual quality of the reconstructed target image. To overcome this problem, Piao et al. [16] proposed an effective approach for visibility-enhanced reconstruction of a partially occluded three-dimensional scene by using the pixel restoration method in a computational integral imaging system. This scheme can be expressed as follows:

where,

As is described in Section I, the pixel restoration scheme depends on the distance between the occluded target region and the foreground objects. By analyzing whether the distance

Where

In synthetic aperture integral imaging, every elemental image represents a slightly different viewpoint on a three-dimensional scene. Thus, a number of pixels of the occluded region that are invisible in an elemental image recorded from one viewpoint may be visible in other elemental images because of their viewpoint differences. As the above description shows, a pixel restoration scheme can restore a partially occluded region, but it fails for the region where the distance between the occluded target region and the foreground objects is less than Δ


A. MST-Based Stereo Matching

In previous work, a depth estimation method using MST-based stereo matching in integral imaging was proposed to detect occlusions; it is suitable for both simple and complex three-dimensional scenes [19].

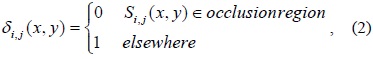

Zhong et al. [19] present a non-local stereo matching method that can produce an accurate disparity map between two elemental images. According to the principle of measurement by triangulation, the disparity map can be transformed into a depth map, and the depth map can then be used to detect the occlusions. In [19], the reference elemental image

where

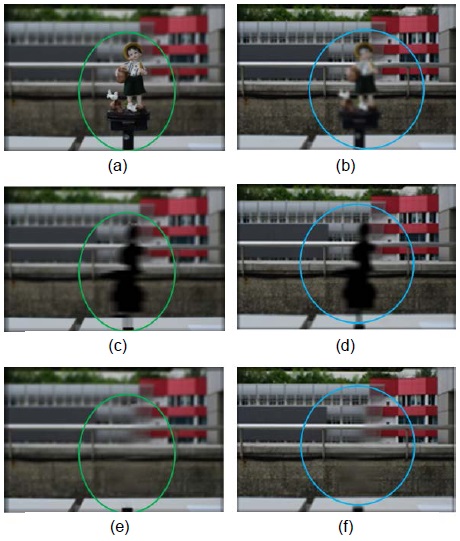

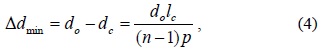

Fig. 1(a) and (b) show the input elemental images used to extract the disparity map, and Fig. 1(c) shows the disparity map generated by non-local aggregation with non-local refinement. Fig. 1(d) shows the occlusion detection result for the first elemental image, in which the detected occlusion is marked in green.
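The conversion from a disparity map to a depth map and then to an occlusion mask can be illustrated with a minimal sketch. It assumes the standard pinhole triangulation relation z = f·B / d, with the disparity d converted from pixels to millimeters, and it marks as occlusion every pixel that lies nearer than a chosen depth threshold. The function names, the parameters, and the simple thresholding rule are illustrative assumptions, not the procedure of [19].

```python
import numpy as np


def disparity_to_depth(disparity_px, focal_length_mm, baseline_mm, pixel_size_mm):
    """Convert a disparity map (pixels) to a depth map (mm) by triangulation.

    Assumes z = f * B / d, where d is the disparity expressed in mm on the
    sensor. Pixels with zero disparity are mapped to infinite depth.
    """
    d_mm = np.asarray(disparity_px, dtype=float) * pixel_size_mm
    depth = np.full(d_mm.shape, np.inf)
    valid = d_mm > 0
    depth[valid] = focal_length_mm * baseline_mm / d_mm[valid]
    return depth


def occlusion_mask(depth_mm, max_foreground_depth_mm):
    """Mark pixels nearer than the threshold as the occluding foreground.

    The fixed threshold is a hypothetical simplification for illustration.
    """
    return depth_mm < max_foreground_depth_mm


# Illustrative values only; they are not taken from the experiment.
disparity = np.array([[0.0, 4.0, 40.0]])
depth = disparity_to_depth(disparity, focal_length_mm=10.0,
                           baseline_mm=2.0, pixel_size_mm=0.5)
mask = occlusion_mask(depth, max_foreground_depth_mm=5.0)
```

Larger disparities correspond to nearer objects, so in this sketch the foreground occluder is the region with the largest disparity values.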

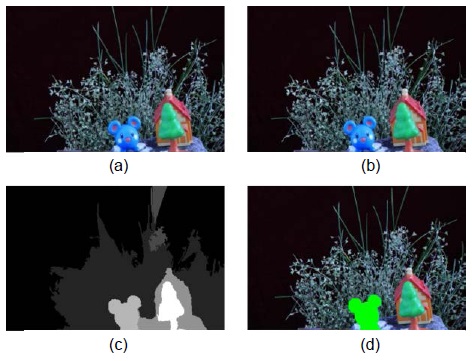

As shown in Fig. 2, five elemental images are selected as the source region. As shown in Fig. 2(a), we find the best patch from the source region to restore the missing region, which is enclosed by a solid red rectangle. The principle for selecting the elemental images is defined in Eq. (12).

1) Computing Patch Priorities

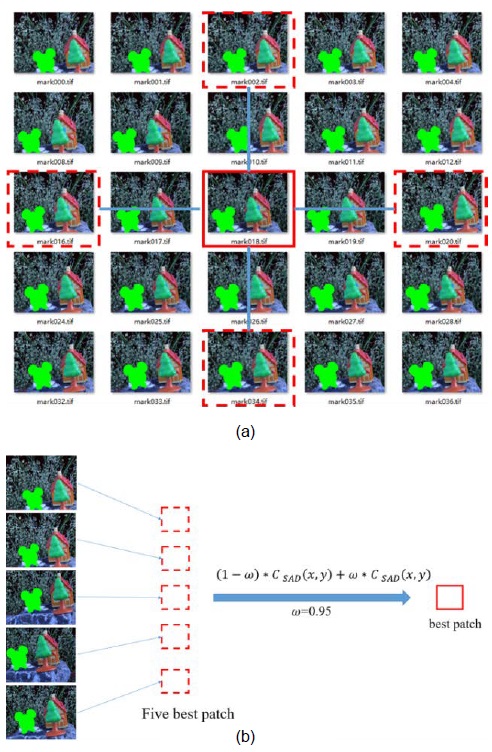

The filling order is crucial to non-parametric texture synthesis, and designing a fill order that explicitly encourages propagation of linear structure together with texture should produce a better image restoration [8]. Criminisi’s work performs this task through a best-first filling algorithm that depends entirely on the priority values that are assigned to each patch on the fill border. As shown in Fig. 3, we define the priorities for each point to determine the filling order.
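As a rough illustration of how such priorities can be computed, the sketch below follows the standard formulation of Criminisi's algorithm [8]: the priority of a point on the fill border is the product of a confidence term (the fraction of reliable pixels in the patch centered on the point) and a data term (the strength of the isophote hitting the border at that point). The function names, the 4-neighbor definition of the fill border, and the normalization constant `alpha` are assumptions made for this example; the exact expressions used in this paper may differ.

```python
import numpy as np


def fill_front(missing):
    """Return the missing pixels that have at least one known 4-neighbor."""
    known = ~missing
    padded = np.pad(known, 1, constant_values=False)
    has_known_neighbor = (padded[:-2, 1:-1] | padded[2:, 1:-1] |
                          padded[1:-1, :-2] | padded[1:-1, 2:])
    return missing & has_known_neighbor


def confidence_term(confidence, point, half):
    """Mean confidence over the patch centered on `point`.

    Missing pixels carry confidence 0, so the sum only counts known pixels.
    The patch is clipped at the image boundary.
    """
    r, c = point
    h, w = confidence.shape
    patch = confidence[max(r - half, 0):min(r + half + 1, h),
                       max(c - half, 0):min(c + half + 1, w)]
    return patch.sum() / patch.size


def data_term(isophote, normal, alpha=255.0):
    """|isophote . normal| / alpha, as in the standard formulation of [8]."""
    return abs(float(np.dot(isophote, normal))) / alpha


# Toy example: the bottom-right 3x3 block of a 5x5 image is missing.
missing = np.zeros((5, 5), dtype=bool)
missing[2:, 2:] = True
confidence = (~missing).astype(float)   # 1 for known pixels, 0 for missing

front = fill_front(missing)
c_p = confidence_term(confidence, (2, 2), half=1)
priority = c_p * data_term(np.array([0.0, 255.0]), np.array([0.0, 1.0]))
```

The patch with the highest priority on the fill border is the one filled first.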

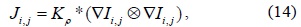

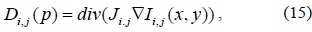

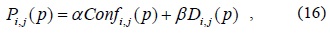

For each point

where the subscript

We call

where, |

2) Propagating Texture and Structure

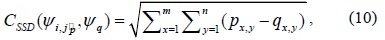

Once all priorities on the fill border have been computed, the patch with highest priority is found. We use Eq. (9) to find the most similar patch to fill the patch

where

In step 1, we find the five best patches {

where

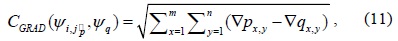

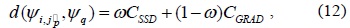

In step 2, we find the most similar patch from the five best patches by combining the

where

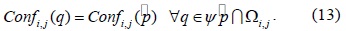

3) Updating Confidence Values

After the patch has been filled with new pixel values, the confidence

These steps are repeated until the whole region is filled. The result is an image from which the occlusion has been removed, as shown in Fig. 4.
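One iteration of this fill loop can be sketched as follows. The example ranks candidate source patches by the sum of squared differences (SSD) over the already-known pixels of the target patch, keeps the best few, copies the missing pixels from a chosen candidate, and assigns the patch confidence to the newly filled pixels, as in the standard algorithm of [8]. The SSD criterion and all function names are assumptions for illustration. The second selection step of the proposed method, which picks one patch among the five best, is not reproduced here.

```python
import numpy as np


def masked_ssd(target, candidate, known):
    """Sum of squared differences restricted to the known target pixels."""
    diff = (target - candidate)[known]
    return float(np.sum(diff ** 2))


def best_k_patches(target, known, candidates, k=5):
    """Indices and scores of the k candidates with the lowest masked SSD."""
    scores = [masked_ssd(target, cand, known) for cand in candidates]
    order = np.argsort(scores, kind="stable")[:k]
    return [int(i) for i in order], [scores[i] for i in order]


def fill_patch(target, known, source, confidence_patch, c_p):
    """Copy the missing pixels from `source` and update their confidence.

    Known pixels keep their value and confidence; newly filled pixels
    receive the confidence c_p of the patch being filled.
    """
    filled = np.where(known, target, source)
    new_confidence = np.where(known, confidence_patch, c_p)
    return filled, new_confidence


# Toy 2x2 patch with one missing pixel (bottom right).
target = np.array([[1.0, 2.0], [3.0, 0.0]])
known = np.array([[True, True], [True, False]])
candidates = [np.array([[1.0, 2.0], [3.0, 9.0]]),
              np.array([[0.0, 2.0], [3.0, 5.0]]),
              np.array([[1.0, 2.0], [5.0, 7.0]])]

indices, scores = best_k_patches(target, known, candidates, k=2)
filled, conf = fill_patch(target, known, candidates[indices[0]],
                          np.array([[1.0, 1.0], [1.0, 0.0]]), c_p=0.75)
```

In the setting described above, the candidate list would be drawn from the source regions of the selected elemental images.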

4) Filling Order Control Using a Structure Tensor

The exemplar-based image restoration algorithm performs well in filling large missing regions. However, discontinuous structures can be problematic, and these discontinuous structures will influence patch priorities.

In view of this problem, we use the structure tensor to recompute the data term in Eq. (8) [20]. The structure tensor, also referred to as the second-moment matrix, summarizes the predominant directions of the gradient and the degree to which the gradient is aligned along those directions. The structure tensors

where

Eq. (8) can be replaced as follows:

where

We also modify the priority

where

In this section, image restoration and three-dimensional image reconstruction in a synthetic aperture integral imaging system are implemented. The distance between the building and the pickup devices is approximately 20 m, and a toy human figure is located approximately 1 m from the pickup devices. The camera has an image sensor array of 2400×1600 pixels, and each pixel is 8.2 μm in size. To improve the operation speed, every elemental image is resized to 480×320 pixels. The moving step of the camera is Δ

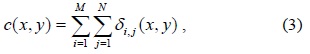

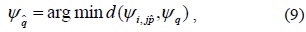

As shown in Fig. 5, the occluded regions are marked by using MST-based stereo matching, and the image restoration algorithms are then applied to the marked regions. The result of Criminisi's algorithm is shown in Fig. 5(a); some errors occurred, which are marked by green circles. In our experiment, such errors exist in almost every restored elemental image, and they reduce the visual quality of the reconstructed images. Fig. 5(b) shows the result of the method proposed in [16]. It can be seen that the occluded regions are only partially restored. This is because the distance between the occluded target region and the foreground objects is less than Δ

Fig. 6 illustrates two sets of images reconstructed with the computational integral imaging reconstruction technique at distances of 500 mm and 760 mm. Fig. 6(a) and (d) show the images reconstructed at

The comparisons in Fig. 6(a)–(f) show that the visual quality of the reconstructed images is improved by using the proposed restoration method. Fig. 6(f) shows the reconstructed image, in which the restored region is marked with a green circle. Even though blurring occurs in a small region of the reconstructed image because of missing depth information, the proposed method outperforms the one in [16] in terms of visual quality. We are continuing to develop extensions of the proposed method to address the issue of the missing depth information.

In this paper, we proposed an exemplar-based image restoration method to solve the occlusion problem in synthetic aperture integral imaging. The proposed method overcomes the limitation imposed by the distance between the target and the occluding object. Experimental results confirm the feasibility of the restoration method when applied to three-dimensional image reconstruction. However, some depth information is lost when our method is used. In future work, we intend to extend the proposed method to address the issue of missing depth information.

![Restored elemental images using (a) algorithm in [8], (b) algorithm in [16]. (c) The proposed method. (d) The proposed method with a structure tensor.](http://oak.go.kr/repository/journal/20473/E1ICAW_2016_v14n1_57_f005.jpg)