Involuntary hand tremor is a serious challenge in micromanipulation tasks and has therefore drawn considerable attention from related fields. To minimize the effect of hand tremor, a variety of mechanically assistive solutions have been proposed. However, approaches that increase users’ awareness of their own hand tremor have not been extensively studied. In this paper, we propose a head-mounted display-based virtual reality (VR) system that increases self-awareness of hand tremor. The system shows the user a virtual image of a handheld device with emphasized hand tremor information. We hypothesize that subjects provided with this emphasized tremor information will control their hand tremor more effectively. Two methods of emphasizing hand tremor information are demonstrated, (1) direct amplification of tremor and (2) magnification of the virtual object, and compared with a control condition without emphasized tremor information. A human-subject study with twelve trials per condition was conducted with four healthy participants, who performed a task of holding a handheld gripper device in a specific direction. The results showed that the proposed methods achieved a reduced level of hand tremor compared with the control condition.

Micromanipulation technology plays a significant role in precision manufacturing, mainly by assisting workers in manipulating micro-machines and biological cells and in performing microsurgery, using precise control techniques such as feedback sensing and motion control [1, 2]. Several micromanipulation technologies use tiny glass tools connected to electric motor-driven robot arms, handheld devices, and microelectromechanical systems [3-5]. In handheld micromanipulation, performance is limited by the physiological capacity of the user. For example, physiological hand tremor with a frequency of 6-12 Hz and an amplitude of 100 μm [6] hinders accurate micromanipulation. To address this challenge, a variety of handheld systems have been proposed and developed. For example, Micron [7], a handheld microsurgical instrument for six-degree-of-freedom (6-DOF) tremor cancellation, incorporates a miniaturized 6-DOF manipulator. It reduces hand tremor by up to 89.3%; however, its motion is limited by the optical tracking system. In addition, a force-sensing micro-gripper integrated with a handheld micromanipulator has been designed to perform accurate membrane peeling in vitreoretinal surgery [8, 9]. However, it can compensate for only a certain level of hand tremor within a limited workspace. Another active handheld tremor compensation instrument, ITrem, uses an accelerometer as the motion sensor and piezoelectric motors as the actuators [10]. In a gripping test, it showed a root mean square error of 44.7 μm in hand tremor, which is not as small as that of other handheld manipulators [7-9]. SMART microsurgical instruments with active tremor cancellation technology have also been implemented [11-14]. SMART systems use fiber-based common-path optical coherence tomography as a distal sensor, which can precisely measure and compensate for axial hand tremor within a height of 3 mm. In summary, these handheld devices offer ease of handling, low weight and volume, and increased mobility. Beyond hardware, a software-based multi-modal system for tremor analysis has also been reported [15].

Virtual reality (VR) technology has recently attracted attention in many industrial and biomedical fields. It has been applied to robotic micromanipulation [16], military skill training for soldiers [17], therapy for phobia and post-traumatic stress disorder [18], motor skill training for athletes [19], and surgical skill improvement [20]. The potential of VR has been explored specifically for training purposes: for teleoperators of micro-robotic cell injection [21], for assembly procedures for microelectromechanical system prototypes in which a supervisor guides the task remotely [22], and in simulators that teach a subject how to perform an industrial assembly task through realistic scenarios and virtual environments [23]. These applications enable the subject to gain valuable experience in tasks that demand accuracy. Subjects learn or improve skills and techniques without harming themselves or damaging real tools, and communication skills can also be taught through a range of scenarios with virtual subjects [24].

Vision is one of the main sources of information and plays an important role in VR [25, 26]. VR can both provide real-time virtual images and simulate virtual challenges by adjusting the difficulty levels represented in the virtual world [24, 27]. Visual inputs and spatial models are known to have the potential to improve subjects’ manipulation performance by managing their level of awareness of objects and other individuals in a VR environment [28]. Simulation software such as DIVE (Distributed Interactive Virtual Environment) [29] provides a virtual experimental environment for multi-user VR applications. It offers a distributed and flexible platform in which the awareness of users can be manipulated during the simulation. VR environments are also enjoyable and give subjects a better sense of self-control throughout the task [30]. Through self-controlled long-term learning, VR could help subjects overcome their phobias [31]. Regarding visual feedback, which is central to VR, manipulation skills can be learned by varying visual feedback with virtual fixtures [32]. Inspired by these findings, we envision that subjects could gain better control over their hand tremor if they train themselves in a VR environment. Vision-based control of a handheld manipulator has already been demonstrated [33]. In that work, the performance of the handheld manipulator Micron was evaluated by tracking virtual fixtures; however, the approach is only available for tracking the instrument. Moreover, haptics-based VR systems have been presented as surgical training simulators [34, 35]. These simulators allow subjects to train themselves in virtual surgical tasks such as bone dissection, through immediate force feedback provided by the system. Nevertheless, the simulators only present the surgical procedures to the trainees without any visual modification, aiming for adjustment in the procedure itself.

In this study, we present a VR-based micromanipulation training system that focuses on enhancing a subject’s self-awareness of hand tremor. The system employs a handheld gripper equipped with a motion sensor. A virtual image of the gripper is displayed in virtual space, and one of two alternative methods is used to emphasize hand tremor information: (1) tremor amplification, in which the user sees an image of the gripper with the hand tremor doubled compared to the original, and (2) size magnification, in which the user sees a gripper image of doubled size, to better show tremor information. We implemented and tested the two visualization methods and compared them to a control condition without visual modification. Twelve trials per condition were conducted with four healthy subjects, who were asked to minimize their hand tremor while holding the gripper in a certain direction. The results indicate that the two visual modifications could reduce hand tremor compared with the control condition.

The literature on sensorimotor systems and control shows that self-awareness of body movement plays a significant role in motor control performance. In addition, a recent study [36] shows that size manipulation of a virtual object can improve short-term motor skill acquisition. For micromanipulation tasks, we assumed that suppressing hand tremor is the first consideration for successful handheld micromanipulation. We aim to help a subject develop better self-awareness of hand tremor by modifying visual stimuli. Here, we present two methods of visual modification in VR:

Modification 1: Tremor amplification. The VR system shows a virtual object of the same size as the real object, with the hand tremor amplified to 200% (i.e., doubled). We hypothesize that the amplified hand tremor visualization will encourage a subject to develop better self-control, thereby reducing the tremor.

Modification 2: Object size magnification. The VR system shows a magnified virtual object at 200% scale (i.e., doubled in size). We hypothesize that the magnified view will show hand tremor more effectively, thereby encouraging the subject to develop self-control of hand tremor.
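
The two modifications can be illustrated with a minimal Python sketch. It is not the authors’ Unity3D implementation. It assumes that orientation is given as (yaw, pitch, roll) in degrees, that amplification is applied per angle to the deviation from a reference orientation, and that magnification is a uniform scale factor; the function names and sample values are hypothetical.

```python
def wrap_deg(angle):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def amplify_orientation(measured, reference, gain=2.0):
    """Modification 1 (sketch): scale the angular deviation from the reference.

    Assumption: the gain is applied independently to yaw, pitch, and roll.
    """
    return tuple(
        wrap_deg(ref + gain * wrap_deg(m - ref))
        for m, ref in zip(measured, reference)
    )


def magnify_scale(base_scale, factor=2.0):
    """Modification 2 (sketch): uniformly scale the virtual object."""
    return tuple(factor * s for s in base_scale)


# Hypothetical sample: reference yaw of 90 degrees, small measured deviation.
reference = (90.0, 0.0, 0.0)
measured = (91.5, -0.5, 0.25)
displayed = amplify_orientation(measured, reference)
print(displayed)                        # deviation from the reference is doubled
print(magnify_scale((1.0, 1.0, 1.0)))   # object shown at 200% scale
```

With a gain of 1.0 the displayed orientation equals the measured one, which corresponds to showing the gripper without visual modification.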

In this research, we focus on the angular elements of hand tremor. A schematic diagram of the entire system, including hardware and software, is shown in Fig. 1. The hardware consisted of a gripper with an attached nine-degree-of-freedom (9-DOF) motion sensor, a workstation computer equipped with a GeForce GTX1080 graphics processing unit for VR rendering, and an Oculus Rift CV1 VR headset. An LSM9DS0 motion sensor with a digital interface (i.e., I2C/SPI) was used to track acceleration, rotation, and the magnetic field. The motion sensor was connected to an Arduino Due microcontroller board, to which it sent motion data via I2C communication. We programmed the Arduino board to calculate the real-time angular orientation, represented by yaw, pitch, and roll angles. The angular orientation data were sent to the workstation via RS232 protocol over USB.
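
The text does not state which algorithm the microcontroller used to compute orientation. As one common building block, the following Python sketch estimates roll and pitch from a static accelerometer reading using the direction of gravity. The axis convention and the function name are assumptions, and yaw cannot be recovered this way; it would additionally require the magnetometer or gyroscope.

```python
import math


def tilt_from_accel(ax, ay, az):
    """Estimate (roll, pitch) in degrees from a static accelerometer reading.

    Assumptions: the sensor is at rest so the reading is dominated by gravity,
    and the z axis reads positive when the sensor lies flat.
    """
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return roll, pitch


# Hypothetical reading in units of g: sensor lying flat and level.
print(tilt_from_accel(0.0, 0.0, 1.0))
```

In practice, accelerometer tilt is usually fused with gyroscope data, because hand motion adds non-gravitational acceleration to the reading.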

The software was developed in Unity3D using the C# language. It receives real-time orientation data from the motion sensor attached to the gripper and uses them to generate stereo three-dimensional (3D) images of a virtual gripper that reflects the real-time orientation changes; the images are then displayed in the Oculus Rift CV1 headset. The 3D model was designed using Autodesk Tinkercad, a web-based 3D modeling tool. Along with the virtual gripper, a semi-transparent green mesh image of the gripper was displayed to show the subject the target orientation, which served as the reference orientation for the experiment. The system setup and sample stereo VR images are shown in Figs. 2 and 3, respectively.

To test the hypotheses and further explore the potential of VR for micromanipulation, we conducted an experiment with the proposed system. We recruited four male subjects, aged 23 to 27, from a university campus. All were right-handed, with normal vision and no reported cognitive deficits or neurological disorders.

We defined a gripper holding task, in which a subject was asked to match the orientation of a virtual gripper to a given reference orientation. We set the reference orientation as the one perpendicular to the ground, represented by a green mesh image. In addition, the following three conditions were defined for the task, to compare hand tremor control performance:

1. Control condition (C). This condition shows an image of the virtual gripper with identical size, shape, and orientation to the real gripper.

2. Manipulated condition 1 (M1). This condition applies the proposed tremor amplification method. The orientation deviation of the virtual gripper is amplified to 200%, so that a subject sees their hand tremor doubled.

3. Manipulated condition 2 (M2). This condition applies the proposed object size magnification method. The virtual gripper is magnified to 200% of the real object size.

We set the following hypotheses:

H1. The proposed tremor amplification method (M1) will decrease hand tremor in comparison with the control condition.

H2. The proposed object size magnification method (M2) will reduce hand tremor in comparison with the control condition.

H3. The hand tremor will be decreased as subjects perform more trials (i.e., training effect).

During the tasks, the subjects were instructed to put their elbow and lower arm on a table, which is a typical posture in microsurgical scenarios. For each trial, the subjects were requested to hold the gripper at the reference angle shown on the VR screens (i.e., green mesh image) for 20 s. The reference target image was in the same position and orientation throughout the tasks (yaw = 90°).

A within-subjects design was used. The independent variable was the manipulation condition (C, M1, M2). Each subject performed three blocks of three trials. In each block, the three conditions were presented in a pre-shuffled order, resulting in nine trials per subject. We randomized the order of the conditions across subjects to minimize the training effect. The dependent variable was the average angle of deviation, in degrees, calculated from the rotation angle data.
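
The exact formula for the average angle of deviation is not given in the text. A minimal Python sketch of one plausible definition follows: the mean, over all samples in a trial, of the Euclidean magnitude of the per-axis angular error relative to the reference orientation. The function name and the sample values are hypothetical, and the sketch assumes deviations small enough that angle wrap-around can be ignored.

```python
import math


def mean_angular_deviation(samples, reference):
    """Mean magnitude (degrees) of the angular error over one trial.

    Assumption: each sample and the reference are tuples of angles in degrees
    (e.g., two rotation axes), and the errors are small.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    total = 0.0
    for sample in samples:
        total += math.sqrt(sum((s - r) ** 2 for s, r in zip(sample, reference)))
    return total / len(samples)


# Hypothetical two-axis samples around a reference of (90, 0) degrees.
trial = [(93.0, 4.0), (90.0, 0.0), (90.0, -1.0)]
print(mean_angular_deviation(trial, (90.0, 0.0)))
```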

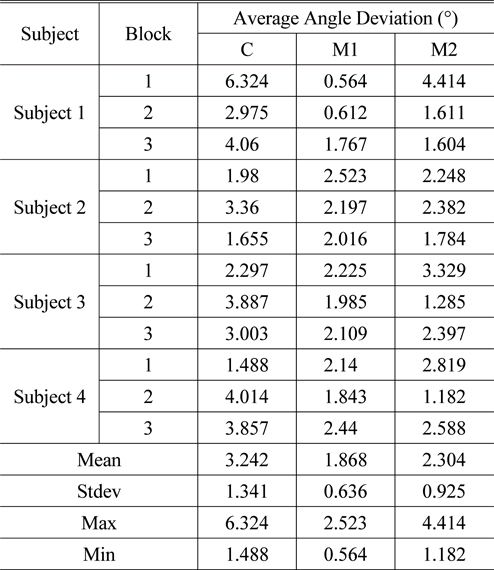

The calculated average angles of deviation from all trials are shown in Table 1. We analyzed the data using a two-way ANOVA with factors of condition and block, to check both the visual manipulation and learning effects, followed by post-hoc paired t-tests.

[TABLE 1.] Hand tremor control performance

Table 1 shows that both manipulations achieved a lower average angle of deviation, that is, reduced hand tremor, than the control condition. The main effect of condition was statistically significant (F(2, 33) = 5.80, p < 0.01); however, the block effect was not significant, so H3 was not supported. The post-hoc paired t-tests showed a significant difference in angular deviation between the C (mean = 3.242, SD = 1.341) and M1 (mean = 1.868, SD = 0.636) conditions, t(11) = 2.678, p = 0.01, as well as between the C and M2 (mean = 2.304, SD = 0.925) conditions, t(11) = 2.318, p < 0.05. H1 and H2 were therefore supported. Despite the difference in means between the M1 and M2 conditions, the post-hoc test showed no significant difference between them, t(11) = −1.233, p = 0.122.
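
For readers unfamiliar with the post-hoc test, the paired t statistic can be sketched in Python as follows. The data in the example are made up for illustration and are not the study’s measurements; the function name is hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Return (t statistic, degrees of freedom) for paired samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Illustrative values only (not from Table 1).
control = [3.0, 4.0, 5.0, 6.0]
manipulated = [1.0, 3.0, 3.0, 5.0]
t_value, dof = paired_t(control, manipulated)
print(t_value, dof)
```

With twelve paired trials per comparison, the degrees of freedom are 11, which matches the t(11) values reported above.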

Figure 4 shows a box plot of the average angle of deviation for the three conditions. The vertical (i.e., Y) axis in Fig. 4 shows the average angular deviation in degrees. Consistent with the statistical analysis, M1 and M2 show reduced angular deviation compared with the control condition. In addition, M1 shows smaller inter-trial and inter-personal differences in angular deviation than the other two conditions, which motivates further experiments on whether the tremor amplification (M1) method is significantly more effective in regulating hand tremor while minimizing individual differences.

Figure 5 shows the detailed plots of the angular deviation trajectory of a subject under the three conditions. The (0, 0) point denotes the reference target orientation. The plots show the major trend of hand tremor, as well as how effectively the proposed modification techniques reduced the tremor. Interestingly, the major component of hand tremor was linear, and this may originate from the pronation-supination movement of the forearm, that is, the rotational movement along its longitudinal axis, involving two joints which are mechanically linked through the elbow joint [37].

Compared with related studies addressing the challenge of hand tremor in micromanipulation tasks, the approach proposed in this paper highlights self-awareness as a key component. Our experiment with the gripper holding task showed that the increase in self-awareness gained using VR technology could help users reduce the angular element of hand tremor by 42% (from 3.242 to 1.868) and 29% (from 3.242 to 2.304), on average, using tremor amplification (M1) and object magnification (M2), respectively, without the use of active tremor compensation technology such as Micron [7] and SMART [11]. With careful consideration, the proposed approach could be integrated with these technologies. For example, we envision an integrated VR-based micromanipulation task environment that uses the object magnification (M2) approach to help users control their hand tremor, alongside active tremor compensation technologies.
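
The reported reductions follow directly from the condition means. A short Python check, using only the mean values stated in the text (the function name is hypothetical):

```python
def percent_reduction(control, manipulated):
    """Relative reduction of `manipulated` with respect to `control`, in percent."""
    return 100.0 * (control - manipulated) / control


mean_c, mean_m1, mean_m2 = 3.242, 1.868, 2.304  # condition means from the text

print(round(percent_reduction(mean_c, mean_m1)))  # 42
print(round(percent_reduction(mean_c, mean_m2)))  # 29
```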

It is worth noting that the tremor amplification (M1) approach exaggerates users’ hand tremor, so the handheld object (e.g., gripper or forceps) may appear at a different relative position from that in real physical space, despite the greater potential of this approach for hand tremor reduction compared with object magnification (M2). To make this method effective in practical micromanipulation tasks, we propose two ways to harness the tremor amplification approach. First, tremor amplification can be used in pre-task training sessions, which can help users understand their hand tremor pattern prior to actual micromanipulation tasks. The users will then be able to adjust their posture or pay particular attention to regulating their hand tremor. Second, the amplified tremor may be presented as assistive information rather than applied directly to the object image in the VR environment.

The simulated tasks in this paper were conducted while our system measured rotational elements and provided modified stimuli in a VR environment (i.e., object magnification and tremor amplification) to help users better control their hand tremor. To cover more practical cases such as tremor disease analysis and assistance, our future work will include approaches for the translational elements of tremor. Additionally, future studies could include other types of tasks, such as studying the behavior of a subject’s tremor in a subsequent real-world application and comparing it with performance in the virtual world. Likewise, a new design for emphasizing translational tremor elements is required to apply our system to other fields such as brain disorders, rehabilitation, and aiming training in military applications.

VR, human arm posture, and the brain are the key factors in controlling human hand tremor. The experiment conducted in this paper demonstrates the potential of increased self-awareness of hand tremor. The modified visual representation in VR helped the subjects perceive hand tremor information more clearly, and thus develop better control over their hand tremor, under both the tremor amplification and size magnification conditions. In other words, the visual stimulus made the subjects more aware of their hand tremor and gave them better control in manipulation. Our detailed analysis of hand tremor elements shows that the major element of hand tremor originates from the specific structures of arm joints and rotation movements, and demonstrates that the visual modification methods discussed reduced the major hand tremor elements effectively. This visual modification has the potential to be incorporated into microsurgical training systems for delicate and safe motion control of surgical tools.