The Korea Institute of Science and Technology Information (KISTI) has been studying the e-Science paradigm. Following its successful application to particle physics, we consider the application of the paradigm to astroparticle physics. The Standard Model of particle physics is still not considered complete, even though the Higgs boson has recently been discovered. Astrophysical evidence shows that dark matter exists in the universe, hinting at new physics beyond the Standard Model. Therefore, there are efforts to search for dark matter candidates using direct detection, indirect detection, and collider detection. There are also efforts to build theoretical models for dark matter. Current astroparticle physics involves large investments in theory and computing along with experiments. The complexity of such an area of research is explained within the framework of the e-Science paradigm. The idea of the e-Science paradigm is to unify experiment, theory, and computing. The purpose is to study astroparticle physics anytime and anywhere. In this paper, an example of the application of the paradigm to astroparticle physics is presented.

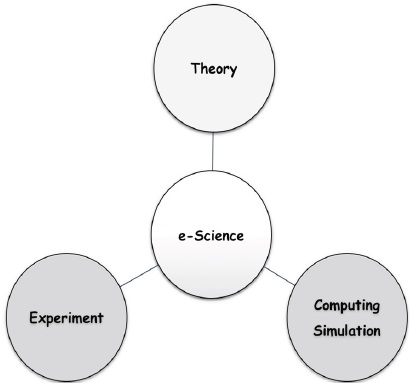

Current research can be analyzed through big data in the framework of the e-Science paradigm. The e-Science paradigm unifies experiments, theories, and computing simulations that are related to big data (Lin & Yen 2009). Hey explained that a few thousand years ago, science was described by experiments (Hey 2006). In the last few hundred years, science was described by theories, and in the last few decades, science was described by computing simulations (Hey 2006). Today, science is described by big data through the unification of experiments, theories, and computing simulations (Cho et al. 2011).

We introduce the e-Science paradigm in the search for new physics beyond the Standard Model, as shown in Fig. 1. It is not a mere collection of experiments, theories, and computing, but an efficient method of unifying research. In this paper, we show an application of the e-Science paradigm to astroparticle physics.

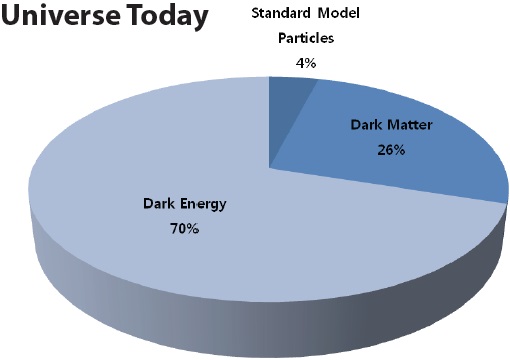

Dark matter is one of the three principal constituents of the universe. Precision measurements in flavor physics have confirmed the Cabibbo-Kobayashi-Maskawa (CKM) theory (Kobayashi & Maskawa 1973). However, the Standard Model leaves many unanswered questions in particle physics, such as the origin of generations and masses, and the mixing and abundance of antimatter. Astrophysical evidence indicates the existence of dark matter (Bertone et al. 2005). It has been established that the universe today consists of 26% dark matter and 4% Standard Model particles, as shown in Fig. 2. Therefore, there are efforts to search for dark matter candidates using direct detection, indirect detection, and collider detection, as these could hint at new physics beyond the Standard Model. The e-Science paradigm for astroparticle physics is introduced to facilitate research in astrophysics.

2.1 Experiment-Computing (Cyber-Laboratory)

For experiment-computing, we constructed the so-called “cyber-laboratory” e-Science research environment (Cho & Kim 2009). Collider experiments have three integral parts: data production, data processing, and data analysis. First, data production means taking online shifts from anywhere. Online shifts are taken not only in the on-site main control room, but also in off-site remote control rooms. Second, data processing is performed using a data handling system. Offline shifts can also be taken via the data handling system. The goal is to handle big data between user communities (Cho 2007). Third, data analysis is performed so that collaborators can work together as if they were on-site. One example is the Enabling Virtual Organization (EVO) system. In collider physics, we have applied the cyber-laboratory to the CDF experiment at Fermilab in the United States and the Belle experiment at KEK in Japan.

Computing simulation is an important addition to experiment-computing. The Large Hadron Collider (LHC) experiments show that computing simulations need more Central Processing Unit (CPU) power than the reconstruction of experimental data. Since the cross sections of particles in new physics (e.g., dark matter candidates) are much smaller ($<10^{-6}$ nb) than that of the Higgs boson ($10^{-3}$–$10^{-1}$ nb), that is, smaller by a factor of at least $10^{3}$, at least a thousand times more data is needed than for the discovery of the Higgs boson. Therefore, the development of simulation toolkits is an urgent issue. Big data from Monte Carlo (MC) simulation is also needed to relate the experimentally measured variables at the LHC experiments to the parameters of the underlying theories (Papucci & Hoeche 2012). MC simulation is well suited for running on a distributed computing architecture because of its parallel nature.
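The parallel nature of MC simulation can be illustrated with a minimal sketch. It assumes a toy event model (an exponentially distributed observable with a threshold cut) that is not taken from any of the toolkits discussed here; the names `simulate_chunk`, `run`, and `threshold` are hypothetical. Each chunk owns its own random generator, so chunks can be computed on different workers and merged afterwards without changing the result.

```python
import random
from concurrent.futures import ProcessPoolExecutor

def simulate_chunk(job):
    """Generate one independent chunk of toy events; return (n_pass, n_total)."""
    seed, n_events, threshold = job
    rng = random.Random(seed)  # each chunk owns its generator (simple seeding, a simplification)
    n_pass = sum(1 for _ in range(n_events) if rng.expovariate(1.0) > threshold)
    return n_pass, n_events

def run(n_chunks, events_per_chunk, threshold=1.0, workers=1):
    """Estimate the fraction of toy events passing the cut from independent chunks."""
    jobs = [(seed, events_per_chunk, threshold) for seed in range(n_chunks)]
    if workers > 1:
        # Chunks share no state, so they can be farmed out to separate processes.
        with ProcessPoolExecutor(max_workers=workers) as pool:
            results = list(pool.map(simulate_chunk, jobs))
    else:
        results = [simulate_chunk(job) for job in jobs]
    n_pass = sum(r[0] for r in results)
    n_total = sum(r[1] for r in results)
    return n_pass / n_total
```

Calling `run(8, 25_000, workers=4)` from inside an `if __name__ == "__main__":` guard distributes the eight chunks over four processes. Because the seeds are fixed per chunk, the estimate is the same as in the serial case; only the wall-clock time changes.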

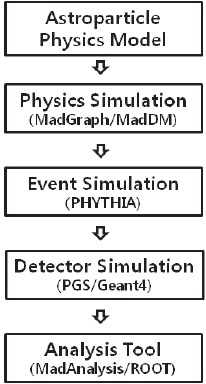

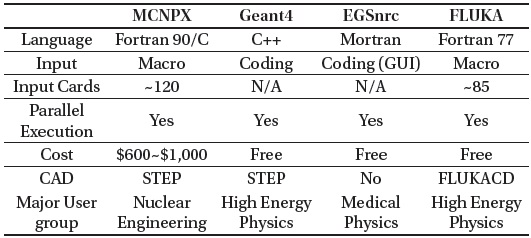

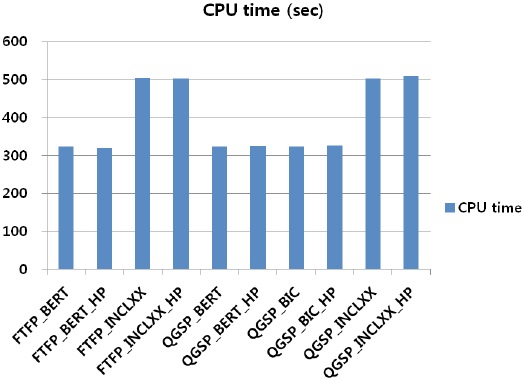

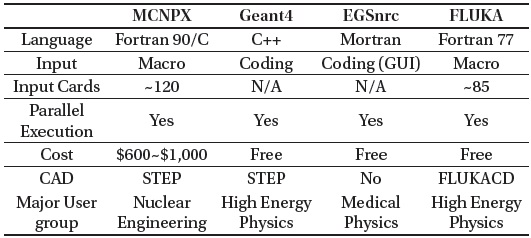

The flowchart of a simulation for an astroparticle physics model is shown in Fig. 3 (Cho et al. 2015). Starting from the astroparticle physics model, the Feynman diagrams are generated by MadGraph/MadDM (Alwall et al. 2014) and the events are generated by PYTHIA (Sjöstrand et al. 2014). Then, the detector simulation is performed using Geant4 (Agostinelli et al. 2003). In the last step, the output is analyzed by MadAnalysis (Eric et al. 2013) and ROOT (Antcheva et al. 2009). Astroparticle theory groups use supercomputers for MC simulations in physics beyond the Standard Model. The need for more CPU power demands a cost-effective computing solution (Cho et al. 2015). For this purpose, we need to study simulation toolkits. Table 1 shows a comparison of the general features of several MC simulation toolkits (Cho 2012).
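The order of the simulation chain described above can be sketched as a sequence of stages, each consuming the output of the previous one. This is only an illustration of the data flow: the stage functions are hypothetical placeholders that record which step ran, and they do not call MadGraph/MadDM, PYTHIA, Geant4, MadAnalysis, or ROOT.

```python
# Stage order follows the flowchart in the text; the tool names are labels only.
STAGES = [
    ("Feynman diagrams", "MadGraph/MadDM"),
    ("event generation", "PYTHIA"),
    ("detector simulation", "Geant4"),
    ("analysis", "MadAnalysis/ROOT"),
]

def make_stage(step, tool):
    """Build a placeholder stage; a real stage would run the tool on its input."""
    def stage(record):
        return record + [f"{step} ({tool})"]
    return stage

def run_pipeline(model_name):
    """Pass a record through every stage in order and return the history."""
    record = [f"model: {model_name}"]
    for step, tool in STAGES:
        record = make_stage(step, tool)(record)
    return record

for line in run_pipeline("toy dark matter model"):
    print(line)
```

Keeping every stage behind the same interface (a record in, a record out) is one possible design choice; it lets a single stage be swapped or benchmarked without touching the others.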

[Table 1.] Comparison of general features of several MC simulation toolkits (Cho 2012)


Geant4 is a toolkit that simulates the interaction of particles with matter. It can be applied not only to accelerator physics, nuclear physics, high energy physics, and medical physics, but also to cosmic ray research and space science (Agostinelli et al. 2003). Geant4 is more accurate than the other simulation toolkits of similar capabilities; however, it consumes much more CPU time. Therefore, we need to study parallelization and optimization of the Geant4 toolkit.

For theory-experiment, tools for both theoretical and experimental analysis were developed. Experimental results constrain theoretical models, and theoretical models in turn predict experimental results. Having already applied these tools to particle physics, we applied them to astroparticle physics.

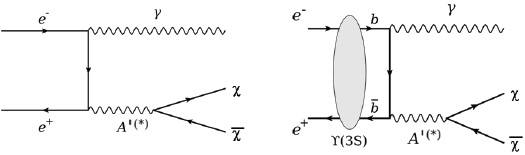

Using the cyber-laboratory for experiment-computing, dark matter production at an

For computing-theory, we show an example of Graphics Processing Unit (GPU) machines used in lattice Quantum Chromodynamics (QCD). Lattice QCD is a non-perturbative approach to solving QCD (Wilson 1974), and it is well suited for GPU machines: compared to a CPU, a GPU has hundreds of cores optimized to process information simultaneously. We applied simulation toolkits to study the finite volume effects in lattice QCD (Kim & Cho 2015). The Charge-Parity (CP) violation parameter

We also studied the optimization of the Geant4 simulation toolkit by comparing the CPU time consumed by various physics models. We generated one million events for each physics model and measured the CPU time. The beam was 1 GeV uranium and the target was liquid hydrogen. We tested Geant4 version 10.2, which was released on December 4, 2015, on the Tachyon II supercomputer at KISTI. Fig. 6 shows the CPU time for the various physics models. We then compared the simulation results for each physics model with the experimental data to determine the optimal physics model.
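The kind of measurement described above, CPU time per physics model for a fixed number of events, can be sketched as follows. This is not the benchmark used in the study: `toy_model`, `model_A`, and `model_B` are hypothetical stand-ins whose cost per event is set by hand, and no Geant4 code is called.

```python
import time

def cpu_time_per_model(models, n_events):
    """Return {model_name: CPU seconds spent generating n_events}."""
    timings = {}
    for name, generate_event in models.items():
        start = time.process_time()  # CPU time of this process, not wall-clock time
        for event_id in range(n_events):
            generate_event(event_id)
        timings[name] = time.process_time() - start
    return timings

def toy_model(work):
    """Hypothetical stand-in for a physics model; 'work' sets the cost per event."""
    def generate_event(event_id):
        acc = 0.0
        for step in range(work):
            acc += (event_id + step) % 7
        return acc
    return generate_event

models = {"model_A": toy_model(50), "model_B": toy_model(200)}
timings = cpu_time_per_model(models, n_events=2_000)
for name, seconds in sorted(timings.items(), key=lambda kv: kv[1]):
    print(f"{name}: {seconds:.3f} s CPU")
```

Measuring CPU time rather than wall-clock time keeps the comparison less sensitive to other load on a shared machine. CPU time alone does not select a model, which is why the comparison with experimental data remains a separate step.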

For theory-experiment, we applied to astroparticle physics the method already applied to particle physics. In the application to particle physics, we compared the results of collider experiments with those of the left-right model (Cho & Nam 2013). Using the effective Hamiltonian approach, the amplitude of

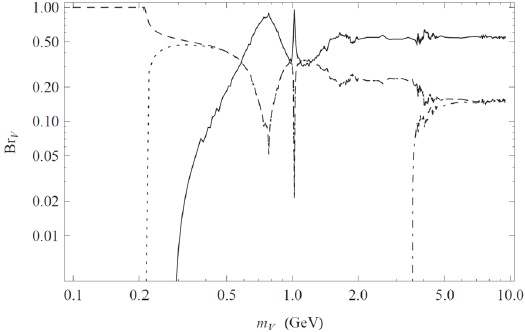

This method can be applied to astroparticle physics. A recent paper shows that there is feedback from stellar objects and a new light

As in particle physics, the e-Science paradigm that unifies experiment, theory, and computing has been introduced in astroparticle physics. For experiment-computing, we use a cyber-laboratory to study the problem of dark matter anytime and anywhere. For computing-theory, we study theoretical models using computing simulations and improve simulation toolkits to reduce CPU time. For theory-experiment, theoretical models are constructed to describe the experimental results.

The research on dark matter and the dark photon is an example of physics beyond the Standard Model and of the application of the e-Science paradigm to astroparticle physics.