We develop a new method to globally calibrate the feature points derived from binocular systems at different positions. A three-DOF (degree of freedom) global calibration system is established to move and rotate the 3D calibration board to an arbitrary position, and a three-DOF global calibration model is constructed for the binocular systems at different positions. The model unifies the 3D coordinates of the feature points from different binocular systems into a unique world coordinate system that is determined by the initial position of the calibration board. Experiments are conducted on the binocular systems at the coaxial and diagonal positions. The experimental root-mean-square errors between the true and reconstructed 3D coordinates of the feature points are 0.573 mm, 0.520 mm and 0.528 mm at the coaxial positions, and 0.495 mm, 0.556 mm and 0.627 mm at the diagonal positions. This method provides a global and accurate calibration that unifies the measurement points of different binocular vision systems into the same world coordinate system.

The binocular vision system was proposed in the 1870s and offers the advantages of non-contact inspection and high measurement precision [1-3]. It has been widely applied to motion parameter testing, measurement of machine parts, parameter detection of micro-operating systems, 3D profile reconstruction [4-7], etc. It has also gradually spread into the aerospace, robot navigation, industrial measurement and bio-medical areas [8-13]. System calibration is the basis on which binocular vision obtains 3D coordinates. It provides the conversion relationship between the 2D coordinates in the camera images and the 3D coordinates of the objects [3, 14, 15]. As the measurement accuracy of the binocular vision system depends on the camera calibration, research on camera calibration methods has considerable theoretical importance and practical value.

Monocular vision measurement refers to the method in which a camera takes a single photo for the measurement work [16]. It is difficult to achieve real-time large-scale mapping with a monocular camera, due to the purely projective nature of the sensor [17, 18]. In contrast, the binocular vision system adopts two cameras, which obtain the 3D coordinates by matching the corresponding points [19-21]. In this paper, we study the global calibration method of binocular vision systems that are located at different positions. Previous calibration methods can be divided into three categories according to the dimension of the calibration object: 1D objects, 2D objects and 3D objects [7, 22]. The 1D-object-based calibration was proposed a few years ago and required an object consisting of more than three feature points [23]. The 1D calibration object can be conveniently moved, which helps to solve the blocking problems among multiple cameras. However, as the constraints provided by an unrestricted 1D object cannot completely identify the internal and external parameters of the camera, the motion of the 1D object is usually strictly constrained [24]. As for the 2D calibration object, a calibration plate with a 2D gray-modulated sinusoidal fringe pattern is chosen to extract the phase feature points as the 2D calibration data [25-27]. Knowledge of the plane motion is not necessary in this calibration and the setup is easier. However, it cannot deal with the occlusion and depth-related problems [28, 29]. A 2D calibration object was reported to calibrate a binocular vision system used for vision measurement [12]. A precise calibration method was proposed for a binocular vision system, which is devoted to minimizing the metric distance error between the reconstructed point and the real point in a 3D measurement coordinate system.
The measurement accuracy of one binocular vision system is improved compared with the traditional method. For a vision measurement with multiple binocular vision systems, the measurement world coordinates of one binocular vision system differ from those of another because the 2D object is at a different position for each system. Therefore, the measurement world coordinates of one binocular vision system should be transformed to those of another. Moreover, it is difficult for multiple binocular vision systems to observe the front view of a 2D object simultaneously. As for the 3D calibration object, it usually consists of two or three planes orthogonal to each other [30, 31]. The highest accuracy is usually obtained by using a 3D calibration object [32], but this approach requires expensive calibration apparatus and an elaborate setup [20, 33]. A global calibration method of a laser plane has been proposed, adopting a 3D calibration board to generate two horizontal coordinates and a height gauge to generate the height coordinate of the point in the laser plane [34]. The method is performed by a camera and a 3D calibration object. However, a fixed 3D object is unable to calibrate a measurement system that consists of multiple binocular vision systems. One binocular vision system captures images of the front view of the 3D object, while the binocular vision system opposite to it is blocked by the back of the 3D object. This blocking problem in the 3D calibration makes simultaneous observation by all involved cameras impossible. Thus, we propose a three-degrees-of-freedom (three-DOF) calibration method that translates and rotates the 3D calibration board to solve the view-blocking problems between opposite binocular systems. The measurement coordinates of multiple binocular vision systems are also unified in a unique coordinate system.

A three-DOF global calibration method for binocular vision measurement is explored in this paper, which unites the measurements of the binocular vision systems at different positions into a single world coordinate system. The rest of this paper is organized as follows: Section 2 presents the principle of the three-DOF global calibration system that calibrates the binocular systems at the original, coaxial and diagonal positions. Section 3 proposes a three-DOF calibration model. According to the model, the coordinates of the feature points at the coaxial and diagonal positions are unified into the same world coordinate system. Section 4 outlines the global calibration experiments at the coaxial and diagonal positions. Section 5 summarizes this paper.

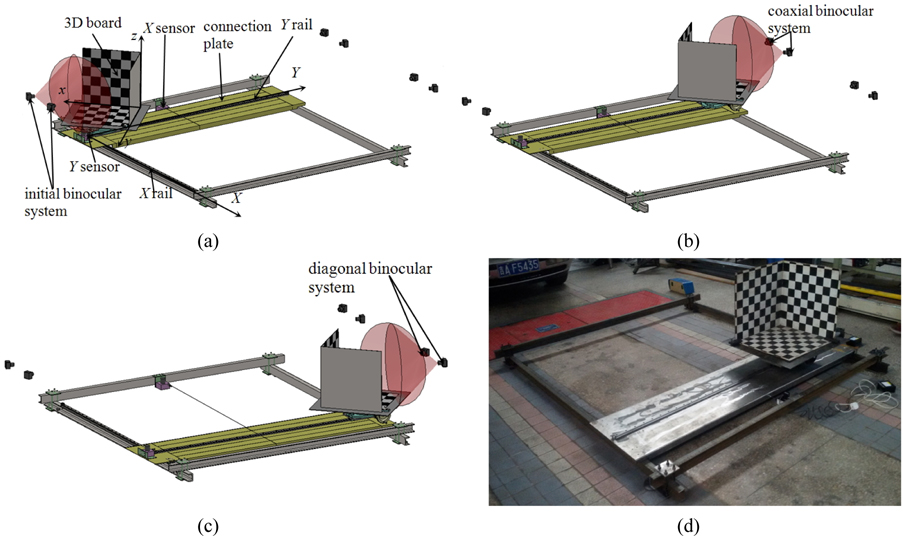

II. Three-DOF CALIBRATION SYSTEM

The 3D calibration board is chosen as the benchmark of a single measurement system with binocular vision, which takes advantage of the simple and accurate calibration process. However, it is difficult to perform the global calibration among several binocular measurement systems in a large view field because of the limited size of the 3D calibration board and the perspective blocking problem.

Considering the complicated process of manufacturing a large 3D calibration board, we present a three-DOF calibration system that moves the 3D calibration board along a precise rail by a known distance and rotates it on a turntable by a known angle within the large view field. The three-DOF global calibration system unites the measurement results of the binocular vision systems at different positions into a single world coordinate system.

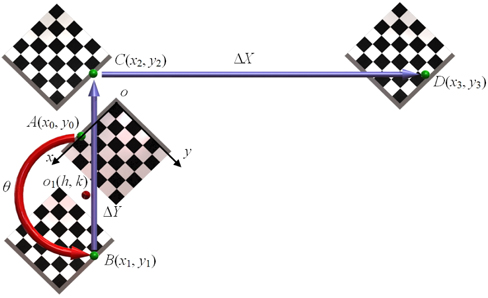

The structure of the global calibration system for binocular measurement systems is shown in Fig. 1. The three-DOF calibration system is mainly composed of a translational subassembly and a rotational subassembly. The translational subassembly mainly consists of a base frame, a connection plate, an

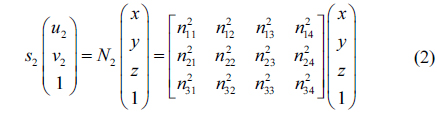

III. Three-DOF CALIBRATION MODEL

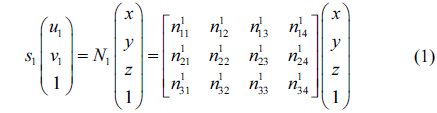

The imaging model of the binocular vision is indicated as [4]

where (

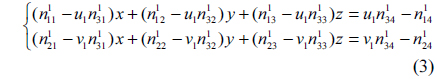

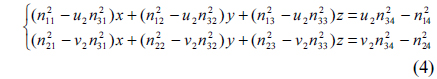

By eliminating the two scale factors

From Eqs. (3) and (4), the 3D coordinates of the feature points are reconstructed by the transformation matrices and image coordinates of the feature points.
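The reconstruction step described above can be illustrated with a minimal sketch. It assumes each camera is described by a 3×4 projection matrix and that eliminating the two scale factors leaves four linear equations in the three unknown coordinates, which are solved here in the least-squares sense. The function names (`det3`, `solve3`, `triangulate`) and the use of normal equations with Cramer's rule are illustrative choices, not necessarily the formulation used in this paper.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, b):
    """Solve the 3x3 linear system m x = b by Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("singular system: the two views do not constrain the point")
    solution = []
    for c in range(3):
        # Replace column c of m with the right-hand side b.
        mc = [[b[r] if j == c else m[r][j] for j in range(3)] for r in range(3)]
        solution.append(det3(mc) / d)
    return solution


def triangulate(P_left, P_right, uv_left, uv_right):
    """Least-squares 3D point from two 3x4 projection matrices and pixel coordinates."""
    rows, rhs = [], []
    for P, (u, v) in ((P_left, uv_left), (P_right, uv_right)):
        for coord, r in ((u, 0), (v, 1)):
            # From s*coord = P[r].(X,Y,Z,1) and s = P[2].(X,Y,Z,1), eliminate s.
            rows.append([coord * P[2][j] - P[r][j] for j in range(3)])
            rhs.append(P[r][3] - coord * P[2][3])
    # Normal equations (A^T A) x = A^T b for the 4x3 system.
    N = [[sum(rows[k][i] * rows[k][j] for k in range(4)) for j in range(3)]
         for i in range(3)]
    t = [sum(rows[k][i] * rhs[k] for k in range(4)) for i in range(3)]
    return solve3(N, t)
```

With noisy image coordinates the four equations are no longer exactly consistent, and the least-squares solution is one reasonable compromise among them; other reconstruction criteria are possible.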

When we globally calibrate the binocular system on the coaxial or diagonal position, the 3D calibration board is moved from the initial position to the coaxial or diagonal position of the rectangle constructed by the frames. A laser projector is adopted to project a global horizontal laser plane at the initial position. Two horizontal lines on the calibration plate coincide with the laser plane by adjusting the height of the calibration board. Thus, the

The intersection point of the three planes of the 3D calibration board in the initial position is taken as the global origin

The three-DOF calibration model is shown in Fig. 2. When the binocular system on the coaxial position is globally calibrated, the 3D calibration board on the initial position rotates around the axis of the turntable, moves along the

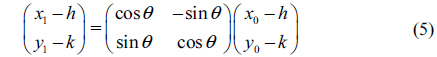

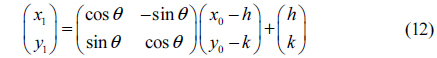

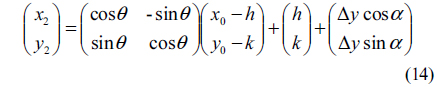

The feature points of the 3D calibration board are purely rotated around the axis of the turntable, while the feature points in the rotation formula should be rotated around the origin of the coordinate system. Therefore, the misalignment between the axis of the turntable and the origin of the coordinate system should be considered when we perform the rotation transformations of the feature points. The

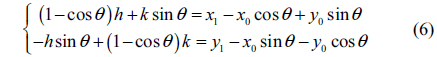

The result of the above matrix multiplication is represented as

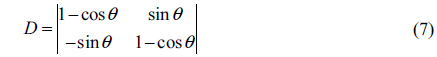

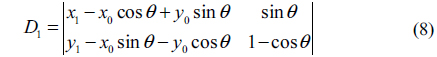

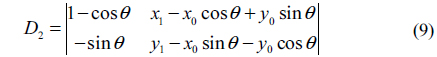

The coefficient determinants are represented by

The coordinates (

Therefore, the 3D calibration board is rotated by a known angle

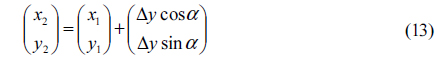
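The effect of the misalignment between the turntable axis and the coordinate origin can be illustrated with a short sketch. It assumes, purely for illustration, that the turntable axis is parallel to the Z axis of the world frame and passes through a known point `axis_xy`; the function name, the parameter names and the axis convention are hypothetical and not taken from this paper. Each point is first shifted so that the axis passes through the origin, then rotated, shifted back, and finally translated along the rails.

```python
import math


def rotate_about_axis_then_translate(point, angle_deg, axis_xy, shift):
    """Rotate a 3D point about a vertical axis through axis_xy, then translate it.

    point     : (x, y, z) in the world frame
    angle_deg : rotation angle of the turntable in degrees
    axis_xy   : (cx, cy), assumed location of the turntable axis (hypothetical)
    shift     : (tx, ty, tz), translation applied after the rotation
    """
    x, y, z = point
    cx, cy = axis_xy
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    # Move the axis to the origin, rotate in the XY plane, then move back.
    dx, dy = x - cx, y - cy
    xr = cx + c * dx - s * dy
    yr = cy + s * dx + c * dy
    return (xr + shift[0], yr + shift[1], z + shift[2])
```

For example, rotating the point (10, 0, 5) by 180 degrees about an axis through (100, 50) gives approximately (190, 100, 5), whereas a rotation about the origin would give (-10, 0, 5). The difference is the offset that the model has to account for.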

In the calibration of the binocular system at the coaxial position, the 3D calibration board is rotated by 180 degrees and moved along the

where

As the point

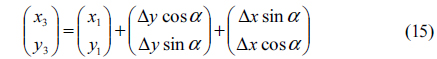

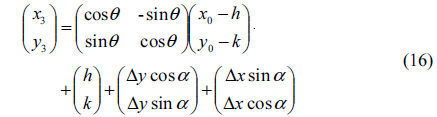

In the calibration of the binocular system at the diagonal position, the 3D calibration board is rotated by 180 degrees and moved along the

As the point

The coordinate (

In the experiments, the size of the selected 3D calibration board is 500 mm × 500 mm × 500 mm. The plane pattern of the calibration board is a checkerboard of squares, each 60 mm × 60 mm. Eighteen evenly distributed points on the two sides of the calibration board are taken as the feature points. The measurement scope and accuracy of the displacement sensors in the

Three global calibration experiments are conducted to unite the measurement results of the binocular vision system at the coaxial position into the world coordinate system at the initial position. The 3D calibration board moves along the

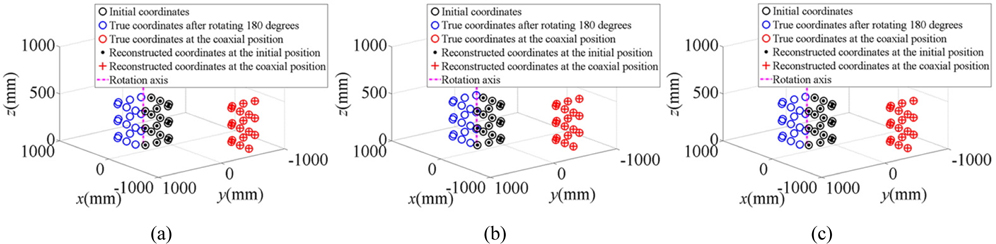

According to the calibration processes of the coaxial position, the 3D world coordinates at different positions are reconstructed in Fig. 3. In the experiments, we compare the world coordinates of the reconstructed feature points with those of the true feature points. The coordinates of the reconstructed feature points are obtained from the images captured by the binocular system. In Fig. 3, the reconstructed feature points on the 3D calibration board are rotated by 180 degrees from the initial position. Then the board moves along the coaxial direction and finally reaches the coaxial position. The reconstructed and the true coordinates of the feature points at the coaxial position coincide closely.

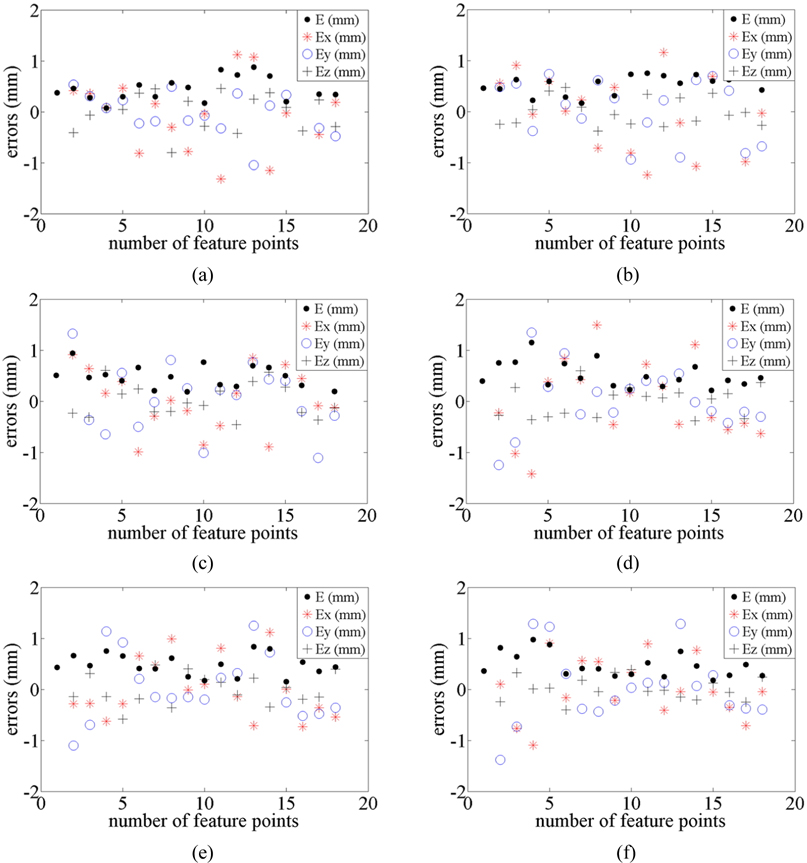

In order to further analyze the accuracy of the reconstructed results, in the three experiments, the differences between the true and reconstructed 3D coordinates of the feature points at the initial position are indicated in Figs. 4(a)~4(c), respectively. The differences between the true and reconstructed 3D coordinates of the feature points at the coaxial position are indicated in Figs. 4(d)~4(f), respectively.
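The error statistic used in this evaluation can be sketched as follows, assuming the root-mean-square error is computed separately for each coordinate axis over all feature points. The function name `rmse_per_axis` and the list-of-tuples data layout are illustrative assumptions.

```python
import math


def rmse_per_axis(true_pts, recon_pts):
    """Root-mean-square error along each of the three axes.

    true_pts, recon_pts : equal-length sequences of (x, y, z) tuples in mm
    Returns a tuple (rmse_x, rmse_y, rmse_z) in the same unit.
    """
    if not true_pts or len(true_pts) != len(recon_pts):
        raise ValueError("point lists must be non-empty and of equal length")
    n = len(true_pts)
    return tuple(
        math.sqrt(sum((r[k] - t[k]) ** 2 for t, r in zip(true_pts, recon_pts)) / n)
        for k in range(3)
    )
```

Reporting the error per axis rather than as a single Euclidean distance makes it possible to see which direction of the reconstruction is least accurate.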

In Figs. 4(a)~4(c), the range of the maximal errors of the 3D calibration board at the initial position is −1.5 mm to 1.5 mm in the three experiments. The root-mean-square errors of the first group are 0.725 mm, 0.461 mm and 0.348 mm in the

In Fig. 4(a), the error range is −1.5 mm to 1.5 mm in the

Three global calibration experiments are performed to unite the measurement results of the binocular vision system at the diagonal position into the world coordinate system at the initial position. The 3D calibration board moves along the

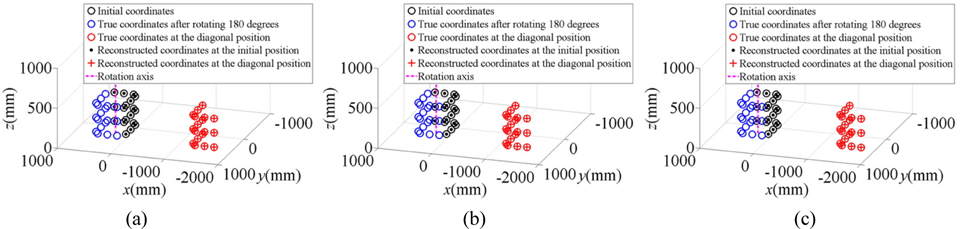

According to the calibration processes of the diagonal position, the 3D world coordinates at different positions are reconstructed in Fig. 5. In Fig. 5, the reconstructed feature points on the 3D calibration board are rotated by 180 degrees from the initial position. Then the board moves along the

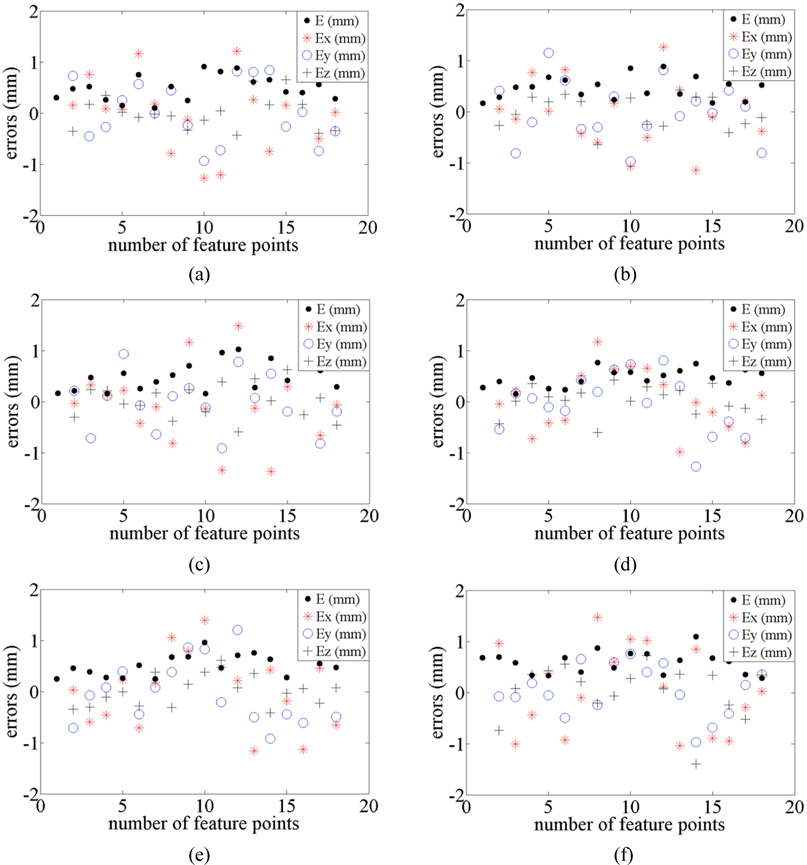

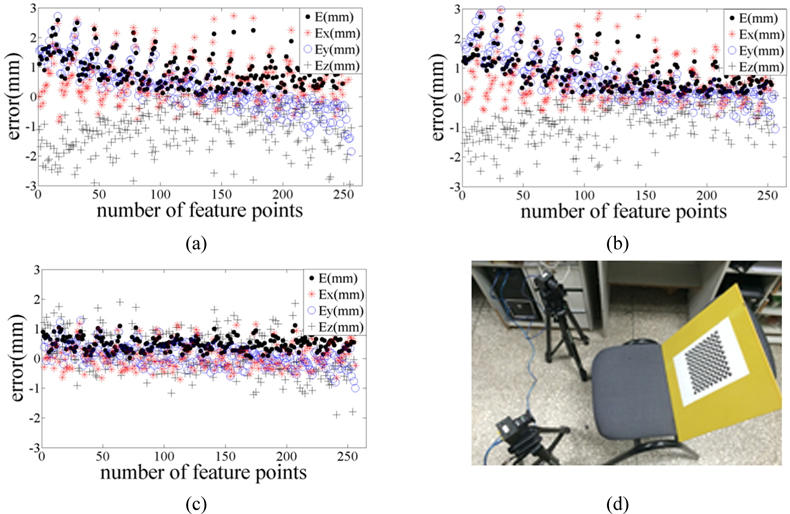

In the three experiments, the differences between the true and reconstructed 3D coordinates of the feature points at the initial position are indicated in Figs. 6(a)~6(c), respectively. The range of the maximal errors of the 3D calibration board at the initial position is −1.5 mm to 1.5 mm. The root-mean-square errors of the first experiment are 0.690 mm, 0.571 mm and 0.312 mm in the

In Fig. 6(a), the scopes of the errors in the

We perform calibration experiments for a binocular vision system with a 2D calibration board. The projection matrices of the two cameras are determined by Zhang’s method [27]. The experimental results are shown in Figs. 7(a)~7(d). The measurement results are also evaluated by the reconstruction errors. The experimental root-mean-square errors between the true and reconstructed 3D coordinates of the feature points are 0.989 mm, 1.048 mm and 0.546 mm. The root-mean-square errors of the three groups of experiments are 0.963 mm, 0.952 mm, 0.421 mm in the

This paper addresses a global calibration method with a three-DOF calibration system. The model of the three-DOF global calibration system is studied, in which the 3D calibration board is moved to an arbitrary position. According to the motion law of the three-DOF global calibration system at different positions, we deduce the global calibration model of the binocular systems at different positions. The measurement results of the binocular systems at different positions are unified into a world coordinate system that is determined by the initial position of the calibration board. Three experiments of the binocular systems at each of the coaxial and diagonal positions are conducted to verify the global calibration method of the vision measurement systems. The root-mean-square errors between the true and reconstructed 3D coordinates of the feature points are 0.573 mm, 0.520 mm and 0.528 mm at the coaxial position, and 0.495 mm, 0.556 mm and 0.627 mm at the diagonal position, respectively. The reconstruction errors of the global calibration system for the binocular measurement systems are smallest in the direction that is perpendicular to the baseline and the measurement distance. The method unifies the measurement results of the binocular vision systems at different positions into the same world coordinate system, which is of great significance in the research field of vision-based measurement.