There are several methods for recording three-dimensional (3D) information of objects, such as lens-array-based integral imaging, synthetic aperture integral imaging (SAII), computer synthesized integral imaging (CSII), axially distributed image sensing (ADS), and axially distributed stereo image sensing (ADSS). The ADSS method is capable of recording partially occluded 3D objects and reconstructing high-resolution slice plane images. In this paper, we present a computational method for depth extraction of partially occluded 3D objects using ADSS. In the proposed method, high-resolution elemental stereo image pairs are recorded by simply moving the stereo camera along the optical axis, and the recorded elemental image pairs are used to reconstruct 3D slice images with a computational reconstruction algorithm. To extract depth information of a partially occluded 3D object, we apply edge enhancement and a simple block matching algorithm between the two images of a reconstructed slice image pair. To demonstrate the proposed method, we carry out preliminary experiments and present the results.

Depth extraction from three-dimensional (3D) objects in the real world is attracting a great deal of interest in diverse fields such as computer vision, 3D display, and 3D recognition [1-3]. In particular, 3D passive imaging makes it possible to extract depth information by recording different perspectives of 3D objects [3-9]. Among 3D passive imaging technologies, axially distributed stereo image sensing (ADSS) has recently been studied [8]. In ADSS, the stereo camera is translated along its optical axis and many elemental image pairs of a 3D scene are collected. The collected images are reconstructed as 3D slice images using a computational reconstruction algorithm based on ray back-projection. ADSS is attractive because it provides high-resolution elemental images and requires only a simple axial movement.

Partial occlusion has been one of the challenging problems in 3D image processing. In conventional stereo imaging [1,2], two different perspective images are used for 3D information extraction by means of a corresponding pixel matching technique. However, it is difficult to obtain accurate 3D information of partially occluded objects, because parts of the object image may be hidden by the occlusion and the correspondences are then lost.

In this paper, we present a computational method for depth extraction of partially occluded 3D objects using ADSS. In the proposed method, high-resolution elemental image pairs (EIPs) are recorded by simply moving the stereo camera along the optical axis, and the recorded EIPs are used to reconstruct 3D slice images with a computational reconstruction algorithm. To extract depth information of a partially occluded 3D object, we apply an edge-enhancement filter and a block matching algorithm between the two images of a reconstructed slice image pair. To demonstrate the proposed method, we carry out preliminary experiments and present the results.

II. DEPTH EXTRACTION OF PARTIALLY OCCLUDED 3D OBJECTS USING ADSS

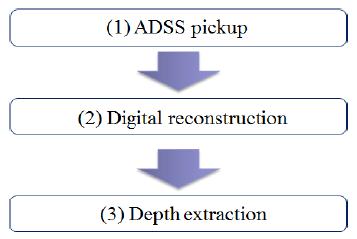

The proposed depth extraction method using ADSS for partially occluded 3D objects is shown in Fig. 1. It consists of three processes: the ADSS pickup process, the digital reconstruction process, and the depth extraction process.

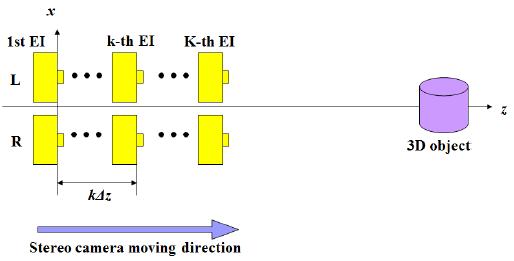

Fig. 2 shows the recording process of 3D objects in the ADSS system. We record EIPs by moving the stereo camera along its optical axis. We suppose that the optical axis is the center of the two cameras located at


C. Digital Reconstruction in ADSS

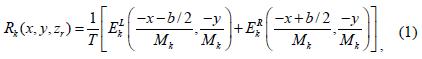

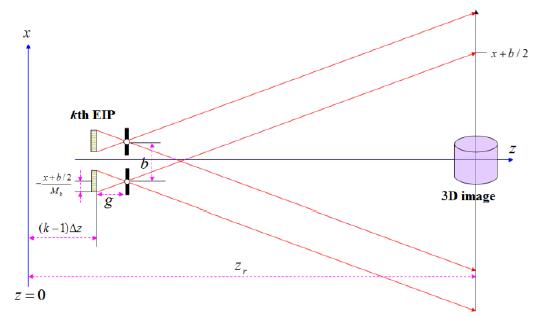

The second process of the proposed method is the computational reconstruction using the recorded EIPs. Fig. 3 illustrates the digital reconstruction process. The computational reconstruction is based on the inverse ray projection model [8]. We suppose that the reconstruction plane is at distance

where

and

Then, the reconstructed 3D slice image at (

In the depth extraction process of the proposed method, we extract depth information from the slice images produced by the digital reconstruction process. Each reconstructed slice image is a mixture of focused and blurred image regions, and this mixture varies with the reconstruction distance.

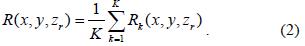

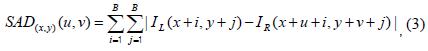

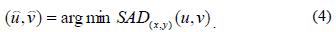

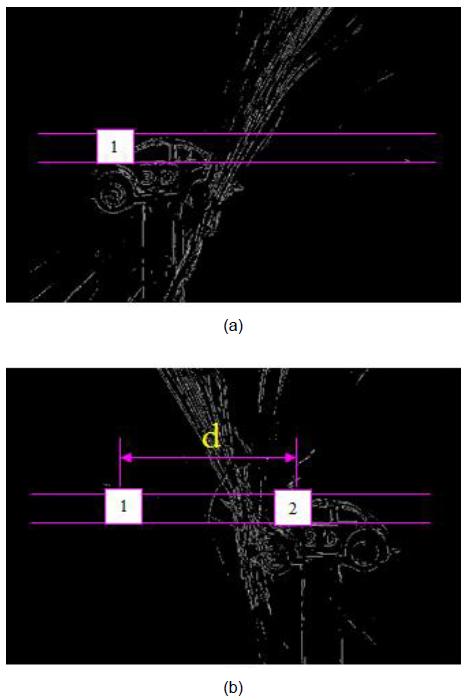

A focused image of a 3D object is reconstructed only at the original position of the object, whereas blurred images appear at other reconstruction distances. Based on this principle, we find the focused parts of the reconstructed slice images. To do so, we first apply an edge-enhancement filter to the slice images. Next, we extract depth information from the edge-enhanced images using the depth extraction algorithm shown in Fig. 4. In general, block matching minimizes a measure of matching error between two images. The matching error between the blocks at the position (

where the block size is B×B. Using the sum of absolute differences (SAD) result, the best estimate is defined to be the (

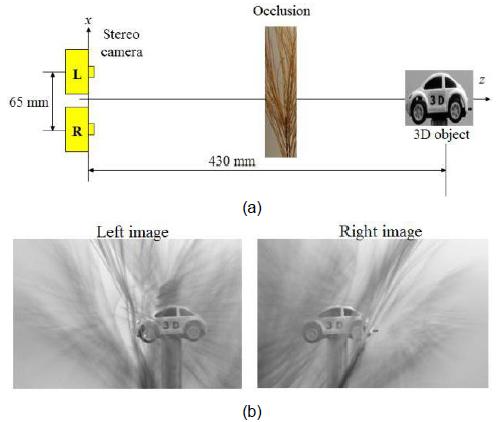

To demonstrate our depth extraction method using ADSS, we performed preliminary experiments with partially occluded 3D objects. The experimental setup is shown in Fig. 5(a). The 3D object is a toy car positioned 430 mm from the first stereo camera. The occluding object is a tree located at 300 mm. Two separate cameras are used as the stereo camera, with a baseline of 65 mm. Each camera has an image sensor of 2184×1456 pixels. Two lenses with focal length

Next, to reconstruct slice images of the partially occluded 3D objects, the 41 recorded EIPs were applied to the computational reconstruction algorithm shown in Fig. 3. The 2D slice images of the 3D objects were obtained for a range of reconstruction distances. Some reconstructed slice images are shown in Fig. 5(b). The reconstructed slice image is focused on the car object. Here, the reconstruction plane was 430 mm from the sensor, which is where the car object was originally located.
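As a rough illustration of how a slice image can be formed from axially recorded images, the sketch below rescales each image about its center to a common magnification and averages the results, so that only content at the chosen distance stays aligned. It is not the reconstruction equation of this paper. It assumes an ideal pinhole camera that moves toward the scene by a fixed `step` per shot, and the names `rescale_about_center`, `reconstruct_slice`, `z`, and `step` are hypothetical.

```python
import numpy as np

def rescale_about_center(img, s):
    """Nearest-neighbour rescale of a 2D image by factor s about its centre.

    Returns the rescaled image and a boolean mask of pixels that received data.
    """
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    # Output pixel at offset u from the centre samples the source at offset u / s.
    sy = np.rint(cy + (ys - cy) / s).astype(int)
    sx = np.rint(cx + (xs - cx) / s).astype(int)
    valid = (sy >= 0) & (sy < h) & (sx >= 0) & (sx < w)
    out = np.zeros((h, w), dtype=np.float64)
    out[valid] = img[sy[valid], sx[valid]]
    return out, valid

def reconstruct_slice(images, z, step):
    """Average axially recorded images after normalising their magnification.

    Assumption: image k was taken at distance z - k * step from the plane of
    interest, so a pinhole camera magnifies that plane by z / (z - k * step)
    relative to the first image. Rescaling by the inverse factor aligns it.
    """
    acc = np.zeros(images[0].shape, dtype=np.float64)
    cnt = np.zeros(images[0].shape, dtype=np.float64)
    for k, img in enumerate(images):
        s = (z - k * step) / z          # must stay positive
        out, valid = rescale_about_center(img, s)
        acc += out
        cnt += valid
    # Divide by the number of images that contributed to each pixel.
    return acc / np.maximum(cnt, 1.0)
```

Under these assumptions the function would be called once for the left image sequence and once for the right one, at each candidate distance `z`, to obtain a pair of slice images per distance.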

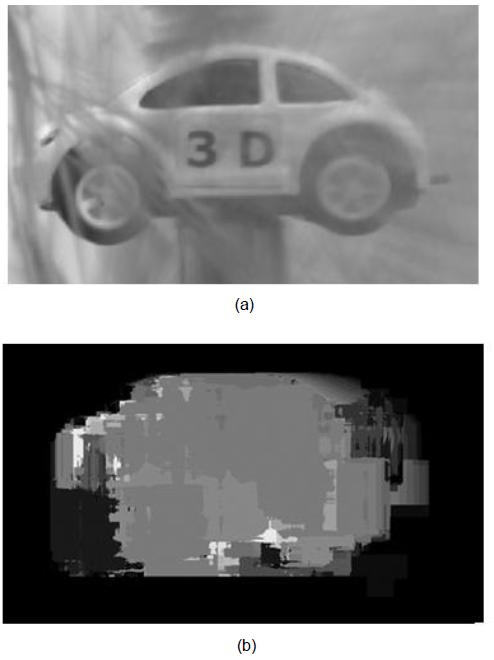

We then estimated the depth of the object using the depth extraction process described in Eqs. (3) and (4). We applied the edge-enhancement filter to the slice image shown in Fig. 5(b) and extracted depth information from the edge-enhanced images. The block size was 8×8. The estimated depths are shown in Fig. 6(b). This result shows that the proposed method can extract the 3D information of the partially occluded object shown in Fig. 6(a).
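The edge-enhancement and block-matching steps can be sketched as follows. This is one plausible reading of the procedure rather than the authors' implementation: it assumes a 4-neighbour Laplacian as the edge-enhancement filter, compares co-located 8×8 blocks of the left and right slice images with the sum of absolute differences (SAD), and assigns each block the reconstruction distance with the smallest SAD. The function names and the `distances` argument are hypothetical.

```python
import numpy as np

def edge_enhance(img):
    """Absolute 4-neighbour Laplacian (an assumed choice of edge filter)."""
    p = np.pad(np.asarray(img, dtype=np.float64), 1, mode="edge")
    lap = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
           - 4.0 * p[1:-1, 1:-1])
    return np.abs(lap)

def block_sad(a, b, block=8):
    """SAD between co-located block x block tiles of two equal-size images."""
    h, w = a.shape
    hb, wb = h // block, w // block
    d = np.abs(a[:hb * block, :wb * block] - b[:hb * block, :wb * block])
    # Split rows and columns into (tile index, offset inside tile), then sum offsets.
    return d.reshape(hb, block, wb, block).sum(axis=(1, 3))

def depth_from_slices(left_slices, right_slices, distances, block=8):
    """Per-block depth: the distance whose left/right edge images match best."""
    sad = np.stack([
        block_sad(edge_enhance(l), edge_enhance(r), block)
        for l, r in zip(left_slices, right_slices)
    ])
    best = sad.argmin(axis=0)           # index of the minimum-SAD slice per block
    return np.asarray(distances)[best]
```

Blocks without texture give a SAD near zero at every distance, so a practical version would likely mask them out, for example by thresholding the edge energy inside each block.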

In conclusion, we have presented a depth extraction method using ADSS. In the proposed method, high-resolution EIPs were recorded by simply moving the stereo camera along the optical axis, and the recorded EIPs were used to generate a set of 3D slice images with a computational reconstruction algorithm. To extract the depth of a 3D object, an edge-enhancement filter and a block matching algorithm between two slice images were used. To demonstrate our method, we performed preliminary experiments with partially occluded 3D objects. The experimental results indicate that depth information can be extracted because ADSS provides clear images of partially occluded 3D objects.