In “A Computational Foundation for the Study of Cognition” David Chalmers articulates, justifies and defends the computational sufficiency thesis (CST). Chalmers advances a revised theory of computational implementation, and argues that implementing the right sort of computational structure is sufficient for the possession of a mind, and for the possession of a wide variety of mental properties. I argue that Chalmers’s theory of implementation is consistent with the nomological possibility of physical systems that possess different entire minds. I further argue that this brain-possessing-two-minds result challenges CST in three ways. It implicates CST with a host of epistemological problems; it undermines the underlying assumption that the mental supervenes on the physical; and it calls into question the claim that CST provides conceptual foundations for the computational science of the mind.

In “A Computational Foundation for the Study of Cognition” (forthcoming) David Chalmers articulates, justifies and defends the

My aim here is to argue that Chalmers’s theory of implementation does not block a different challenge to CST. The challenge, roughly, is that some possible physical systems simultaneously implement different computational structures (or different states of the same computational structure) that suffice for cognition; hence these systems simultaneously possess different minds. Chalmers admits this possibility elsewhere (1996). But I argue that it is more than just a remote, implausible scenario, and that it renders CST less plausible.

Chalmers writes that by justifying CST “we can see that the foundations of artificial intelligence and computational cognitive science are solid.” I wish to emphasize that my argument against CST is not meant to undermine in any way the prospects of the theoretical work in brain and cognitive sciences.1 My conclusion, rather, is that CST is not the way to construe the conceptual foundations of computational brain and cognitive sciences. While I do not offer here an alternative conceptual account, I have outlined one elsewhere (Shagrir 2006; 2010), and intend to develop it in greater detail in future.

The paper has three parts. In the next section I discuss the notion of implementation. I point out that Chalmers’s theory is consistent with the nomological possibility of physical systems that simultaneously implement different complex computational structures. In Section 3 I say something about the current status of CST. In Section 4 I argue that the possibility of simultaneous implementation weakens the case for CST.

1 This is in sharp contrast to Searle, who concludes from his universal implementation argument that “there is no way that computational cognitive science could ever be a natural science” (1992: 212).

Hilary Putnam (1988) advances the claim that every physical system that satisfies very minimal conditions implements every finite state automaton. John Searle (1992) argues that the wall behind him implements the Wordstar program. Chalmers argues that both Putnam and Searle assume an unconstrained notion of implementation, one that falls short of satisfying fairly minimal conditions, such as certain counterfactuals. Chalmers (1996) formulates his conditions on implementation in some detail. He further distinguishes between a finite state automaton (FSA) and a combinatorial state automaton (CSA) whose states are combinations of vectors of parameters, or sub-states. When these conditions are in place, we see that a rock does not implement every finite state automaton; it apparently does not implement even a single CSA.2

I do not dispute these claims. Moreover, I agree with Chalmers on further claims he makes concerning implementation. One claim is that every system apparently implements an FSA: A rock implements a trivial one-state automaton. Another claim is that many physical systems typically implement more than one FSA. In particular, if a system implements a more complex FSA, it typically simultaneously implements simpler FSAs. A third claim is that relatively few physical systems implement very complex CSAs. In particular, very few physical systems implement a CSA that (

Nevertheless, I want to point out that one could easily construct systems that simultaneously implement different complex CSAs. I will illustrate this claim with the example of a simple automaton.3 The description I use is somewhat different from the one used by Chalmers. Chalmers describes automata in terms of “total states.” I describe them in terms of gates (or “neural cells”), which are the bases of real digital computing. The two descriptions are equivalent.4

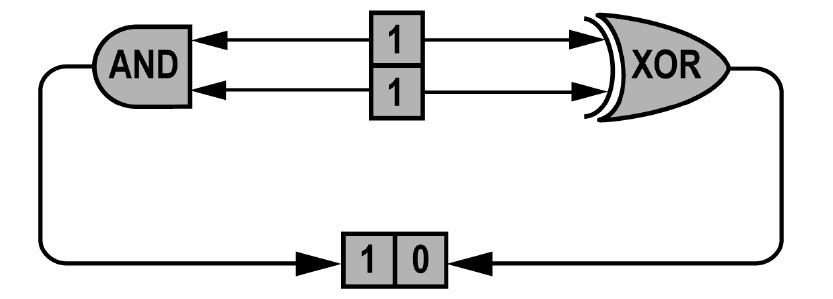

Consider a physical system P that works as follows: It emits 5-10 volts if it receives voltages greater than 5 from each of the two input channels, and 0-5 volts otherwise.5 Assigning ‘0’ to emission/reception of 0-5 volts and ‘1’ to emission/reception of 5-10 volts, the physical gate implements the logical

Let us suppose it turns out that the flip detectors of P are actually tri-stable. Imagine, for example, that P emits 5-10 volts if it receives voltages greater than 5 from each of the two input channels; 0-2.5 volts if it receives under 2.5 volts from each input channel; and 2.5-5 volts otherwise. Let us now assign the symbol ‘0’ to emission/reception of under 2.5 volts and ‘1’ to emission/reception of 2.5-10 volts. Under this assignment, P is now implementing the

The result is that the very same physical system P simultaneously implements two distinct logical gates. The main difference from Putnam’s example is that this implementation is constructed through the very same physical properties of P, namely, its voltages. Another difference is that in the Putnam case there is an arbitrary mapping between different physical states and the same states of the automaton. In our case, we couple the same physical properties of P to the same inputs/outputs of the gate. The different implementations result from coupling different voltages (across implementations, not within implementations) to the same inputs/outputs. But within implementations, relative to the initial assignment, each logical gate reflects the causal structure of P. In this respect, we have a fairly standard implementation of logical gates, which satisfies the conditions set by Chalmers on implementation.
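The dual reading of P can be checked mechanically. The following is a minimal sketch, not part of the original construction: the representative voltages (1.0, 3.5 and 7.5 volts), the function names, and the treatment of the band boundaries are all illustrative assumptions. The sketch models P's tri-stable behavior as described above, applies each symbol assignment to inputs and outputs, and verifies that the output symbol is fixed by the input symbols alone. Under these assumptions the first assignment yields the truth table of Boolean conjunction and the second that of Boolean disjunction.

```python
from itertools import product

# Representative voltages for the three bands (illustrative assumptions).
LOW, MID, HIGH = 1.0, 3.5, 7.5


def gate_p(v1: float, v2: float) -> float:
    """Tri-stable system P: map two input voltages to an output voltage."""
    if v1 > 5 and v2 > 5:
        return HIGH  # emits 5-10 volts
    if v1 < 2.5 and v2 < 2.5:
        return LOW   # emits 0-2.5 volts
    return MID       # emits 2.5-5 volts otherwise


def first_assignment(volts: float) -> int:
    """'0' for 0-5 volts, '1' for 5-10 volts."""
    return 1 if volts > 5 else 0


def second_assignment(volts: float) -> int:
    """'0' for under 2.5 volts, '1' for 2.5-10 volts."""
    return 1 if volts >= 2.5 else 0


def truth_table(assignment) -> dict:
    """Derive the logical gate that P implements under a symbol assignment.

    Raises ValueError if the same input symbols could yield different
    output symbols, i.e. if the assignment does not define a gate at all.
    """
    table = {}
    for v1, v2 in product((LOW, MID, HIGH), repeat=2):
        symbols_in = (assignment(v1), assignment(v2))
        symbol_out = assignment(gate_p(v1, v2))
        if table.setdefault(symbols_in, symbol_out) != symbol_out:
            raise ValueError(f"assignment is not well defined at {symbols_in}")
    return table


print("first assignment: ", truth_table(first_assignment))
print("second assignment:", truth_table(second_assignment))
```

The consistency check inside `truth_table` is the point of the sketch: each reading groups the physical voltages differently, yet within each reading the symbolic input-output behavior is a well-defined function of the symbols.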

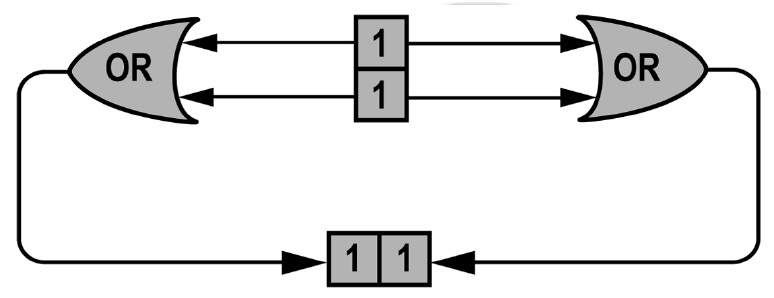

We could create other dual gates in a similar way. Consider a physical system Q that emits 5-10 volts if it receives over 5 volts from exactly one input channel and 0-5 volts otherwise. Under the assignment of ‘0’ to emission/reception of 0-5 volts and ‘1’ to emission/reception of 5-10 volts, Q implements an

Yet another example is of a physical system S. This system emits 5-10 volts if it receives over 5 volts from at least one input channel and 0-5 volts otherwise. Under the assignment of ‘0’ to emission/reception of 0-5 volts and ‘1’ to emission/reception of 5-10 volts, S implements an

I do not claim that these physical systems implement every logical gate. They certainly do not implement a complex CSA. The point I want to emphasize is quite different. You can use these kinds of

Note that I do not claim that this ability is shared by every physical system. We can assume that physical systems often implement only one automaton of the same degree. It might also be the case that, technologically speaking, it will be ineffective and very costly to use these tri-stable gates (though I can certainly see some technological advantages). The point is philosophical: If implementing some CSA suffices for the possession of a mind, then I can construct a physical system that simultaneously implements this CSA

One may point out that these physical systems always implement a deeper, “more complex,” automaton that entails the simultaneous implementation of the “less complex” automata. Thus the system P in fact implements a tri-value logical gate that entails both the

2 See also Chrisley (1994) and Copeland (1996).

3 The original example is presented in an earlier paper (Shagrir 2001).

4 See Minsky (1967) who invokes both descriptions (as for the second description, he presents these and other gates as McCulloch and Pitts “cells”) and demonstrates the equivalence relations between them (the proof is on pp. 55-58).

5 We can assume for purposes of stability that the reception/emission is significantly of less/more than 5 volts.

6 See also Sprevak (2010), who presents this result more elegantly without invoking tri-stable flip-detectors.

7 Shagrir (2012).

Chalmers does not explicate the sense in which computational structure suffices for cognition, e.g., identity, entailment, emergence and so on. It seems that he is deliberately neutral about this question. Keeping this metaphysical neutrality, we can express the sufficiency claim in terms of supervenience: Mind supervenes on computational structure. Chalmers points out that some mental features, e.g., knowledge, might not supervene on (internal) computational structure, as they depend on extrinsic, perhaps non-computational, properties.8 But he suggests that cognitive (“psychological”) and phenomenal properties supervene on computational properties. Cognitive properties, according to Chalmers,

It should be noted that supporters of CST might disagree with Chalmers about the details. One could hold the view according to which a certain computational structure suffices for having a mind, but this computational structure does not fix all mental properties. Thus one might hold that having a certain computational structure ensures that one is thinking, but this structure does not fix the psychological content of one’s thoughts. One could also hold the view that computational structure does not fix one’s phenomenological properties. Thus one could argue that CST is consistent with the nomological possibility of spectrally inverted twins who share the same computational structure. For the purposes of this paper, however, I identify CST with what Chalmers has said about the relationship between computational structure and mentality.

Chalmers provides a justification for CST. As I understand him, the justification is an argument that consists of three premises:

1. Cognitive capacity (mind) and (most of) its properties supervene on the causal structure of the (implementing) system, e.g., the brain.
2. The pertinent causal structure consists in causal topology or, respectively, organizational invariant properties.
3. The causal topology of a system is fixed by some computational structure.

Hence cognitive capacity (mind) is fixed by an implementation of some computational structure; presumably, some complex CSA.

The first premise is very reasonable: Many (perhaps all) capacities, cognitive or not, are grounded in causal structure. This, at any rate, is the claim of mechanistic philosophers of science.9 The third premise makes sense too, at least after reading Chalmers on it (section 3.3). The second premise is the controversial one. In the non-cognitive cases, the causal structure that grounds a certain capacity essentially includes non-organizational-invariant properties, i.e.,

At this point intuitions clash, especially when it comes to phenomenal properties. I share with Chalmers the intuition that (at least some) silicon-made brains and neural-made brains, which have the same computational structure, produce the same cognitive properties and even the same experience, say the sensation of red. But this intuition,

The upshot is that the debate about the validity of CST is far from settled. My aim in the next part is to provide yet another, but very different, argument against it.

8 There are those, however, who argue that the pertinent computational structure extends all the way to the environment (Wilson 1994).

9 See, for example, Bechtel and Richardson (1993/2010), Bogen and Woodward (1988), and Craver (2007).

The challenge to CST rests on the nomological possibility of a physical system that simultaneously implements two different minds. By “different minds” I mean two entire minds; these can be two minds that are of different types, or two different instances of the same mind.

Let me start by explicating some of the assumptions underlying CST. CST, as we have seen, is the view that computational structure suffices for possessing a mind (at least in the sense that a mind nomologically supervenes on computational structure). Then two creatures that implement the same structure cannot differ in cognition, namely one having a mind and the other not. The friends of CST assume, more or less explicitly, that having a mind requires the implementation of a “very complex” CSA, perhaps an infinite machine. This automaton might be equipped with more features: Ability to solve (or approximate a solution for) certain problems in polynomial-bounded time; some universality features; ability to support compositionality and productivity; and so on. Let us call an automaton that underlies a mind a COG-CSA.

Another assumption is that there is more than one type of COG-CSA. We can assume that all human minds supervene on the very same COG-CSA (or closely related automata that under further abstraction can be seen as the same computational structure). We can also assume that monkeys, dolphins and other animals implement automata that in one way or another are derivatives of COG-CSA. But I guess that it would be overly anthropocentric to claim that every (possible) natural intelligent creature, perhaps living on very remote planets, every artificial (say, silicon-made) intelligent creature, and every intelligent divine creature, if there are any, implements the very same COG-CSA. I take it that CST is not committed to this thesis.

Yet another assumption concerns cognitive states, events, processes and the like, those that supervene on computational structure. The assumption is that every difference in cognitive states depends on a difference in the total states of the (implemented) COG-CSA(s), be it the total states of different COG-CSAs, or the total states of the same COG-CSA. Thus take phenomenal properties. If you experience the ball in front of you as wholly blue and I experience the ball in front of me as wholly red, then we must be in different total states of COG-CSA (whether the same or different COG-CSA). The same goes for me having different phenomenal experiences at different times. The difference in my phenomenal experiences depends on being in a different total state of (the implemented) COG-CSA.

The last assumption is that

The next step in the argument is the claim that CST is consistent with the nomological possibility of a physical system that simultaneously implements two COG-CSAs. We can assume that the two automata are of the same degree of complexity (if not, we use the number of gates, memory, etc., required for the more complex automaton). I do not claim that any arbitrary system can do this. I also do not claim that any “complex” CSA suffices for a mind. The claim is that

Now, assuming that CST is true, we can use these tri-stable gates to build a physical system BRAIN that implements a COG-CSA, one that is sufficient for mind. This, of course, might be hard in practice, but it seems that it could be done “in principle,” in the sense of nomological possibility. BRAIN simultaneously implements another complex CSA that is of the same complexity as the COG-CSA. It consists of tri-stable gates that implement two different Boolean functions, or even different runs of the same Boolean function (like the system S described above). In fact, it seems that it is nomologically possible to simultaneously implement another

Let us see where we stand. I have argued that if Chalmers’s theory of implementation is correct (as I think it is), then it is nomologically possible to simultaneously implement two different COG-CSAs. Thus, if CST is true (a

As I see it, the friends of CST have two routes here. One is to show that a more constrained notion of implementation is not consistent with these scenarios. I discuss this route in more detail elsewhere (Shagrir 2012), but will say something about it at the end. Another is to embrace the conclusion of a physical system simultaneously possessing two entire minds. Chalmers seems to take this route when saying that “there may even be instances in which a single system implements two independent automata, both of which fall into the class sufficient for the embodiment of a mind. A sufficiently powerful future computer running two complex AI programs simultaneously might do this, for example” (1996: 332). He sees this as no threat to CST. I agree that the multiple-minds scenarios do not logically entail the falsity of CST. But I do think that they make CST less plausible. I will give three reasons for this.

The deluded mind – Two minds often produce different motor commands, e.g., to raise the arm vs. not to raise the arm. But the implementing BRAIN always produces the same physical output (say, proximal motor signals). This, by itself, is no contradiction. We can assume that one mind dictates raising the arm (the output command ‘1’), translated to a 2.5-5 volt command to the motor organ. The other mind dictates not to raise the arm (the output command ‘0’), translated to the same 2.5-5 volt command to the motor organ. The problem is that the physical command results in one of the two; let us say that the result is no-arm-movement. Let us also assume that the same goes for the sensory apparatus, so that the first mind experiences a movement (input ‘1’) though there is no movement. This mind is a deluded, brain-in-a-vat-like, entity. It decides to move the arm and even sees it moving, though in fact no movement has occurred. This mind is just spinning in its own mental world. It supervenes on a brain that functions in accordance with the mental states of the other mind.
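The conflict between the two readings of a single motor signal can be made concrete. The sketch below is an illustration only: the representative voltage of 3.5 volts and all names are assumptions, and the two symbol assignments are the ones introduced earlier for the tri-stable system P. It shows that one and the same 2.5-5 volt signal is read as the command ‘0’ under the first assignment and as the command ‘1’ under the second, whereas a signal in the 5-10 volt band is read alike under both.

```python
# Output commands of the two minds, as described in the text.
COMMANDS = {1: "raise the arm", 0: "do not raise the arm"}

# Assumed representative voltages (illustrative, not from the text).
MID_BAND_SIGNAL = 3.5   # somewhere in the 2.5-5 volt band
HIGH_BAND_SIGNAL = 7.5  # somewhere in the 5-10 volt band


def read_first_assignment(volts: float) -> int:
    """'0' for 0-5 volts, '1' for 5-10 volts."""
    return 1 if volts > 5 else 0


def read_second_assignment(volts: float) -> int:
    """'0' for under 2.5 volts, '1' for 2.5-10 volts."""
    return 1 if volts >= 2.5 else 0


def interpret(volts: float) -> dict:
    """Return the command each assignment reads off the same physical signal."""
    return {
        "first assignment": COMMANDS[read_first_assignment(volts)],
        "second assignment": COMMANDS[read_second_assignment(volts)],
    }


print(interpret(MID_BAND_SIGNAL))   # the two readings disagree
print(interpret(HIGH_BAND_SIGNAL))  # the two readings agree
```

Since the motor organ responds to the voltage and not to either reading, only one of the two commands can match what the arm actually does.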

Let us compare these simultaneous implementation cases to another one. ROBOT is a very sophisticated

The extent of delusion depends upon the pair of minds that can be (if at all) simultaneously implemented. Not every pair involves the same extent of delusion. There might be cases in which the two minds somehow switch roles or can somehow co-exist, more meaningfully, in the same brain. Two minds, for example, can differ in their phenomenal experience (say, of colors) without this resulting in any behavioral (physical) difference.12 There are also the cases of split-brain patients, in whom the corpus callosum connecting the brain’s two hemispheres is severed.13 These patients are capable of performing different mental functions in each hemisphere; for example, each hemisphere maintains an independent focus of attention.14 It sometimes even appears as if each hemisphere contains a separate sphere of consciousness in the sense that one is not aware of the other.15 However, it is also worthwhile to note that the performance of cognitive functions in split-brain patients is not entirely normal. Moreover, the claim that we have two different entire minds has been challenged too: “Of the dozens of instances recorded over the years, none allowed for a clear-cut claim that each hemisphere has a full sense of self” (Gazzaniga 2005: 657). Our construction suggests that we have two entire minds implemented in the very same physical properties, and connected to the very same sensory and motor organs.

The supervenient mind – Strictly speaking, the scenarios depicted above are consistent with

The foundations of mind – Let us assume that further scientific investigation shows that our brains have the ability to simultaneously implement complex automata. The action-potential function might be only bi-stable. The current wisdom is, however, that our cognitive abilities crucially depend on the spiking of the neurons; so perhaps we can implement the CSA in the number of spikes, which are more amenable to multiple implementations. My hunch is that theoreticians will readily admit that our brains (like ROBOT) might simultaneously implement other automata, and that these automata might even be formal, computational, descriptions of (other) minds. Nevertheless, they will be much more hesitant (perhaps for the reasons raised in the supervenient-mind paragraph) to admit that our brain possesses more than one mind. This hesitation is no indication that the possession of a mind is more than the implementation of an automaton. But it is an indication that theoreticians do not take CST as the correct understanding (in the sense of conceptual analysis) of the claim that cognition is a sort of computation. If they did, they would have to insist that brains that simultaneously implement these complex automata simultaneously possess different minds. This is bad news for CST. CST gains much of its support from the claim that it provides adequate foundations for the successful computational approaches in cognitive and brain sciences. If it does not provide the required foundations, that is, if CST and the computational approaches part ways, there are even fewer good reasons to believe in it.

As said, none of these are meant to constitute a knock-down argument against CST. They do not. But I believe that these considerations make CST less plausible.

Now, another route to undermine the brain-possessing-two-minds argument is to put more constraints on the notion of implementation.17 I discuss this issue in more detail elsewhere (Shagrir 2012). Here I want to refer to Chalmers’s statement about constraining the inputs and outputs: “It is generally useful to put restrictions on the way that inputs and outputs to the system map onto inputs and outputs of the FSA” (forthcoming).18 The real question here is how to specify the system’s inputs and outputs. Presumably, an intentional specification is out of the question.19 Another option is a physical (including chemical and biological) specification. We can tie the implementation to specific proximal output, say the current of 2.5-10 volts, or to something more distal, say, physical motor movement. But this move has its problems too (for CST). For one thing, this proposal runs against the idea that computations are medium-independent entities; for they now depend on specific

The last option, which is more in the spirit of Chalmers, is to characterize the more distal inputs and outputs in syntactic terms. Thus if (say) the output of 2.5-10 volts is plugged to a movement of the arm, and the output of 0-2.5 volts to no-movement, we can say that one automaton is implemented and not the other. My reply is that this option helps to exclude the implementations in the case discussed above, but is of no help in the general case. For there is yet another system in which the output of 2.5-5 volts is plugged to (physical) light movement, and the output of 0-2.5 volts is plugged to no movement. In this case we can individuate movement (which is just ‘1’) either as high movement or as light-movement-plus-high-movement. In short, there is no principled difference between the syntactic typing of internal and external events. It might help in certain cases, but it does not change the end result about simultaneous implementation. This does not exhaust all the options. But it indicates that finding the adequate constraints on inputs and outputs is no easy task.

10 Chalmers refers the reader to the arguments by Armstrong (1968) and Lewis (1972). For criticism see Levin (2009, section 5).

11 It would be interesting to see how these cases fare with Chalmers’s argument about the Matrix hypothesis (Chalmers 2005).

12 This is not exactly the inverted qualia case. The phenomenal properties are different, but the implemented COG-CSAs are different too (though the inputs/outputs are the same).

13 For review see, e.g., Gazzaniga (2005).

14 Zaidel (1995).

15 Gazzaniga (1972).

16 Supervenience does not automatically ensure dependence (see, e.g., Kim 1990, Bennett 2004 and Shagrir 2002).

17 Scheutz (2001) offers a thorough criticism of Chalmers’s theory of implementation. His main line is that the more important constraint on implementation, other than being counterfactual supportive, is the grouping of physical states (see also Melnyk 1996; Godfrey-Smith 2009). I believe that my result about simultaneous implementation is compatible with his revised definition of implementation (Shagrir 2012). I agree with Scheutz’s point about grouping, but I suspect that one cannot rule out the cases presented here while keeping a satisfactory characterization of inputs and outputs.

18 Piccinini argues that we need to take into account the functional task in which computation is embedded, e.g., on “which external events cause certain internal events” (2008: 220). Piccinini ties these functional properties to a certain kind of mechanistic explanation. Godfrey-Smith (2009) distinguishes between a broad and a narrow construal of inputs and outputs.

19 Chalmers writes: “It will be noted that nothing in my account of computation and implementation invokes any semantic considerations, such as the representational content of internal states. This is precisely as it should be: computations are specified syntactically, not semantically” (forthcoming).

I have advanced an argument against CST that rests on the idea that a physical system can simultaneously implement a variety of abstract automata. I have argued that if CST is correct then there can be (in the sense of nomological possibility) physical systems that simultaneously implement more than one COG-CSA (or two instances of the same COG-CSA). I have no proof of this result; nor do I think that the result constitutes a knock-down argument against CST. But I have argued that the result makes CST less plausible. It brings with it a host of epistemological problems; it threatens the idea that mental-on-physical supervenience is a dependence relation; and it challenges the assumption that CST provides conceptual foundations for the computational science of the mind.

20 There might be, however, other physical properties that the robot metal arm and the biological human arm share; see Block (1978) for further discussion.