Most electronic devices today are controlled and interacted with remotely. Hand gestures are a useful means of interaction between humans and electronic devices. They can be used with smartphones, computers, robots, smart rooms, and infotainment systems.

Several studies have recognized hand gestures with different sensors, such as the Kinect sensor [1] and the Leap Motion sensor [2], [3], or with methods that attach devices to the human body. These systems have several drawbacks, including high computational cost and a limited set of detectable hand gestures.

Similar research work in [3] uses only two channels and three features to recognize hand motions and gestures. This method cannot detect many hand gestures.

We propose a novel hand gesture recognition system using an infrared proximity sensor and fuzzy logic based classification. The proposed method reduces the drawbacks of existing methods, specifically power consumption, size, and computational cost, and it can recognize more hand gestures in real time.

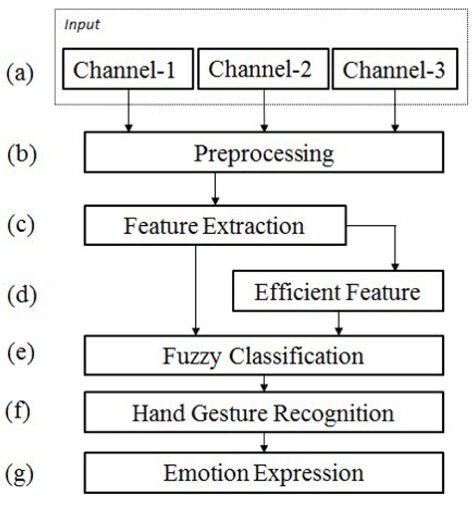

In this paper, we have proposed a new method for robust hand gesture recognition using a fuzzy logic based classification with a new type of sensor array. The overall workflow of the proposed method is depicted in Figure 1. Each step will be explained in detail in the following sections. Our first contribution is a sensor model for recording hand gesture information as an input through three channels.

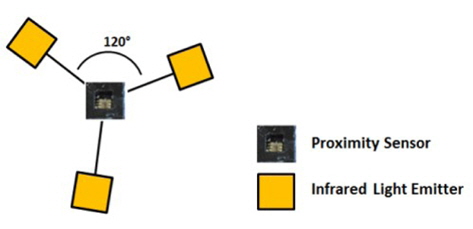

The raw input signal is recorded through a three-channel sensor. The sensor model consists of three infrared light emitters and one proximity sensor. The sensor has three individual receiver channels, which are used to record the input signal.

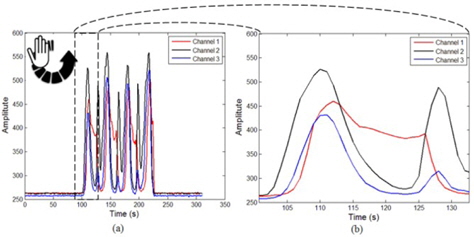

The main structure of the emitters and sensor is shown in Figure 2. Each emitter is placed 2 cm from the sensor, and the emitters are 120° apart from one another. A sample input signal associated with a hand gesture (a round swipe, which is a circular movement of the hand) is plotted in Figure 3. In this case, the hand moves over the sensor several times. Figure 3(a) shows the pattern for that hand gesture, and Figure 3(b) shows a magnified view.

When a hand moves over the sensor and emitters, a certain amount of light is reflected from the surface of the hand and is relayed to the sensor with small delays for each channel.

In the next step, we record the hand gesture signal detected by the sensor (the three channels are recorded separately) and then apply the pre-processing method (Figure 1(b)), in which the signal is smoothed and the region of interest is clipped in preparation for the next step.
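The pre-processing step can be sketched as follows. This is a minimal illustration, not the authors' implementation: the moving-average filter, the activity threshold, the margin, and all function names are assumptions, since the paper states only that smoothing and region-of-interest clipping are performed.

```python
def moving_average(signal, window=5):
    """Smooth a 1-D signal with a centred moving average.

    Near the edges the window is shortened so the output has the same length.
    """
    half = window // 2
    smoothed = []
    for i in range(len(signal)):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        smoothed.append(sum(signal[lo:hi]) / (hi - lo))
    return smoothed


def clip_region_of_interest(channels, threshold, margin=2):
    """Return (start, end) indices spanning all samples where any channel
    exceeds the threshold, padded by `margin` samples; None if no activity."""
    n = len(channels[0])
    active = [i for i in range(n) if any(ch[i] > threshold for ch in channels)]
    if not active:
        return None
    start = max(0, active[0] - margin)
    end = min(n, active[-1] + 1 + margin)
    return start, end


def preprocess(channels, window=5, threshold=10.0, margin=2):
    """Smooth each channel, then clip all channels to a common region of interest."""
    smoothed = [moving_average(ch, window) for ch in channels]
    roi = clip_region_of_interest(smoothed, threshold, margin)
    if roi is None:
        return [[] for _ in smoothed]
    start, end = roi
    return [ch[start:end] for ch in smoothed]
```

Clipping all three channels to the same index range keeps the samples aligned in time, which matters for any later feature that compares delays between channels.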

The most important aspect of classifying hand gestures is extracting manifold features (Figure 1(c)) and efficient features (Figure 1(d)). Hand gestures vary even when the same motion is attempted, and each has its own special features. We extracted manifold features from the hand gesture signals in order to classify each motion separately. There are hundreds of hand motions that can be recognized by our sensor. We initially tested about 30 types of hand motions and identified their details and features.

Variation in hand gestures can increase depending on their features. For example, there are only two main gestures: hit and swipe.

However, these can be divided into many gestures depending on their main features, such as iteration, direction, time, and speed.

The main features from the input signal were extracted by performing extensive experimentation and analysis based on hand gesture datasets that were collected.

Efficient features were also extracted from the main features. The efficient features are listed below; they are used with and without the main features to classify each hand gesture.

Efficient features: Rising edge (time and angle), falling edge (time and angle), slope, amplitude, variance, local minimum, local maximum, time delay of amplitude, time delay of rising edge, angle of each sample, angle sequence, angle variance in histogram and speed.
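A few of the listed features can be computed from a clipped channel as in the sketch below. It is illustrative only: the threshold-crossing definition of the rising and falling edges, the `sample_period` parameter, and the function names are assumptions, and the paper does not give the exact formulas it uses.

```python
from statistics import pvariance


def rising_edge_index(signal, threshold):
    """Index of the first sample at or above the threshold, or None."""
    for i, value in enumerate(signal):
        if value >= threshold:
            return i
    return None


def falling_edge_index(signal, threshold):
    """Index of the last sample at or above the threshold, or None."""
    for i in range(len(signal) - 1, -1, -1):
        if signal[i] >= threshold:
            return i
    return None


def channel_features(signal, threshold, sample_period=1.0):
    """Extract a few per-channel features from one non-empty signal."""
    rise = rising_edge_index(signal, threshold)
    fall = falling_edge_index(signal, threshold)
    peak = max(range(len(signal)), key=lambda i: signal[i])
    # Slope of the rising flank, from the threshold crossing to the peak.
    if rise is None or peak <= rise:
        rise_slope = 0.0
    else:
        rise_slope = (signal[peak] - signal[rise]) / ((peak - rise) * sample_period)
    return {
        "rise_time": None if rise is None else rise * sample_period,
        "fall_time": None if fall is None else fall * sample_period,
        "amplitude": signal[peak],
        "variance": pvariance(signal),
        "rise_slope": rise_slope,
    }


def rising_edge_delays(channels, threshold, sample_period=1.0):
    """Delay of each channel's rising edge relative to the earliest channel."""
    edges = [rising_edge_index(ch, threshold) for ch in channels]
    seen = [e for e in edges if e is not None]
    if not seen:
        return [None] * len(channels)
    first = min(seen)
    return [None if e is None else (e - first) * sample_period for e in edges]
```

The order of the per-channel delays gives a rough indication of the direction in which the hand crossed the sensor, without relying on cross-correlation.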

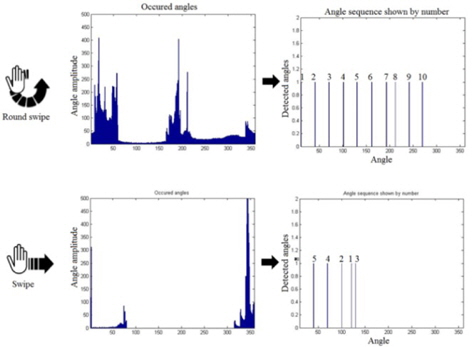

Some features in turn generate other features that together recognize new gestures. One or two features are enough to differentiate between two gestures, while more features are needed to compare a gesture against many others. Features have specific parameters that are needed to calculate the differences between two motions [4]. A round swipe hand gesture example is shown in Figure 4 (the first row of the figure). When this gesture is executed, its entering and exiting angles occur sequentially, as shown in the angle histogram, and the variance of the histogram is wide. In the histogram, the estimated angles are naturally ordered for the round swipe motion. In contrast, for a simple swipe motion (see the second row of Figure 4), the execution angles are non-sequential, and the variance of the histogram is small.
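The angle histogram idea can be illustrated with the sketch below. Several parts are assumptions rather than the paper's method: the emitters are taken to sit at 0°, 120°, and 240°, the per-sample angle is estimated as the direction of the amplitude-weighted sum of the emitter directions, and the spread of the histogram is measured as the fraction of occupied bins, used here as a simple stand-in for the histogram variance mentioned above.

```python
import math

# Assumed emitter directions around the sensor, in degrees.
EMITTER_ANGLES_DEG = (0.0, 120.0, 240.0)


def sample_angle(c0, c1, c2):
    """Estimate the reflection direction in degrees [0, 360) for one sample.

    Returns None when the three channel amplitudes cancel out (no direction).
    """
    amplitudes = (c0, c1, c2)
    x = sum(a * math.cos(math.radians(t)) for a, t in zip(amplitudes, EMITTER_ANGLES_DEG))
    y = sum(a * math.sin(math.radians(t)) for a, t in zip(amplitudes, EMITTER_ANGLES_DEG))
    if math.hypot(x, y) < 1e-9:
        return None
    return math.degrees(math.atan2(y, x)) % 360.0


def estimate_angles(samples):
    """Angle sequence for a list of (c0, c1, c2) samples, skipping undefined ones."""
    angles = (sample_angle(*s) for s in samples)
    return [a for a in angles if a is not None]


def angle_histogram(angles, bins=12):
    """Count angles into equal-width bins covering [0, 360)."""
    width = 360.0 / bins
    hist = [0] * bins
    for a in angles:
        index = min(int(a // width), bins - 1)  # guard against a == 360.0
        hist[index] += 1
    return hist


def occupied_fraction(hist):
    """Fraction of histogram bins that contain at least one angle."""
    return sum(1 for count in hist if count > 0) / len(hist)
```

Under these assumptions, a round swipe that sweeps through all directions fills most bins, while a simple swipe fills only the few bins around its entering and exiting directions.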

Hand gesture signals are not like sound signals, so we cannot apply cross-correlation-based methods to estimate time delays. Signals for the same hand gesture can differ because of hand shape and size, or the way the motion is executed.

Similar feature patterns in the input signal make it difficult to classify hand gestures. To solve this problem, we applied fuzzy logic to efficiently classify each feature pattern.

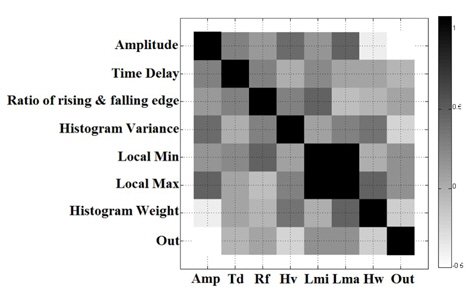

The similarity between features is represented by the independence bar shown in Figure 5. A darker color indicates higher correlation, whereas a brighter color indicates lower correlation between features.

An adaptive neuro-fuzzy inference system (ANFIS) was used to generate fuzzy rules automatically by learning from the stipulated input and output data. A large amount of input and output data was selected as training data. In our case, ANFIS is used for those hand gestures that are distinguishable with a small number of feature patterns.

Fuzzy rules were defined based on the feature patterns [5] of the input. ANFIS is a combination of fuzzy logic and neural networks that was first introduced in [6].

Fuzzy logic uses linguistic variables [7] to formalize the precise information containing unique properties. It also uses fuzzy sets and fuzzy membership functions based on retrieved knowledge with fuzzy IF-THEN rules. Fuzzy membership functions and fuzzy sets are defined based on the experiments and analysis of input signals. One of the fuzzy rules that we defined is described below as an example.

This rule is defined for recognizing a round swipe and the corresponding raw data are depicted in Figure 3(a) and (b). Similarly, other IF-THEN rules were derived in this manner.
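As a generic illustration of how IF-THEN rules of this kind can be evaluated, the sketch below fires two hypothetical rules and picks the gesture with the highest firing strength. It does not reproduce the authors' rules: the input features `angle_spread` (a value in [0, 1]) and `duration_s`, the linguistic terms, the ramp-shaped membership functions, and all breakpoints are assumptions chosen only for demonstration.

```python
def ramp_up(x, low, high):
    """Membership that is 0 below `low`, 1 above `high`, linear in between."""
    if x <= low:
        return 0.0
    if x >= high:
        return 1.0
    return (x - low) / (high - low)


def ramp_down(x, low, high):
    """Membership that is 1 below `low`, 0 above `high`, linear in between."""
    return 1.0 - ramp_up(x, low, high)


def rule_strengths(angle_spread, duration_s):
    """Firing strength of each hypothetical rule, using min as the fuzzy AND."""
    wide = ramp_up(angle_spread, 0.4, 0.8)      # "angle spread is WIDE"
    narrow = ramp_down(angle_spread, 0.2, 0.6)  # "angle spread is NARROW"
    long_ = ramp_up(duration_s, 0.5, 1.0)       # "duration is LONG"
    short = ramp_down(duration_s, 0.3, 0.8)     # "duration is SHORT"
    return {
        # IF spread is WIDE AND duration is LONG THEN gesture is round swipe
        "round swipe": min(wide, long_),
        # IF spread is NARROW AND duration is SHORT THEN gesture is swipe
        "swipe": min(narrow, short),
    }


def classify(angle_spread, duration_s):
    """Return the gesture whose rule fires most strongly, or 'unknown'."""
    strengths = rule_strengths(angle_spread, duration_s)
    label = max(strengths, key=strengths.get)
    return label if strengths[label] > 0.0 else "unknown"
```

Because each rule yields a degree of match rather than a hard yes or no, two gestures with overlapping feature patterns can still be separated by comparing firing strengths.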

More features are needed as the number of motion types increases. After recognizing the hand gestures, we were able to find the relationship between a hand gesture and the related emotion expression. Robots can express human emotions by using these hand motions [8].

2.4 Emotion Expression with Hand Gesture Recognition

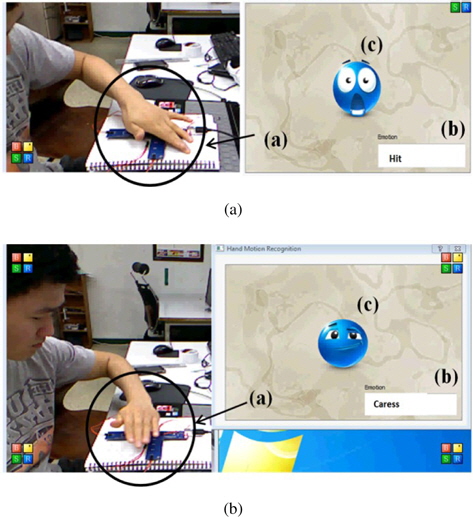

We can easily understand the basic relationship between hand gestures and emotion expression as illustrated in Figure 6. Humans can feel things that are in physical contact with their face, body, or skin. The proposed system is able to recognize hand gestures efficiently, which enables the association of emotion expression and hand gesture recognition. The sensor shown in Figure 2 is attached to the body of a robot, and subsequently, the robot can respond with appropriate feelings or emotions.

The robot senses hits, caresses, flicks, and other hand motions through its skin and responds with human-like emotions. For example, if a human hits the robot by touching its body, the robot feels angry and expresses its feelings by showing a facial or other expression, as shown in Figure 6.

3. Simulation and Experimental Results

The overall process described in the preceding section was first implemented in MATLAB and then ported to C++ (with Qt).

We collected hand gesture datasets from over 200 people and tested our proposed method in real time. Some hand gesture signals are plotted in Figure 7 using MATLAB, showing similar and different patterns of two individual hand gestures.

When the same gesture is executed in different ways (for example, in the unique manner each person uses for the same gesture), the corresponding signals are not the same. We collected and analyzed extensive hand motion data, extracted different features, and can show how people perform the same gesture in different ways.

The input signal of a hand gesture depends on human nature or habit, hand shape, hand size, motion execution and other factors, and consequently the signals can be different for the same hand gesture. Each gesture shows specific patterns and shapes as illustrated in Figure 7, although some are very similar. The difficulty in classifying hand gestures is due to the similar feature patterns and nature of the input signal. The independence of each feature is graphically illustrated in Figure 5. As explained previously, fuzzy logic was the method used to overcome this difficulty. We tested our algorithm on large datasets to analyze how people perform different gestures and noted that some people tap on the sensor in a single direction while others tap in different directions. The hand gesture data depicted in Figure 7 is unique to one person. When other people execute the same gestures, the associated signal is different.

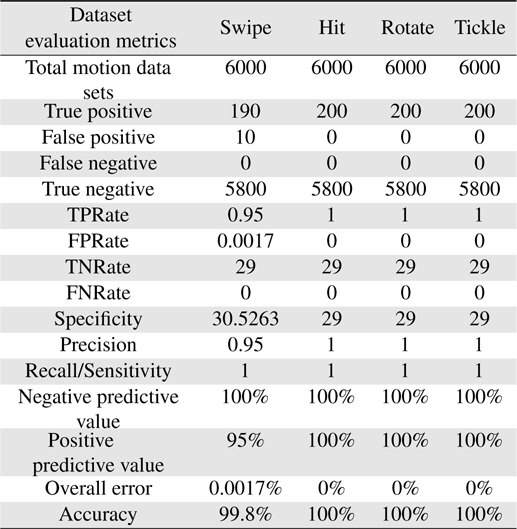

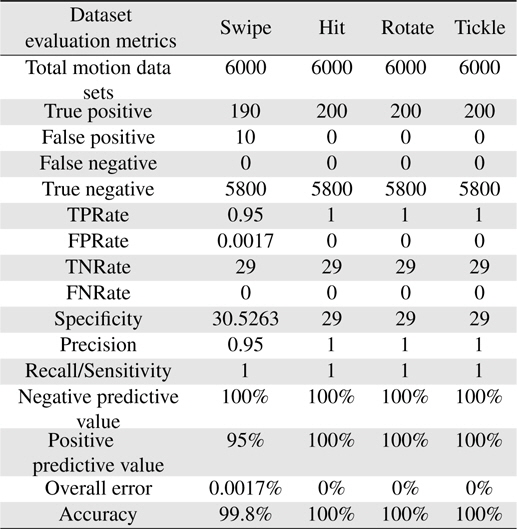

Many features are used in our method; some are useful for recognition and others are less so. We used evaluation metrics to validate the performance of our proposed method, and the recognition rates for some hand gestures are shown in Table 1. According to the evaluation metrics, our method achieves a high hand gesture recognition rate. Some gestures are recognized at a rate of almost 100%.
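The specific evaluation metrics are not named in the text. As an illustration only, the sketch below computes an overall recognition rate together with per-gesture precision and recall from lists of true and predicted labels; the choice of these metrics and the function names are assumptions.

```python
from collections import Counter


def recognition_rate(true_labels, predicted_labels):
    """Fraction of gestures whose predicted label matches the true label."""
    if not true_labels:
        return 0.0
    correct = sum(1 for t, p in zip(true_labels, predicted_labels) if t == p)
    return correct / len(true_labels)


def per_gesture_metrics(true_labels, predicted_labels):
    """Precision and recall for every gesture label that appears."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(true_labels, predicted_labels):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # p was predicted but is wrong
            fn[t] += 1  # t was missed
    metrics = {}
    for gesture in sorted(set(true_labels) | set(predicted_labels)):
        predicted = tp[gesture] + fp[gesture]
        actual = tp[gesture] + fn[gesture]
        metrics[gesture] = {
            "precision": tp[gesture] / predicted if predicted else 0.0,
            "recall": tp[gesture] / actual if actual else 0.0,
        }
    return metrics
```

Reporting precision and recall per gesture, rather than a single overall rate, shows which gestures are confused with one another.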

[Table 1.] Hand gesture recognition with different evaluation metrics

One of our findings is that less useful features can create new useful features, which we call efficient features. This process is illustrated graphically in Figure 4. A significant problem was that the features of hand gesture signals vary from person to person. Other researchers have used two or three features in a pattern recognition system, and advanced projects such as human blood cell pattern recognition systems use thousands of features.

We have combined emotion expression with hand gesture recognition, and part of our simulation and experimental results is depicted in Figure 8.

A user executes a hit motion, and our method recognizes the motion as a “Hit” and a corresponding emotion expression is shown, as on the right side of Figure 8(a) (see the blue emoticon). When the user executes a swipe motion over the sensor, our proposed method recognizes it as a “Caress” with the corresponding emotion expressed on the blue cartoon’s face (the feeling/emotion of being cared for, see Figure 8(b)).

In this paper, we introduced a hand gesture recognition method using fuzzy logic-based classification with a new type of sensor array. The sensor is unaffected by normal indoor environment lights.

We extracted important features from recorded signals and also generated efficient features from that data. We applied fuzzy logic to similar features that were extracted from different hand gestures.

As more gestures are added, the number of input feature patterns increases, as does the number of fuzzy rules. Evaluation metrics were used to validate the recognition rate of our proposed algorithm.

We combined emotion expression with hand gesture recognition. Our proposed method has been tested in real time and with more hand gestures than other existing methods. In future work, we aim to propose a more intelligent algorithm to recognize even more hand motions.