Research on geometric processing, three-dimensional (3D) animation, and 3D reconstruction has been growing fast due to rapid technological development. One consequence is an increase in the amount of data being created, especially 3D data. Because data is a form of intellectual property, its protection is of utmost importance, and watermarking is one way to protect it. The concept of a watermarking system is to embed watermark data into source data. Watermark data can be text, audio, an image, video, or a 3D file, and source data can also be in any of these formats.

Research in watermarking has been performed for many decades, and 3D watermarking in particular has developed rapidly over the past two decades. Kanai et al. [1] used multiresolution wavelet decomposition to perform digital watermarking of 3D polygons, proposing a lazy wavelet to carry out the decomposition. This method requires complex computation because the ratio of the wavelet coefficient vector and edges should match; if it does not match, the shape of the 3D polygon changes. Other research on watermarking of 3D triangular meshes includes watermarking of 3D irregular meshes based on wavelet multiresolution analysis [2], identification of a watermarking technique that can be performed on 3D triangular meshes [3], and blind watermarking using oblate spheroidal harmonics [4].

Neviere et al. proposed a new watermarking method for 3D triangular meshes that uses a constrained optimization framework [5]. This method also requires complex computation because it needs input that has been previously optimized, and it has not been applied to data from the Kinect sensor. In this study, we propose 3D triangular mesh watermarking based on the discrete wavelet transform (DWT) and the selection of one axis of the 3D coordinate system. Although the DWT has been used by many researchers for 3D triangular mesh watermarking, we take a different approach in order to use the DWT without complex computation. Our approach uses constant regularization and a correction term. We also apply this watermarking to 3D triangular meshes from the Kinect sensor, which can be created from depth data.

This research makes the following contributions:

1) providing a simple method for watermarking 3D triangular meshes, 2) introducing constant regularization and a correction term for 3D triangular meshes, and 3) applying watermarking to data generated from the Kinect sensor.

This paper is organized as follows. Section 1 introduces the watermarking of 3D triangular meshes. Section 2 presents the 3D triangular mesh data and the text data in detail. Section 3 describes the insertion and extraction processes. Section 4 introduces a correction term that corrects the watermarked 3D triangular mesh before the extraction process. Section 5 presents the experiments and results. Finally, Section 6 presents our conclusions.

Building a watermarking system requires two main types of data: source and watermark. We used depth data generated by the Kinect sensor and processed it into a 3D triangular mesh. We chose text as the watermark data because it is easy for the user: the user simply enters the text directly rather than providing an image, voice recording, or video that represents him or her.

We used the Kinect sensor for data acquisition. It produces good depth data when the distance between the object and the sensor is in the range 1.2–3.5 m [6]. To implement this watermarking system, we used a face as the object, following the previously mentioned procedures to obtain the data.

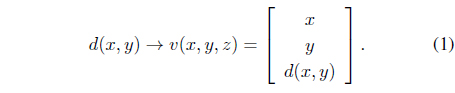

Assume we have coarse depth data that can be represented as a scalar function

By following [7], we can obtain a smooth 3D face point cloud. Because the vertices of the 3D face point cloud are not connected, the object cannot yet be realized as a surface; it can be realized once each vertex is connected to another by an edge. This connectivity is computed automatically by creating an edge between two vertices that influence each other. Finally, the object can be represented as

In this research, text data can be any mix of letters, numbers, and symbols, so it has a string data type. To use text data in the watermarking system, it must first be converted to binary data.
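The following is a minimal sketch of this text-to-binary conversion. It assumes each character is mapped to its decimal ASCII code and then to a fixed-width binary string; the 8-bit width and the function name `text_to_bits` are assumptions made for illustration, not details stated in the paper.

```python
def text_to_bits(text: str, width: int = 8) -> list[int]:
    """Convert text to a flat list of bits, most significant bit first.

    Each character is mapped to its decimal code (ASCII table) and then
    to a fixed-width binary string. The width of 8 is an assumption.
    """
    bits = []
    for ch in text:
        code = ord(ch)  # decimal code of the character
        if code >= 2 ** width:
            raise ValueError(f"character {ch!r} does not fit in {width} bits")
        bits.extend(int(b) for b in format(code, f"0{width}b"))
    return bits


if __name__ == "__main__":
    bits = text_to_bits("Fd19?!")
    print(len(bits))   # 6 characters x 8 bits = 48
    print(bits[:8])    # 'F' has code 70, i.e. 01000110
```

A fixed width keeps the bit stream easy to split back into characters at extraction time.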

Assume we have text data

3. 3D Triangular Mesh Watermarking

Given the object as source data and the text data as watermark data, the goal of 3D triangular mesh watermarking is to embed the watermark data into the source data. It is therefore important to adapt the watermark data to the characteristics of the source data.

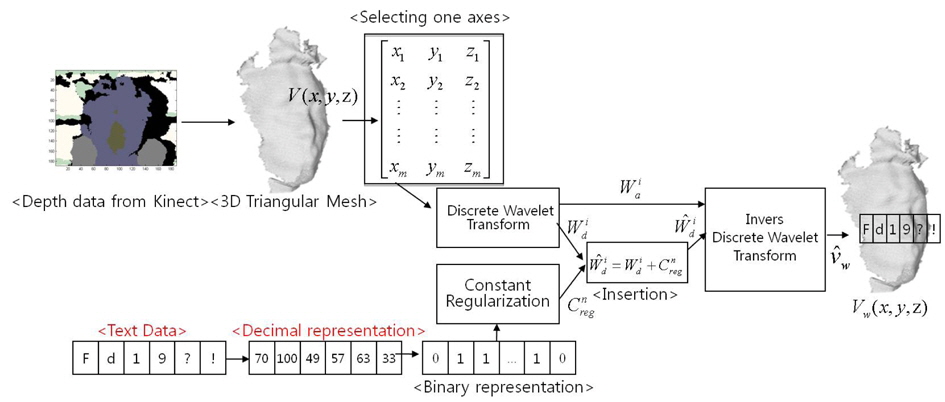

There are two main steps in the 3D triangular mesh watermarking procedure. The first is the insertion process, in which the text data is embedded into the object, as shown in Figure 1. The second is the extraction process, in which the text data is extracted from the watermarked object, as shown in Figure 2.

To simplify the explanation, recall that the object can be represented as

Let the column vector be the selected coordinate axis, with size 1 ×

A higher DWT decomposition level reduces the insertion space. For this reason, we used one-level DWT decomposition in our research. Before the text data is inserted into the object, two preliminary steps are required. The first step is that the size of

where

Because human eyes are sensitive to dents, it is important to maintain the shape of the watermarked object so that it looks similar to the original object. In addition, most of the information in the selected axis is contained in the approximation coefficients. For these reasons, we select the detail coefficients as the insertion area, since they contain no important information.
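To illustrate the one-level decomposition discussed above, the sketch below implements an orthonormal Haar DWT with NumPy and applies it to a smooth 1D signal standing in for one coordinate axis. The function names, the even-length requirement, and the synthetic signal are assumptions made for illustration; the paper's own implementation is not shown in the text.

```python
import numpy as np


def haar_dwt(signal):
    """One-level orthonormal Haar DWT of an even-length 1D signal."""
    s = np.asarray(signal, dtype=float)
    if s.size % 2:
        raise ValueError("signal length must be even")
    even, odd = s[0::2], s[1::2]
    approx = (even + odd) / np.sqrt(2.0)   # low-pass: overall shape
    detail = (even - odd) / np.sqrt(2.0)   # high-pass: fine variation
    return approx, detail


def haar_idwt(approx, detail):
    """Inverse of haar_dwt: interleave the reconstructed samples."""
    a = np.asarray(approx, dtype=float)
    d = np.asarray(detail, dtype=float)
    out = np.empty(2 * a.size)
    out[0::2] = (a + d) / np.sqrt(2.0)
    out[1::2] = (a - d) / np.sqrt(2.0)
    return out


if __name__ == "__main__":
    # Hypothetical smooth coordinate values for one axis.
    z = np.sin(np.linspace(0.0, 1.0, 16))
    approx, detail = haar_dwt(z)
    print(approx.size, detail.size)                    # each half the input length
    print(np.sum(approx ** 2) > np.sum(detail ** 2))   # energy sits in approx
    print(np.allclose(haar_idwt(approx, detail), z))   # perfect reconstruction
```

Each additional decomposition level would halve the number of detail coefficients again, which is why a single level leaves the most room for insertion.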

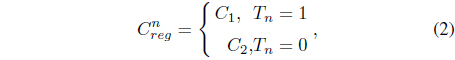

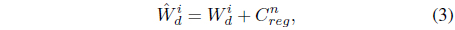

To perform the insertion process [9], we sum each detail coefficient with its corresponding constant regularization

where is the watermarked detail coefficient of DWT.
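A hedged sketch of the insertion step follows. It assumes that the constant regularization is a single scalar `alpha` multiplied by each watermark bit and added to the corresponding detail coefficient, and that reconstruction uses the inverse Haar DWT. The value of `alpha`, the function names, and the choice to use the first coefficients are hypothetical; the paper's exact equation did not survive in the text.

```python
import numpy as np


def haar_dwt(signal):
    """One-level orthonormal Haar DWT of an even-length 1D signal."""
    s = np.asarray(signal, dtype=float)
    if s.size % 2:
        raise ValueError("signal length must be even")
    even, odd = s[0::2], s[1::2]
    return (even + odd) / np.sqrt(2.0), (even - odd) / np.sqrt(2.0)


def haar_idwt(approx, detail):
    """Inverse one-level Haar DWT."""
    a = np.asarray(approx, dtype=float)
    d = np.asarray(detail, dtype=float)
    out = np.empty(2 * a.size)
    out[0::2] = (a + d) / np.sqrt(2.0)
    out[1::2] = (a - d) / np.sqrt(2.0)
    return out


def embed_bits(axis_values, bits, alpha=1e-3):
    """Add alpha * bit to the first len(bits) detail coefficients.

    alpha is a hypothetical constant; smaller values distort the
    shape less but leave a weaker watermark.
    """
    approx, detail = haar_dwt(axis_values)
    if len(bits) > detail.size:
        raise ValueError("not enough detail coefficients for the watermark")
    marked = detail.copy()
    marked[: len(bits)] += alpha * np.asarray(bits, dtype=float)
    # The approximation coefficients are left untouched.
    return haar_idwt(approx, marked)


if __name__ == "__main__":
    z = np.linspace(0.0, 1.0, 16)           # hypothetical axis values
    watermarked = embed_bits(z, [1, 0, 1, 1], alpha=0.01)
    print(np.max(np.abs(watermarked - z)))  # largest vertex displacement
```

Because only the detail coefficients change, the per-vertex displacement is bounded by `alpha / sqrt(2)` under these assumptions.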

After the insertion process finishes, the next step is reconstruction of the watermarked object. To perform this step, we apply the inverse DWT to the approximation coefficients and the watermarked detail coefficients. The result is then used in the following equation

where

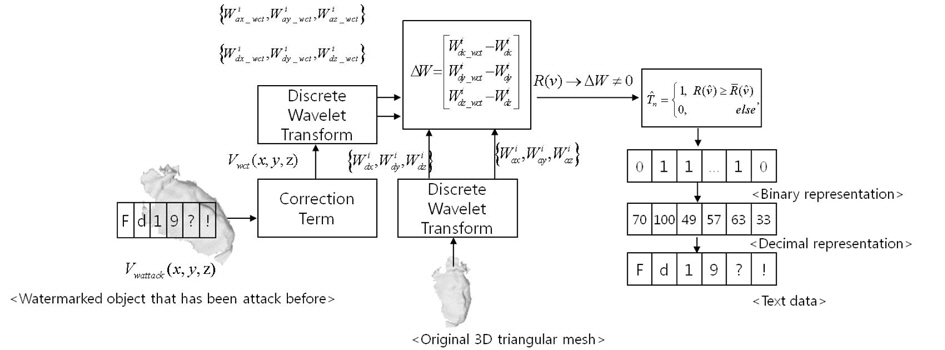

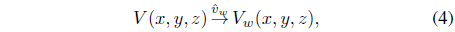

Assume we have a watermarked object that has been repaired using a correction term. The goal of the extraction process is to extract the text data from the watermarked object. Because our watermarking system is non-blind, we need the original object

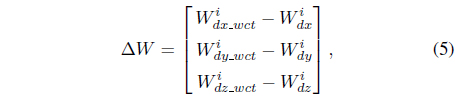

where is equal to ∆
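The non-blind extraction idea can be sketched as follows, under the assumption that each bit was embedded by adding a constant `alpha` times the bit to a detail coefficient of a one-level Haar DWT. The thresholding rule (`alpha / 2`), the function names, and the value of `alpha` are assumptions for illustration rather than the paper's stated procedure.

```python
import numpy as np


def haar_detail(signal):
    """Detail coefficients of a one-level orthonormal Haar DWT."""
    s = np.asarray(signal, dtype=float)
    if s.size % 2:
        raise ValueError("signal length must be even")
    return (s[0::2] - s[1::2]) / np.sqrt(2.0)


def extract_bits(marked_axis, original_axis, n_bits, alpha=1e-3):
    """Recover bits by comparing detail coefficients with the original.

    Non-blind: the original axis values are required. A coefficient
    difference above alpha / 2 is read as 1, otherwise as 0.
    """
    delta = haar_detail(marked_axis)[:n_bits] - haar_detail(original_axis)[:n_bits]
    return (delta > alpha / 2.0).astype(int).tolist()
```

Thresholding at half the embedding constant tolerates small numerical errors left over after an attack has been corrected.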

We now have a set of binary values. The next step is text data reconstruction. Reversing the earlier conversion, we first convert the binary values into decimal values and then recover the text data by converting each decimal value to a character (string data type) using the ASCII table.
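The reconstruction described above can be sketched as below, assuming the bit stream is split into fixed-width groups of 8 bits (an assumption; the paper does not state the width) and each group is read as a decimal ASCII code. The function name `bits_to_text` is hypothetical.

```python
def bits_to_text(bits, width: int = 8) -> str:
    """Convert a flat list of bits back to text via decimal ASCII codes."""
    if len(bits) % width:
        raise ValueError("bit count must be a multiple of the character width")
    chars = []
    for i in range(0, len(bits), width):
        group = bits[i : i + width]
        code = int("".join(str(int(b)) for b in group), 2)  # binary -> decimal
        chars.append(chr(code))                             # decimal -> character
    return "".join(chars)


if __name__ == "__main__":
    print(bits_to_text([0, 1, 0, 0, 0, 1, 1, 0]))  # code 70 is 'F'
```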

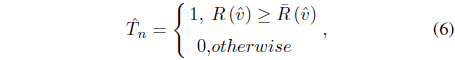

Attacks on a watermarked object are to be expected, for example when a user wants to pass off another person's work as his or her own. To withstand such attacks, the watermarking system must be robust. To address this problem, we introduce a correction term that is applied before the extraction process. This correction term handles each type of geometric attack. The geometric attacks considered in this research are rotation, scale, and translation attacks.

The type of geometric attack must be identified before the correction term is applied. The procedure is as follows. First, we check for a rotation attack. We compare the vertices of the original object with the vertices of the attacked watermarked object, and we examine the length of the result of each vertex comparison. If these lengths differ from one vertex comparison to another, we conclude that a rotation attack has occurred.

The second step checks for a scale attack. To do so, we divide the vertices along each axis of the watermarked object by those of the original object and then compare the results with each other. If these comparison results identify the attack, we can determine the scale factor. If neither of the two previous checks identifies the attack, we assume that it is a translation attack. A translation factor can be determined by using vertex subtraction between

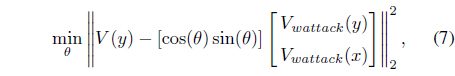
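The scale and translation checks can be sketched as follows, assuming vertices are stored as an `(n, 3)` array with matching order in both objects. A scale attack is inferred when the per-axis ratios are constant, and a translation attack when the per-axis differences are constant. The tolerance `tol` and the function names are hypothetical; in practice the tolerance would need to exceed the small perturbation introduced by the watermark itself.

```python
import numpy as np


def estimate_scale(attacked, original, tol=1e-6):
    """Return per-axis scale factors if the ratios are constant, else None."""
    a = np.asarray(attacked, dtype=float)
    o = np.asarray(original, dtype=float)
    factors = []
    for k in range(o.shape[1]):
        mask = np.abs(o[:, k]) > tol          # skip near-zero denominators
        if not mask.any():
            return None
        ratios = a[mask, k] / o[mask, k]
        if ratios.max() - ratios.min() > tol:
            return None                       # ratios vary: not a pure scale
        factors.append(ratios.mean())
    return np.array(factors)


def estimate_translation(attacked, original, tol=1e-6):
    """Return the translation vector if the differences are constant, else None."""
    diff = np.asarray(attacked, dtype=float) - np.asarray(original, dtype=float)
    if np.any(diff.max(axis=0) - diff.min(axis=0) > tol):
        return None
    return diff.mean(axis=0)


def undo_scale(attacked, factors):
    """Correction for a scale attack: divide by the estimated factors."""
    return np.asarray(attacked, dtype=float) / factors


def undo_translation(attacked, offset):
    """Correction for a translation attack: subtract the estimated offset."""
    return np.asarray(attacked, dtype=float) - offset
```

For example, `estimate_scale(2.5 * vertices, vertices)` returns factors close to 2.5 on every axis, and `undo_scale` then restores the original coordinates.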

After we detect the type of attack, we can apply the correction term. The following equation describes the correction term for the rotation attack. The goal of the correction term for the rotation attack is to find the angle by which the watermarked object was rotated. This problem can be solved using the least squares method. Assume that the watermarked object that has been attacked is

where

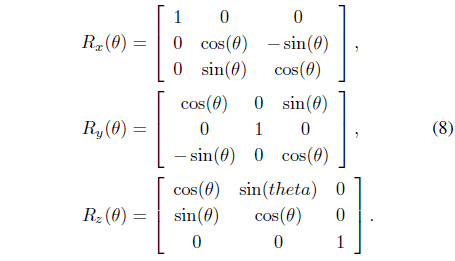

In addition, there are three basic rotation matrices [
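For reference, the sketch below writes out the three standard basic rotation matrices and a closed-form least squares estimate of the rotation angle. It assumes a single rotation about the z axis, vertices stored as rows of an `(n, 3)` array, and matching vertex order in both objects; these assumptions and the function names are for illustration and may differ from the paper's formulation.

```python
import numpy as np


def rot_x(t):
    """Basic rotation about the x axis by angle t (radians)."""
    c, s = np.cos(t), np.sin(t)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(t):
    """Basic rotation about the y axis by angle t (radians)."""
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(t):
    """Basic rotation about the z axis by angle t (radians)."""
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def estimate_angle_z(attacked, original):
    """Least squares angle minimizing sum ||a_i - R_z(theta) o_i||^2."""
    a = np.asarray(attacked, dtype=float)
    o = np.asarray(original, dtype=float)
    num = np.sum(o[:, 0] * a[:, 1] - o[:, 1] * a[:, 0])
    den = np.sum(o[:, 0] * a[:, 0] + o[:, 1] * a[:, 1])
    return np.arctan2(num, den)


def undo_rotation_z(attacked, theta):
    """Apply the inverse rotation; rows were rotated as o @ R.T."""
    return np.asarray(attacked, dtype=float) @ rot_z(theta)


if __name__ == "__main__":
    o = np.array([[1.0, 0.0, 0.5], [0.0, 2.0, -1.0], [1.5, 1.0, 0.0]])
    a = o @ rot_z(np.deg2rad(30.0)).T      # simulated rotation attack
    theta = estimate_angle_z(a, o)
    print(np.rad2deg(theta))
    print(np.allclose(undo_rotation_z(a, theta), o))
```

The same closed form applies to rotations about the x or y axis by using the two coordinates perpendicular to that axis.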

The remaining correction terms are for the scale and translation attacks. We can use the division between

To represent characters, symbols, and numbers in text data, we used “Fd19?!” as the text data. To determine the performance of the system, we tested it under three scenarios: the first tests the system under a rotation attack, the second under a scale attack, and the third under a translation attack. The processes were carried out on a 2.4 GHz Intel Core i3 processor with 2 GB of memory.

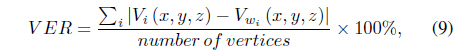

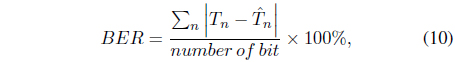

Performance of the system was evaluated in terms of the vertices error rate (VER) and bit error rate (BER), defined as follows:

where
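As an illustration of the two metrics, the sketch below computes the BER as the fraction of mismatched bits, which is its standard definition. The VER function is only an assumed reading: it counts the fraction of vertices whose position deviates from the original by more than a tolerance `tol`. The paper's exact VER formula did not survive in the text, so that definition, the tolerance, and the function names are hypothetical.

```python
import numpy as np


def bit_error_rate(extracted_bits, embedded_bits):
    """Fraction of extracted bits that differ from the embedded bits."""
    e = np.asarray(extracted_bits)
    m = np.asarray(embedded_bits)
    if e.shape != m.shape:
        raise ValueError("bit sequences must have the same length")
    return float(np.mean(e != m))


def vertex_error_rate(recovered, original, tol=1e-6):
    """Assumed definition: fraction of vertices displaced by more than tol."""
    r = np.asarray(recovered, dtype=float)
    o = np.asarray(original, dtype=float)
    dist = np.linalg.norm(r - o, axis=1)   # per-vertex Euclidean error
    return float(np.mean(dist > tol))
```

Under these definitions both metrics lie between 0 and 1, with 0 meaning a perfect match.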

In this experiment, we applied single attacks rather than blended (combined) attacks. The sample of the angle that influence in

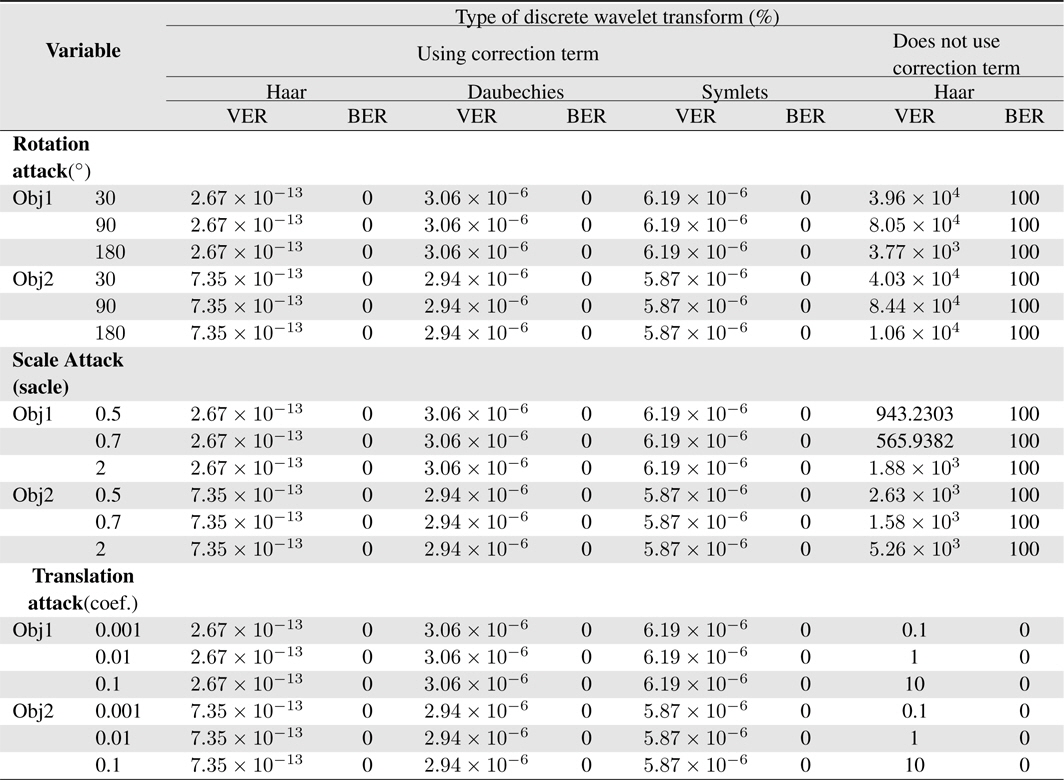

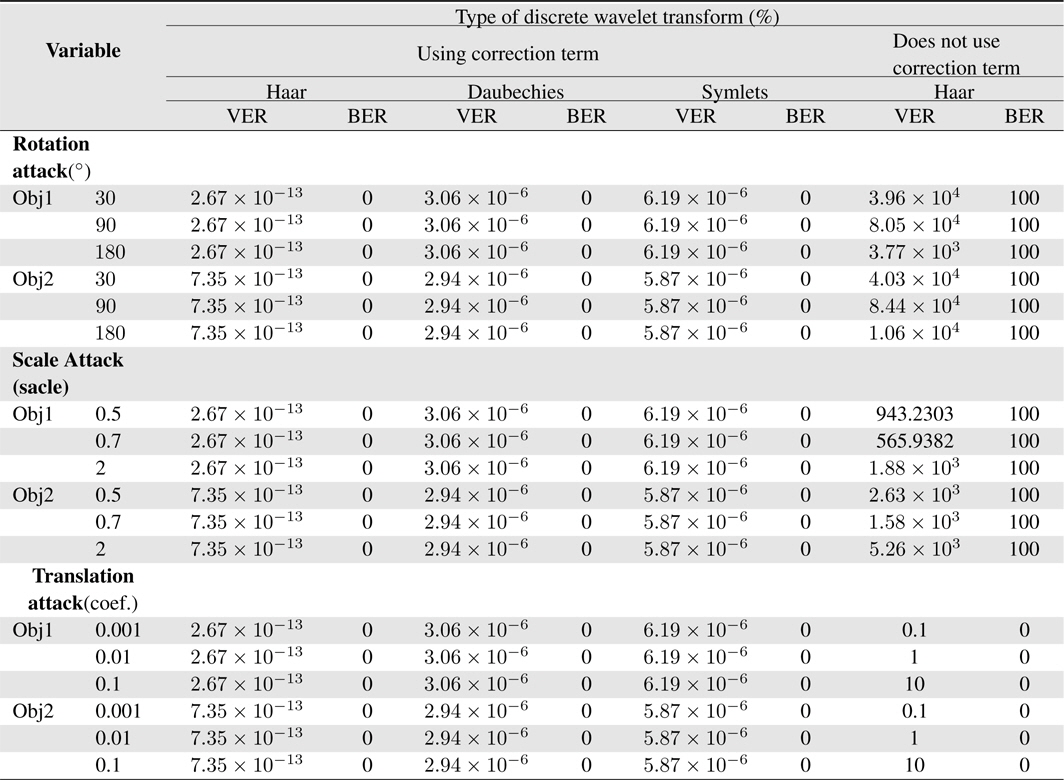

As Table 1 shows, the Haar DWT with the correction term produces a smaller VER than the other types of DWT. Because the Haar DWT is the simplest type of DWT, it does not require complex computation. The extraction result is extremely good because the correction term is applied before the extraction process; without the correction term, the vertices affected by the attacks contain values that can distort the shape of the object. The BER values show this result.

[Table 1.] Performance comparisons


This paper presents a simple method for watermarking 3D triangular meshes produced from a Kinect sensor. The key idea of this research is to apply the DWT to 3D triangular mesh watermarking without complex computation. By using constant regularization and a correction term, the watermarking succeeds under the tested attacks, as reflected in the VER and BER results. Among the DWT types tested, the Haar DWT produces the smallest VER.

Because this research considered only single attacks, future research should consider blended attacks. A simple and robust method of blind watermarking is another direction for future research, because the watermarking in this research is non-blind.