Liquid crystal displays (LCDs) respond more slowly than cathode ray tube (CRT) displays because of the LCD hold time [1-3], so ghosting or motion blur is often perceived around fast-moving objects on LCDs. To reduce motion blur, backlight flashing [4], signal overdrive [5], or frame rate up-conversion (FRUC) [6-12] can be used. However, with backlight flashing, luminance fluctuation may be visible when the flashing rate is not high enough, and signal overdrive does not consider image content [1].

To increase the video frame rate for high temporal resolution, frame repetition, black/gray frame insertion and linear interpolation may be used; however, these methods cannot remove motion blur and judder because they do not use motion information [13]. To produce intermediate frames free of block artefacts and motion blur, motion compensated frame interpolation (MCFI) must be used, where motion vectors (MVs) must be correctly estimated by means of various motion estimation (ME) methods.
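The limitation of interpolation without motion information can be seen in a minimal one-dimensional sketch. The function names and the toy frames below are illustrative assumptions, not part of the original work: a bright one-pixel object moves four pixels between two frames, and neither frame repetition nor per-pixel averaging places it at the midpoint.

```python
def repeat_frame(prev, nxt):
    """Frame repetition: the intermediate frame is a copy of the previous frame."""
    return list(prev)


def average_frames(prev, nxt):
    """Linear interpolation at the temporal midpoint: per-pixel average."""
    return [(a + b) / 2 for a, b in zip(prev, nxt)]


# Toy scan line: a bright pixel moves from index 1 to index 5.
prev = [0, 255, 0, 0, 0, 0, 0]
nxt = [0, 0, 0, 0, 0, 255, 0]

print(repeat_frame(prev, nxt))    # object stays at index 1 (judder)
print(average_frames(prev, nxt))  # two half-bright copies at 1 and 5 (ghosting)
```

A motion-compensated interpolator would instead be expected to place a single full-brightness pixel near index 3, which is why the MVs have to be estimated.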

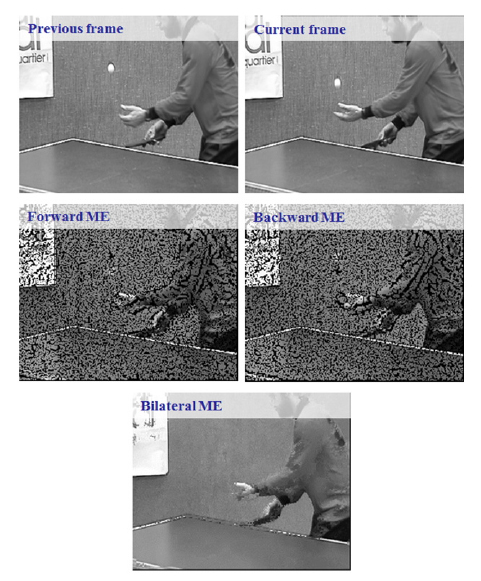

Conventional MCFI uses unidirectional ME (UME): forward ME, backward ME and bilateral ME [14-17]. The critical drawback of UME is shown in Fig. 1. Forward and backward ME cannot estimate MVs for all pixels, so holes appear where no MV is assigned and overlaps appear where multiple MVs are assigned. Meanwhile, bilateral ME may not estimate correct MVs when the matching score of a block including moving objects is lower than the matching scores of other blocks. In the result of MCFI using bilateral ME in Fig. 1, the block including a moving ball is not compensated [18].
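The holes and overlaps of forward ME can be reproduced with a small one-dimensional sketch. The helper name, the block layout and the motion vectors are hypothetical values chosen for illustration: each block is projected along half of its forward MV onto the intermediate frame, and the number of blocks landing on each pixel is counted.

```python
def coverage_after_forward_projection(width, block_size, motion_vectors):
    """Count how many projected blocks land on each intermediate-frame pixel.

    motion_vectors[i] is an assumed forward displacement (in pixels, even
    values here) of block i; the block travels half of it to the midpoint.
    """
    coverage = [0] * width
    for i, mv in enumerate(motion_vectors):
        start = i * block_size + mv // 2
        for x in range(start, start + block_size):
            if 0 <= x < width:
                coverage[x] += 1
    return coverage


# Three 4-pixel blocks; only the middle block moves (by 4 pixels).
cov = coverage_after_forward_projection(width=12, block_size=4,
                                        motion_vectors=[0, 4, 0])
holes = [x for x, c in enumerate(cov) if c == 0]
overlaps = [x for x, c in enumerate(cov) if c > 1]
print(cov)
print("holes:", holes, "overlaps:", overlaps)
```

Pixels with a count of zero have no MV passing through them and pixels with a count above one receive several candidates, which is the ambiguity that the interpolation stage then has to resolve.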

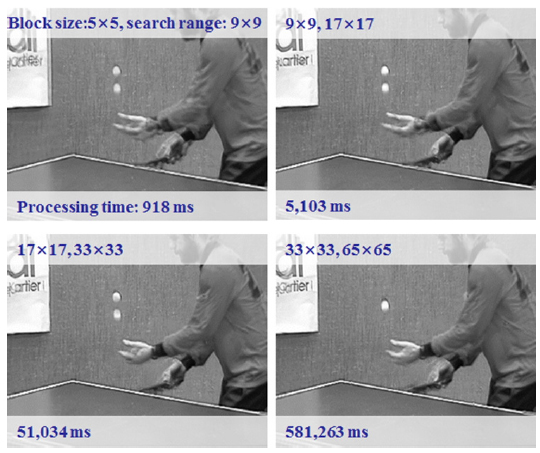

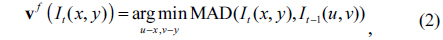

To obtain better interpolation results, bidirectional ME (BME) and overlapped block motion compensation (OBMC) are used [19-22]. The results of BME + OBMC are very sensitive to the sizes of the blocks and search regions used for block matching, as shown in Fig. 2. When the sizes are small, large motions are not estimated; when the sizes are large, detailed motions are not compensated and the processing time becomes very long.

Various methods have been proposed to improve the performance or reduce the computation of FRUC [23-25]. However, regions overlapping with large or complex motions are not correctly compensated. To solve this problem, variable block sizes and overlapping ranges are needed. A method using variable block sizes was presented in [26]; however, it splits blocks on a quad scale (the coarse-grained block size is 16×16 and the fine-grained block size is 4×4), and refined motion estimation is always performed for all blocks, whether or not a block is located at an object boundary.

This paper aims to develop a novel FRUC method that robustly compensates regions overlapped with large or complex motions. Accordingly, the proposed method uses variable block sizes and overlapping ranges. The block sizes are enlarged by checking a matching score, and the overlapping ranges of the weighted OBMC (WOBMC) are enlarged by the same ratio as the blocks. Therefore, the block sizes and the weighted overlapping ranges can be suitably determined according to the movement and the size of objects, so the proposed method can interpolate frames with high visual and objective performance.
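The idea of enlarging a block by checking its matching score can be sketched as follows. This is only an illustration under stated assumptions, not the paper's algorithm: the enlargement rule (grow the block and the search range by one pixel per side while the best mean absolute difference stays above a threshold), the threshold value, the size limit and all function names are hypothetical.

```python
def mad_at(cur, ref, cx, cy, half, dx, dy):
    """Mean absolute difference between the block centred at (cx, cy) in cur
    and the block shifted by (dx, dy) in ref; None if a block leaves the frame."""
    h, w = len(cur), len(cur[0])
    if not (half <= cx < w - half and half <= cy < h - half):
        return None
    if not (half <= cx + dx < w - half and half <= cy + dy < h - half):
        return None
    total = 0
    for y in range(cy - half, cy + half + 1):
        for x in range(cx - half, cx + half + 1):
            total += abs(cur[y][x] - ref[y + dy][x + dx])
    return total / (2 * half + 1) ** 2


def best_mv(cur, ref, cx, cy, half, search):
    """Full search over [-search, search]^2; returns (score, dx, dy) or None."""
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            score = mad_at(cur, ref, cx, cy, half, dx, dy)
            if score is not None and (best is None or score < best[0]):
                best = (score, dx, dy)
    return best


def estimate_with_enlargement(cur, ref, cx, cy, half=1, search=1,
                              threshold=1.0, max_half=3):
    """Assumed rule: enlarge block and search range while the match is poor."""
    while True:
        best = best_mv(cur, ref, cx, cy, half, search)
        if best is None or best[0] <= threshold or half >= max_half:
            return best, half
        half += 1
        search += 1


def make_frame(size, left):
    """Black frame with a textured 3x3 object whose left column is `left`."""
    frame = [[0] * size for _ in range(size)]
    for i in range(3):
        for j in range(3):
            frame[6 + i][left + j] = 10 * (i + 1) + j
    return frame


cur = make_frame(16, left=5)
ref = make_frame(16, left=8)   # the object has moved 3 pixels to the right
best, half = estimate_with_enlargement(cur, ref, cx=6, cy=7)
print(best, half)  # (0.0, 3, 0) 3
```

In this toy case the 3×3 and 5×5 blocks cannot reach the 3-pixel displacement, so the block grows to 7×7 before a good match is found. The ratio between the final and initial block sizes is the kind of quantity that could then scale the overlapping range.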

II. MCFI USING BME AND WOBMC WITH VARIABLE BLOCK SIZES

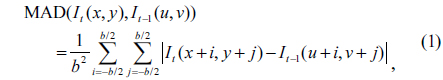

To estimate forward MVs, the proposed method uses a block matching algorithm (BMA) using mean of absolute differences (MAD), which can directly compare matching scores for blocks with variable sizes in contrast to sum of absolute differences (SAD) [27], in the direction from the current frame (

where the block size is

where

To estimate an MV at pixel

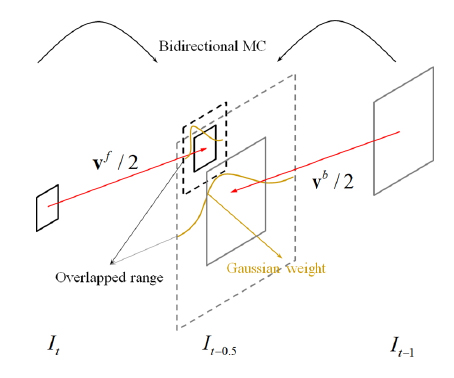
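The reason for preferring MAD over SAD when block sizes vary can be checked with a short sketch. The helper names and the constant test blocks are illustrative assumptions: for the same per-pixel error, SAD grows with the number of pixels in the block, whereas MAD stays the same and can therefore be compared across block sizes.

```python
def sad(block_a, block_b):
    """Sum of absolute differences between two equally sized 2-D blocks."""
    return sum(abs(a - b)
               for row_a, row_b in zip(block_a, block_b)
               for a, b in zip(row_a, row_b))


def mad(block_a, block_b):
    """Mean of absolute differences: SAD divided by the number of pixels."""
    n = sum(len(row) for row in block_a)
    return sad(block_a, block_b) / n


def constant_block(size, value):
    """Square block filled with a single value (toy data)."""
    return [[value] * size for _ in range(size)]


small_a, small_b = constant_block(4, 10), constant_block(4, 12)
large_a, large_b = constant_block(8, 10), constant_block(8, 12)

print(sad(small_a, small_b), sad(large_a, large_b))  # 32 128
print(mad(small_a, small_b), mad(large_a, large_b))  # 2.0 2.0
```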

Using the estimated forward and backward MVs, v

Finally, the brightness of the interpolated frame may be reduced owing to the OBMC; the method compensates for this by using the difference with the interpolated image using the averaging

where denotes the low-pass filtering of

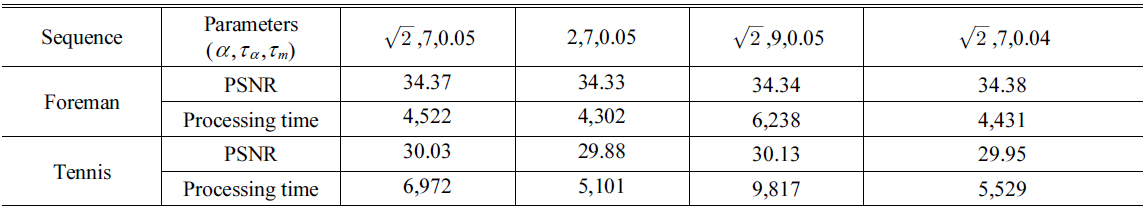

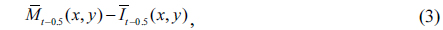
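As a rough illustration of overlapped block compensation with per-pixel weights, the following one-dimensional sketch blends neighbouring block predictions inside an overlapping range. The triangular window, the constant per-block predictions and the function name are assumptions made for this example; they are not the weights defined in the paper. The sketch also normalises by the sum of weights, which is a choice of this illustration, whereas the paper describes a separate brightness compensation step.

```python
def overlapped_blend(length, block_size, overlap, block_values):
    """Blend per-block predictions with a triangular window that extends
    `overlap` pixels beyond each block, normalising by the weight sum."""
    acc = [0.0] * length
    wsum = [0.0] * length
    scale = block_size / 2 + overlap + 0.5   # keeps every weight positive
    for i, value in enumerate(block_values):
        centre = i * block_size + (block_size - 1) / 2
        first = max(0, i * block_size - overlap)
        last = min(length, (i + 1) * block_size + overlap)
        for x in range(first, last):
            w = 1.0 - abs(x - centre) / scale
            acc[x] += w * value
            wsum[x] += w
    return [a / s for a, s in zip(acc, wsum)]


# Two 4-pixel blocks predicted as 0 and 100.
print(overlapped_blend(8, 4, 0, [0, 100]))  # hard edge at the block boundary
print(overlapped_blend(8, 4, 2, [0, 100]))  # gradual transition across it
```

With no overlap the block boundary is a hard step, which corresponds to a block artefact; widening the overlapping range spreads the transition over more pixels.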

To evaluate the proposed method, we tested 12 image sequences: CIF (common intermediate format, 352×288) sequences at 30 Hz and full HD (high definition, 1920×1080) sequences at 50 Hz. For each sequence, 51 odd frames were used to produce 50 intermediate frames. The 50 interpolated frames were compared with the 50 real even frames to evaluate their peak signal-to-noise ratio (PSNR), as shown in Fig. 5. The initial sizes of the block, search range and overlapping range were set to 5×5, 9×9 and 7×7, respectively; other parameters were selected as follows: is the typical value for upscaling of images, and

[TABLE 1.] Comparison of Variable Parameter values (PSNR [dB] and processing time [ms])


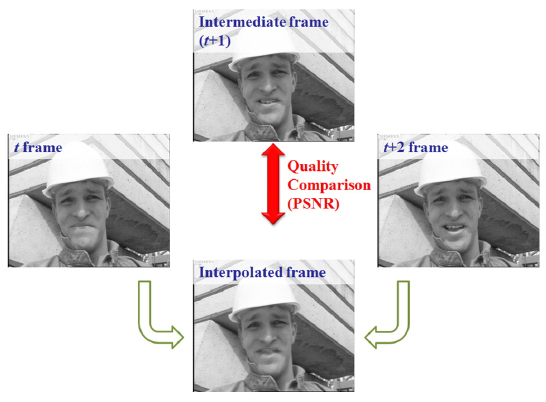

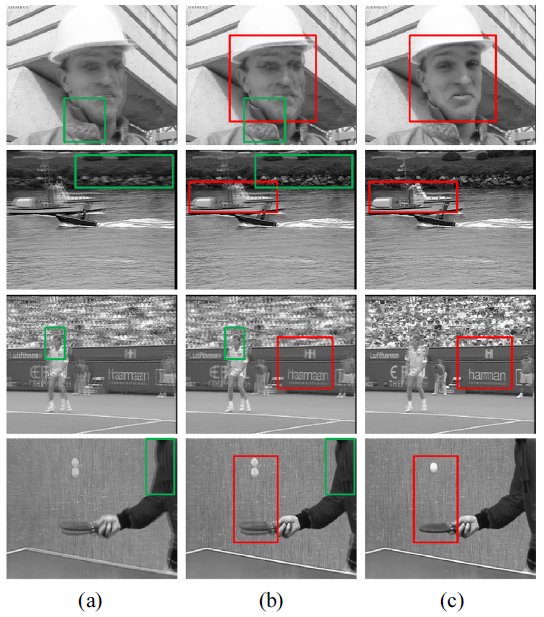
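The evaluation protocol described above (interpolate from the odd frames, then score against the withheld even frames) can be sketched as follows. The function names are illustrative, the PSNR uses the standard definition with a peak value of 255 for 8-bit images, and the tiny frames are toy data rather than the test sequences.

```python
import math


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized 2-D images."""
    count = 0
    squared_error = 0.0
    for row_r, row_t in zip(reference, test):
        for r, t in zip(row_r, row_t):
            squared_error += (r - t) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        return math.inf          # identical images
    return 10.0 * math.log10(peak * peak / mse)


def split_odd_even(frames):
    """Odd-numbered frames (1st, 3rd, ...) are the inputs; even-numbered
    frames are withheld as ground truth for the interpolated frames."""
    return frames[0::2], frames[1::2]


# 101 consecutive frames give 51 inputs and 50 ground-truth frames.
inputs, truth = split_odd_even(list(range(101)))
print(len(inputs), len(truth))

original = [[100, 100], [100, 100]]
off_by_one = [[101, 99], [101, 99]]
print(round(psnr(original, off_by_one), 2))
```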

A visual comparison of the results of OBMC, WOBMC and the proposed method is shown in Fig. 6. The images interpolated by WOBMC have fewer block artefacts than the images interpolated by OBMC in almost all regions because WOBMC uses weights for all pixels. The proposed method, moreover, removed most block artefacts and motion blurs, and was able to produce clean intermediate frames owing to the variable block sizes.

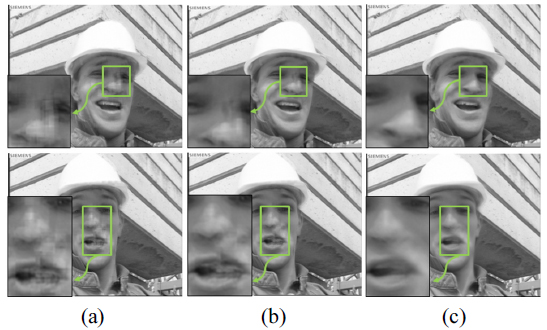

Some result images are shown in Fig. 7. Many regions of motion blur are observed in the averaged images (Fig. 7(a)), small motion regions are compensated in the results with a static block size (Fig. 7(b), green boxes), and most motion regions are more clearly interpolated by the proposed method (Fig. 7(c), red boxes). In the results of our method, the face region is preserved in the ‘foreman’ sequence, and the shapes of the boat and the river bank regions are clearly interpolated in the ‘coastguard’ sequence. In the ‘stefan’ sequence, the spectators are clearly separated, the text of the billboard is distinctly shown, and the motion blur of the player is reduced. Notably, the table tennis ball appears as a single object in the ‘tennis’ sequence.

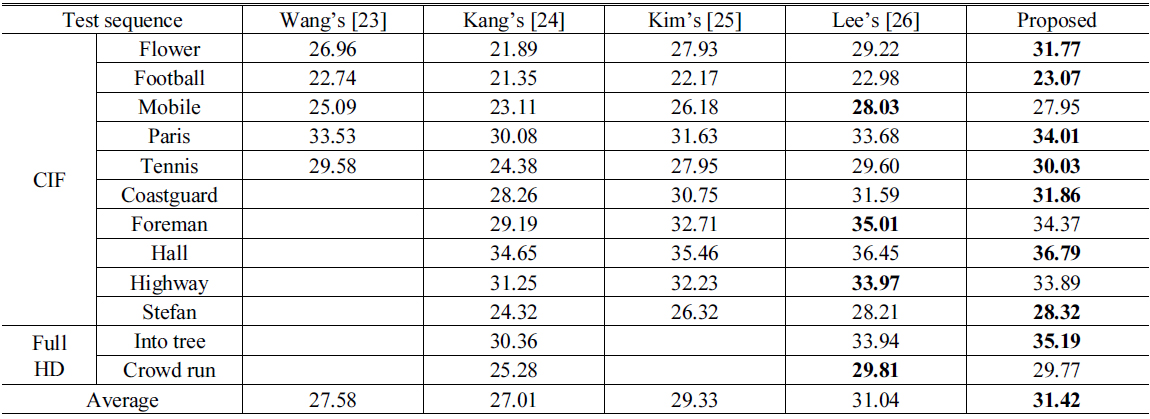

For an objective comparison, the proposed method was compared with other recent methods [23-26], as shown in Table 2. In most test sequences, the PSNRs of the proposed method are higher than those of the other methods. In particular, the proposed method improves performance in sequences having large motions, such as ‘football’, ‘tennis’ and ‘stefan’. Fig. 8 shows the resultant images of the proposed method and the other conventional methods [23-26] (significant motion blurs and block artifacts are marked as red regions). Even though the variable block size method [26] can reduce some motion blurs, large motion blurs are not corrected; the proposed method, in contrast, reduced most motion blurs and block artifacts.

[TABLE 2.] PSNR Comparison with Other Methods (PSNR [dB])


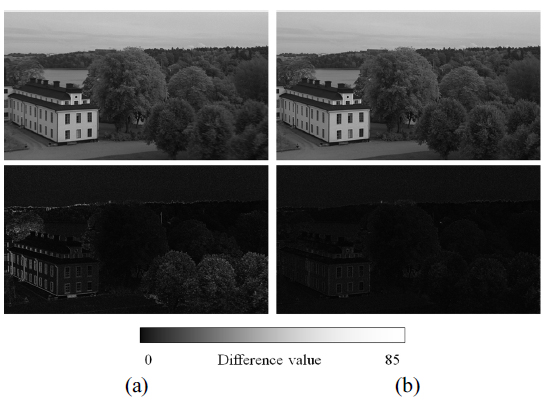

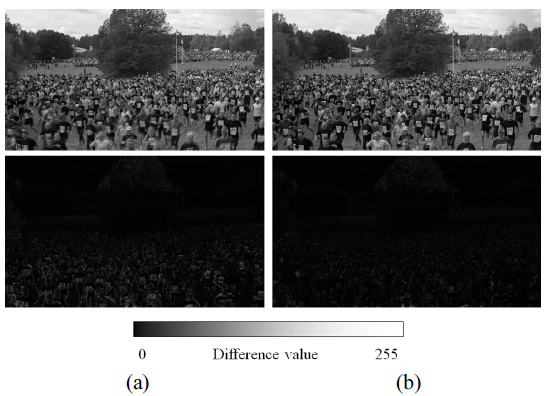

In the test on full HD image sequences, the results of our method are very similar to the original frames, as shown in Figs. 9 and 10, where the differences between the results of the proposed method and the original images are very small.

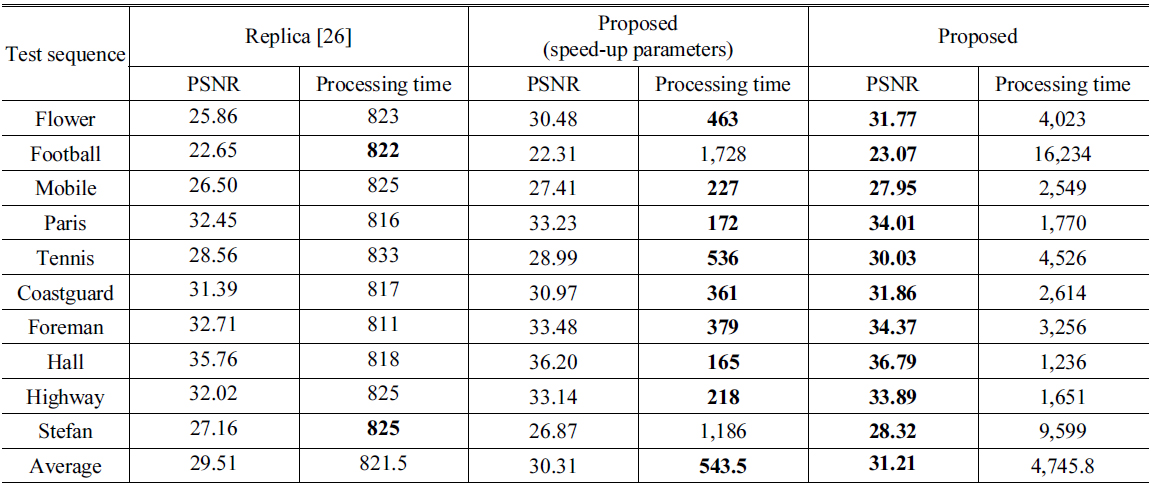

To evaluate the complexity of the proposed method, we compared it with a symmetric motion estimation method with variable search ranges [28], which was implemented in software. Lim’s method [28] uses variable search ranges, while our method uses variable MV sizes and variable search ranges. The average PSNR of the proposed method is slightly higher than that of Lim’s method, while our method is about 144 times faster on average. In addition, we compared our method with a replica of Lee’s method [26], as shown in Table 3, where we tested the proposed method with two parameter sets: one set uses the same parameters as the above experiments, and the other set (the speed-up parameters) uses 7×7 and 5×5 for the initial search range and overlapping range, respectively, with one MV estimated per 5×5 pixels. With the original parameters, the proposed method has the highest complexity; with the speed-up parameters, however, it has the lowest complexity and still achieves a higher PSNR than the replica of Lee’s method [26].

[TABLE 3.] Comparison with replica [26] (PSNR [dB] and processing time [ms])


The average processing time of the proposed method for 10 CIF sequences is 4,746 ms; however, this was measured with a software implementation. Variable block size ME realized in dedicated hardware is fast enough for real-time processing of HD images [29, 30], so the proposed method can be used for real-time FRUC when implemented in hardware.

A FRUC method using WOBMC with variable block sizes has been proposed in this paper. In the proposed method, bidirectional MVs are estimated using block sizes and search ranges that vary according to the matching scores. The overlapping range for WOBMC is also enlarged according to the ME expansion rate. In addition, brightness is compensated by comparison with the median-filtered image of the averaging interpolation. Therefore, the proposed method can provide high-performance FRUC regardless of the magnitude and complexity of motions to reduce LCD motion blurs.

![Comparison of Variable Parameter values (PSNR [dB] and processing time [ms])](http://oak.go.kr/repository/journal/15924/E1OSAB_2015_v19n5_537_t001.jpg)

![PSNR Comparison with Other Methods (PSNR [dB])](http://oak.go.kr/repository/journal/15924/E1OSAB_2015_v19n5_537_t002.jpg)

![Sample result images (from top to bottom: replica [23], replica [24], replica [25], replica [26] and the proposed method); (a) ‘flower’, (b) ‘football’, and (c) ‘stefan’ sequences.](http://oak.go.kr/repository/journal/15924/E1OSAB_2015_v19n5_537_f008.jpg)

![Comparison with replica [26] (PSNR [dB] and processing time [ms])](http://oak.go.kr/repository/journal/15924/E1OSAB_2015_v19n5_537_t003.jpg)