Recently, the use of mobile devices has increased, and mobile traffic is growing rapidly. To handle such massive traffic, cognitive radio (CR) is applied to use limited spectrum resources efficiently. However, multiple communication systems may try to access the same white space (unused spectrum), and the resulting interference can degrade the performance of all of them. Therefore, understanding the effects of interference in CR-based systems is crucial to operating these systems effectively. In this paper, we consider a long-term evolution (LTE) system that obtains additional spectrum by accessing the TV white space, where low-power devices (LPDs) are licensed primary users in addition to TV broadcasting service providers. We model such a heterogeneous system to analyze the co-existence problem and evaluate the interference effects of LPDs on LTE user equipment (UE) throughput. We then present methods to mitigate the interference effects of LPDs by ‘de-selecting’ some of the UEs, which effectively increases the overall sector throughput of the CR-based LTE system.

With the proliferation of smartphones, wearable devices, and other mobile devices, the efficiency of mobile wireless communication services has become more important. As mobile traffic grows rapidly [1], the capacity demand on wireless networks and the need for additional spectrum resources are increasing. However, frequency resources are limited, and securing additional spectrum bands for mobile communications is often too costly. Furthermore, it is not easy to relocate a spectrum band that has already been allocated to various systems, even if its usage is limited. This necessitates efficient CR-based systems with spectrum-sharing schemes. At the same time, the requirements for peak data rates and cell average spectrum efficiency are becoming stricter. LTE-Advanced (LTE-A), for example, is required to support a 1-Gbps peak data rate and a cell average spectrum efficiency of 3.7 bps/Hz/cell. To meet such requirements, carrier aggregation (CA) has been introduced for LTE-A; it aggregates two or more component carriers and supports transmission bandwidths of up to 100 MHz [2]. However, it is difficult to obtain as much as 100 MHz of spectrum exclusively dedicated to LTE-A. To address this spectrum scarcity, cognitive radio (CR), a technology that enables flexible use of spectrum bands among heterogeneous systems, is being actively considered [3]. CR enables a secondary user (SU) to use the white space without a license by sensing whether the target band is occupied by a primary user (PU). It is important that SUs do not interfere with PUs [4–6].

LTE systems need supplementary spectrum bands, and unlicensed LTE systems using CR, especially those targeting TV white space (TVWS), are being actively studied [7–10]. However, LTE is not the only system that intends to use the TVWS as an SU: IEEE 802.22 and IEEE 802.11af (WLAN operating in the TVWS) are also being standardized. If dissimilar systems attempt to use the same TVWS, they may interfere with one another. Therefore, the co-existence of heterogeneous systems in the same spectrum band needs to be studied [11–13]. In addition, several types of LPDs that use the TVWS spectrum, such as Bluetooth devices and wireless microphones, are increasingly common and constitute yet another important source of mutual interference. Therefore, identifying the effect of interference

Thus, in this paper, we investigate the interference effects of low-power devices (LPDs) on a CR-based LTE system. Although the interference is mutual and both directions need to be analyzed, as a first step we focus here on the interference from LPDs to LTE by simulating a multi-cell LTE system that utilizes TVWS in the presence of LPDs. We then propose to exclude some of the UEs that suffer severe interference from the LPDs when scheduling transmission resources. This is possible because the TVWS for such an LTE system is a secondary carrier in CA: UEs under severe interference are allocated only their primary, licensed LTE band. We analyze three cases of UE de-selection: random-based, distance-based, and SINR-based. SINR stands for signal-to-interference-plus-noise ratio.

The rest of this paper is organized as follows. Section II describes the system model. Section III describes the de-selection cases analyzed. Section IV provides simulation results and performance analysis, and Section V concludes the paper.

II. SYSTEM MODEL

The signal model received by

where

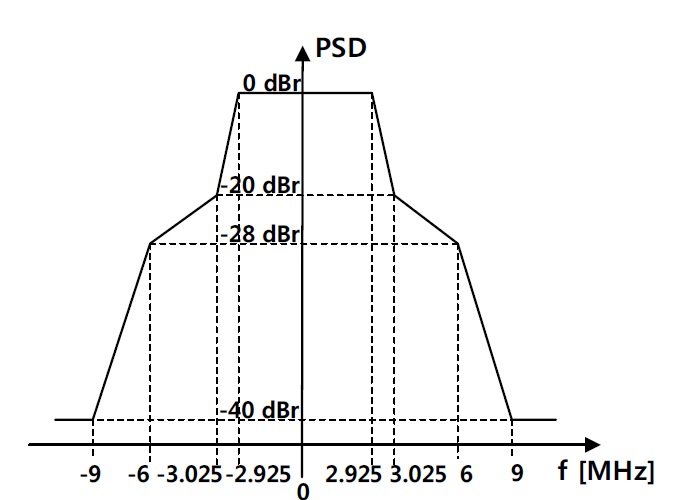

We define devices that transmit at most 1 W as LPDs and consider the worst case of 1-W transmission power, which gives the maximum interference to LTE UEs. A spectral mask for a 6-MHz TVWS channel is shown in Fig. 1 [14]. The LPD antenna height is assumed to range from 1 m to 10 m, with 0-dBi antenna gain. The pathloss model for LPDs is as defined in [2], which yields pathloss conditions similar to those of the LTE system occupying the same frequency and environment.
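The link budget behind the worst-case assumption can be sketched as follows. This is a minimal illustration, not the paper's model: it uses free-space pathloss as a hypothetical stand-in for the pathloss model of [2], ignores the spectral mask (i.e., it assumes co-channel interference), and assumes a 0-dBi UE antenna gain. Only the 1-W / 0.1-W powers, the 700-MHz carrier, and the 0-dBi LPD antenna gain come from the text; all function names are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def watts_to_dbm(p_w: float) -> float:
    """Convert a transmit power in watts to dBm (1 W -> 30 dBm)."""
    return 10.0 * math.log10(p_w * 1000.0)


def free_space_pathloss_db(distance_m: float, freq_hz: float) -> float:
    """Free-space pathloss in dB; a stand-in, NOT the pathloss model of [2]."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT_M_S)


def lpd_interference_dbm(
    p_tx_w: float,
    distance_m: float,
    freq_hz: float = 700e6,  # TVWS carrier frequency used in the paper
    g_tx_dbi: float = 0.0,   # LPD antenna gain assumed in the paper
    g_rx_dbi: float = 0.0,   # UE antenna gain: hypothetical assumption
) -> float:
    """Co-channel interference power (dBm) received by an LTE UE from one LPD."""
    return (watts_to_dbm(p_tx_w) + g_tx_dbi + g_rx_dbi
            - free_space_pathloss_db(distance_m, freq_hz))
```

For example, a 1-W LPD at 1 km yields about -59.35 dBm under these assumptions, and the 1-W case is always 10 dB stronger than the 0.1-W case at the same distance, which is one reason the 1-W case is treated as the worst case.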

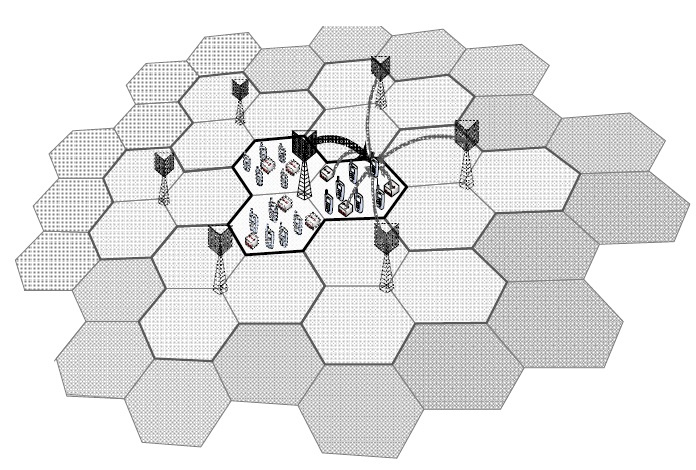

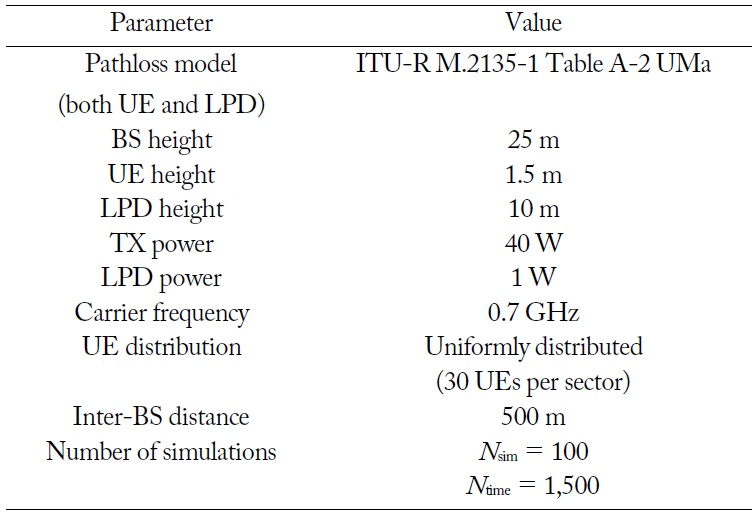

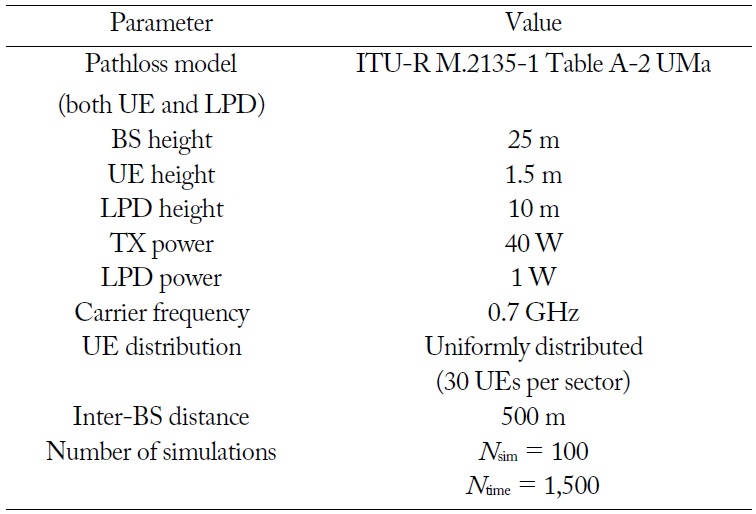

For the simulation environment of the CR-based LTE system, we consider a 19-cell, 57-sector multi-cell model. The overall structure of the system is shown in Fig. 2. Table 1 lists the simulation parameters, which follow [14] except for the carrier frequency: we use 700 MHz for the TVWS transmission. Proportional fair (PF) scheduling based on weighted sum-rate maximization is used for sequential UE selection. To evaluate the SINR and throughput of UEs, a simulation is repeatedly conducted for
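A PF scheduler of the kind described above can be sketched for a single resource per slot. This is a minimal illustration assuming the textbook PF rule (weights equal to the inverse of an exponentially averaged throughput), not the paper's exact multi-UE sequential selection; the averaging window `t_c`, the initial value `eps`, and the function name are hypothetical.

```python
from typing import List, Sequence


def pf_schedule(rates: Sequence[Sequence[float]], t_c: float = 100.0,
                eps: float = 1e-9) -> List[int]:
    """Single-resource proportional fair scheduler.

    rates[t][k] is the achievable rate of UE k in slot t. Returns the index of
    the UE scheduled in each slot. t_c (averaging window) and eps (initial
    average) are hypothetical tuning values.
    """
    num_ues = len(rates[0])
    avg = [eps] * num_ues  # exponentially averaged throughput R_k
    schedule: List[int] = []
    for slot_rates in rates:
        # Maximizing the weighted sum-rate with weights 1/R_k over a single
        # resource reduces to picking argmax_k r_k / R_k.
        k_star = max(range(num_ues), key=lambda k: slot_rates[k] / avg[k])
        schedule.append(k_star)
        # Update every UE's average; unscheduled UEs are served at rate 0.
        for k in range(num_ues):
            served = slot_rates[k] if k == k_star else 0.0
            avg[k] = (1.0 - 1.0 / t_c) * avg[k] + served / t_c
    return schedule
```

For example, `pf_schedule([[10.0, 1.0]] * 4)` returns `[0, 1, 0, 1]`: the UE whose rate is ten times lower still receives half of the slots. This fairness property is why a heavily interfered UE can pull down the sector throughput under PF scheduling.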

[Table 1.] Simulation parameters for performance evaluation


III. INTERFERENCE EFFECTS OF LOW POWER DEVICES

It is not easy to detect the LPDs because their transmit power is too weak for the LTE base station (BS) to sense their signals. Moreover, cooperative sensing among LTE BSs or remote radio heads does not work well in this situation, since LPDs are usually distributed locally. If the LTE BS transmits without detecting the LPDs, the LPDs would suffer severe interference from the LTE signals.

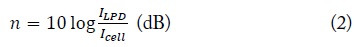

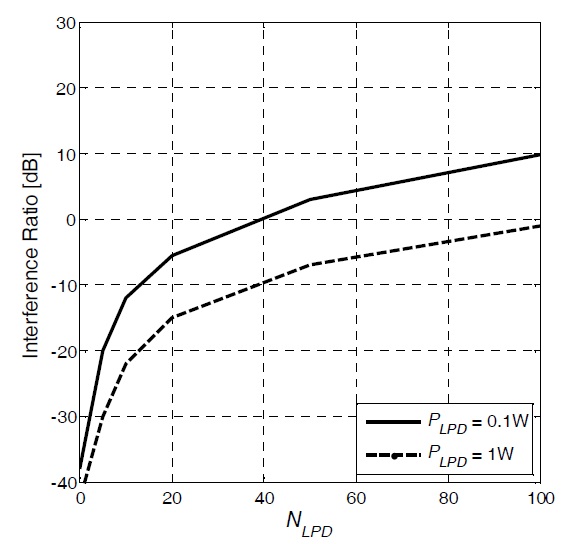

Fig. 3 shows the interference ratio that LTE systems receive from

where

Moreover, the performance degradation of the LTE system is significantly more severe with 1-W LPDs than with 0.1-W LPDs. Severely interfered UEs degrade the overall system performance, especially under PF scheduling, because a PF scheduler keeps granting resources to UEs with low average throughput even when their achievable rate is poor. Thus, de-selecting heavily interfered UEs could improve the overall system performance, and such UEs can be excluded from the scheduling set.

Because the target band is an auxiliary spectrum for CA, it does not need to be used all the time. We conduct simulations for the following three cases to evaluate the performance of a CR-based LTE system.

1. Random-Based De-selection

In this case, the UEs to be excluded from the scheduling set are chosen at random, without considering the amount of interference from LPDs. The result serves as a benchmark for the other de-selection cases.
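Random de-selection can be sketched in a few lines. This is an illustrative sketch assuming that UEs are identified by unique integer IDs; the function name and the `seed` parameter are hypothetical.

```python
import random
from typing import List, Optional, Sequence


def random_deselect(ue_ids: Sequence[int], num_deselect: int,
                    seed: Optional[int] = None) -> List[int]:
    """Return the scheduling set after removing num_deselect UEs chosen uniformly at random.

    Interference from LPDs is deliberately ignored (benchmark case).
    """
    if not 0 <= num_deselect <= len(ue_ids):
        raise ValueError("num_deselect must be between 0 and the number of UEs")
    rng = random.Random(seed)  # seeded generator for reproducible drops
    removed = set(rng.sample(list(ue_ids), num_deselect))
    return [ue for ue in ue_ids if ue not in removed]
```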

2. Distance-Based De-selection

The de-selection criterion in this case is the distance from the LPDs. The distance between each UE-LPD pair is calculated, and the UEs nearest to the LPDs are excluded from the serving set. In practice, it would be difficult to measure the exact distance between an LTE UE and an LPD. Therefore, the performance of distance-based de-selection indicates how much improvement could be achieved if such information were available. It can also serve as a reference for evaluating the effectiveness of the SINR-based de-selection scheme we propose below.
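One way to realize the distance criterion is to rank each UE by its distance to the closest LPD and remove the closest ones. This is a sketch under that interpretation, assuming 2-D positions in metres and exact (genie-aided) knowledge of LPD locations; the function name and data layout are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def distance_deselect(ue_pos: Dict[int, Point], lpd_pos: Sequence[Point],
                      num_deselect: int) -> List[int]:
    """Remove the num_deselect UEs closest to any LPD; keep the rest in order."""
    def nearest_lpd_distance(p: Point) -> float:
        # Distance from a UE to its closest LPD (assumes exact positions are known).
        return min(math.dist(p, q) for q in lpd_pos)

    ranked = sorted(ue_pos, key=lambda ue: nearest_lpd_distance(ue_pos[ue]))  # closest first
    removed = set(ranked[:num_deselect])
    return [ue for ue in ue_pos if ue not in removed]
```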

3. SINR-Based De-selection

In this case, the serving set is determined by the SINR of each UE: the UEs with the worst SINR are removed from the scheduling set. The SINR reflects the channel state of each UE, the interference, and the noise, where the interference is the sum of the interference from other-cell BSs and from LPDs. Accordingly, the SINR does not show the effect of LPDs directly, but it captures their influence indirectly. Because the evaluation is based on the SINR of the combined effect, which is available in practice, the results can be regarded as the performance actually achievable in practical situations. It turns out that the SINR-based scheme works considerably better than the distance-based scheme, demonstrating the effectiveness of the proposal in real operations.
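The SINR described above can be written as SINR_k = S_k / (I_BS,k + I_LPD,k + N), where S_k is the desired received power of UE k, I_BS,k and I_LPD,k are the summed interference from other-cell BSs and from LPDs, and N is the noise power. The sketch below computes it from dBm values and then removes the worst-SINR UEs. It assumes per-link received powers are already known (e.g., from a link budget); the function names and argument layout are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def dbm_to_mw(p_dbm: float) -> float:
    """Convert dBm to milliwatts."""
    return 10.0 ** (p_dbm / 10.0)


def sinr_db(signal_dbm: float, bs_interf_dbm: Sequence[float],
            lpd_interf_dbm: Sequence[float], noise_dbm: float) -> float:
    """SINR in dB: desired power over (other-cell BS + LPD interference + noise).

    Interference and noise powers are summed in linear units (mW), not in dB.
    """
    interference_mw = (sum(dbm_to_mw(p) for p in bs_interf_dbm)
                       + sum(dbm_to_mw(p) for p in lpd_interf_dbm))
    return 10.0 * math.log10(dbm_to_mw(signal_dbm)
                             / (interference_mw + dbm_to_mw(noise_dbm)))


def sinr_deselect(sinr_by_ue: Dict[int, float], num_deselect: int) -> List[int]:
    """Remove the num_deselect UEs with the lowest SINR; keep the rest in order."""
    ranked = sorted(sinr_by_ue, key=lambda ue: sinr_by_ue[ue])  # worst SINR first
    removed = set(ranked[:num_deselect])
    return [ue for ue in sinr_by_ue if ue not in removed]
```

Unlike the distance-based sketch, this one needs no LPD location information; the LPDs enter only through the measured interference term.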

IV. PERFORMANCE EVALUATION AND ANALYSIS

The performance of the three de-selection cases is evaluated and analyzed in this section, using the simulation parameters in Table 1. BSs are deployed with an inter-BS distance of 500 m. Thirty single-antenna UEs and 10 LPDs are dropped uniformly in the center cell, and large-scale fading is calculated based on the model described in [2]. The simulation is conducted repeatedly
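The geometry described above (19 sites with a 500-m inter-BS distance, 3 sectors per site for 57 sectors, and UEs and LPDs dropped uniformly in the center cell) can be sketched as below. It assumes the center cell is the hexagonal region closer to the center site than to any neighboring site, and uses rejection sampling to obtain a uniform drop; the function names are hypothetical, and no minimum UE-BS distance is applied.

```python
import math
import random
from typing import List, Tuple

Point = Tuple[float, float]


def hex_sites(isd_m: float = 500.0, rings: int = 2) -> List[Point]:
    """Site coordinates of a hexagonal layout: 1 + 6 + 12 = 19 sites for two rings."""
    sites = []
    for q in range(-rings, rings + 1):
        for r in range(-rings, rings + 1):
            if max(abs(q), abs(r), abs(q + r)) <= rings:  # hex distance from the center
                x = isd_m * (q + r / 2.0)
                y = isd_m * (r * math.sqrt(3) / 2.0)
                sites.append((x, y))
    return sites


def drop_in_center_cell(n: int, isd_m: float = 500.0, seed: int = 0) -> List[Point]:
    """Drop n points uniformly in the hexagonal cell of the center site (rejection sampling)."""
    rng = random.Random(seed)
    neighbours = [p for p in hex_sites(isd_m, rings=1) if p != (0.0, 0.0)]
    radius = isd_m / math.sqrt(3)  # circumradius of the hexagonal cell
    points: List[Point] = []
    while len(points) < n:
        p = (rng.uniform(-radius, radius), rng.uniform(-radius, radius))
        # Accept only points closer to the center site than to any neighbor.
        if all(math.hypot(*p) <= math.dist(p, s) for s in neighbours):
            points.append(p)
    return points
```

For instance, `drop_in_center_cell(30, seed=1)` and `drop_in_center_cell(10, seed=2)` would give one drop of 30 UEs and 10 LPDs; each repetition of the simulation would use a new seed.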

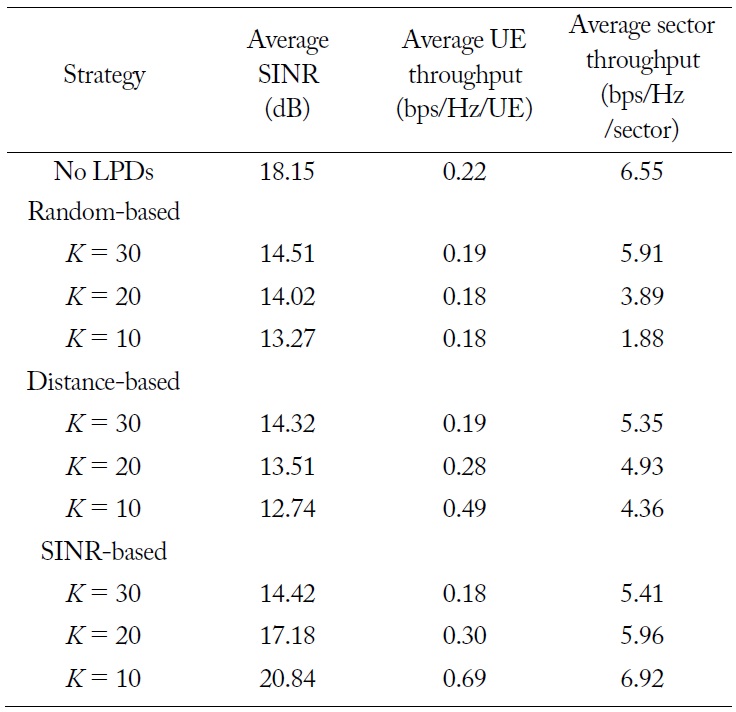

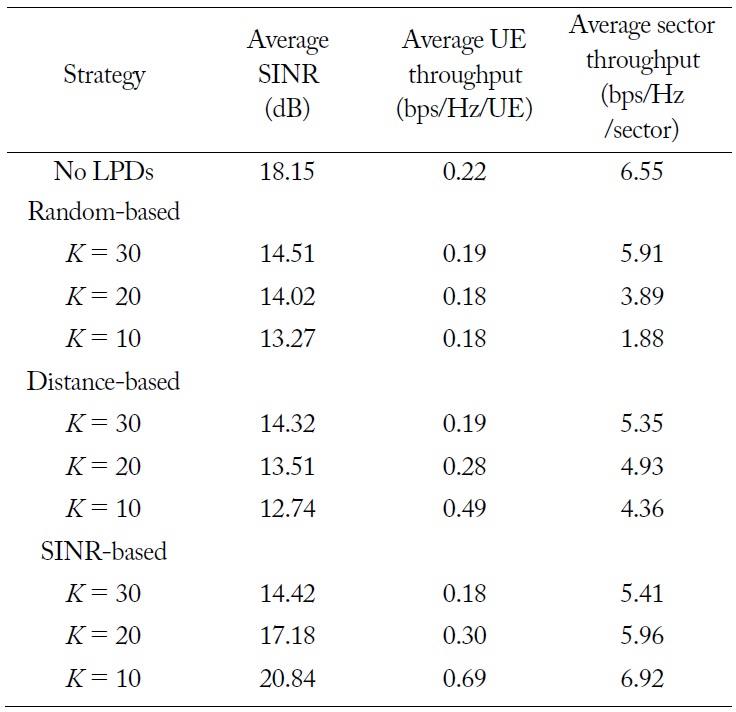

The performance measures are the throughput of the scheduled UEs in the serving set and the sector throughput, which is the sum of the throughputs of the served UEs in a sector. The average values of the SINR, UE throughput, and sector throughput are shown according to the number of UEs in the serving set, denoted by
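The metrics above, together with the 5th-percentile and median values discussed for the figures, can be computed as sketched here. The percentile uses linear interpolation between order statistics, which is an assumption, since the paper does not state its percentile definition; the function names are hypothetical.

```python
import math
from typing import Dict, Sequence


def sector_throughput(ue_throughput: Dict[int, float], serving_set: Sequence[int]) -> float:
    """Sum of the throughputs of the served UEs in one sector."""
    return sum(ue_throughput[ue] for ue in serving_set)


def percentile(samples: Sequence[float], q: float) -> float:
    """q-th percentile (0 <= q <= 100), linear interpolation between order statistics."""
    xs = sorted(samples)
    if not xs:
        raise ValueError("samples must be non-empty")
    pos = (len(xs) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)
```

Collecting one sector-throughput value per simulation drop, `percentile(samples, 5)` and `percentile(samples, 50)` give the lower-5% and median values used to compare the de-selection strategies.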

[Table 2.] Performance of de-selection strategies


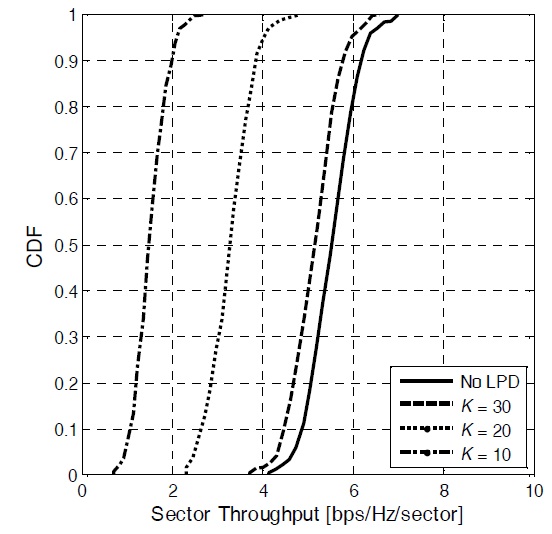

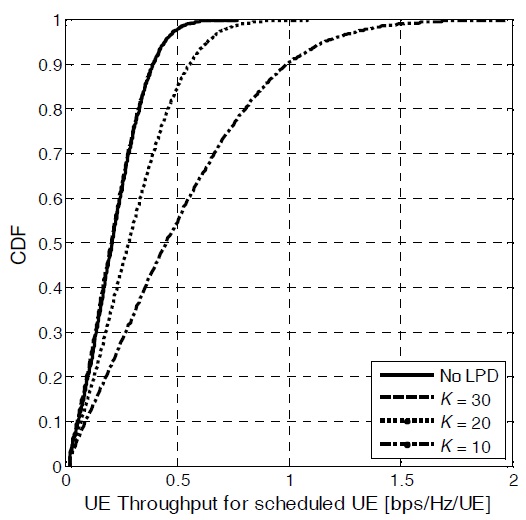

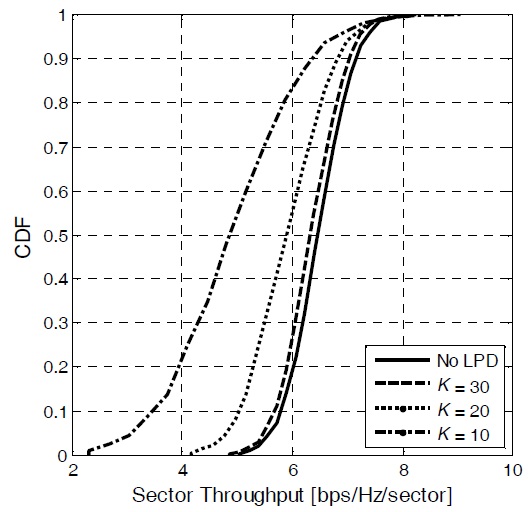

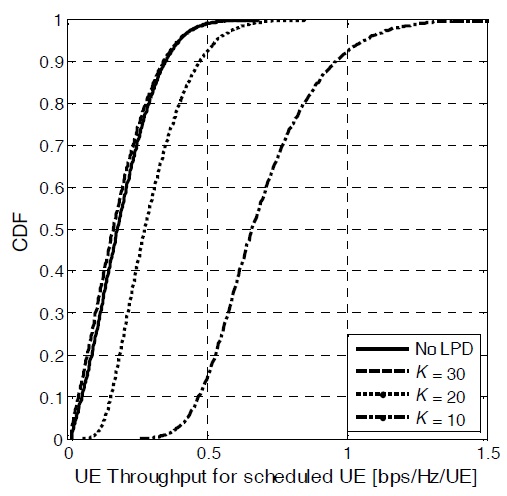

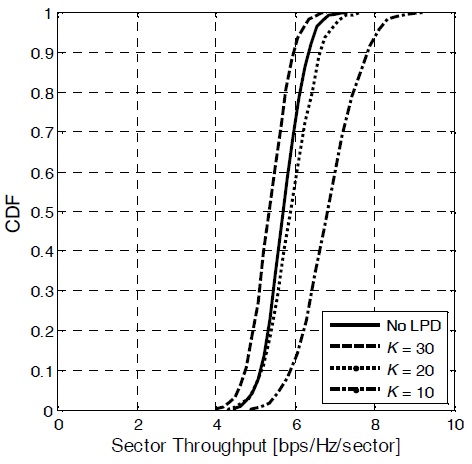

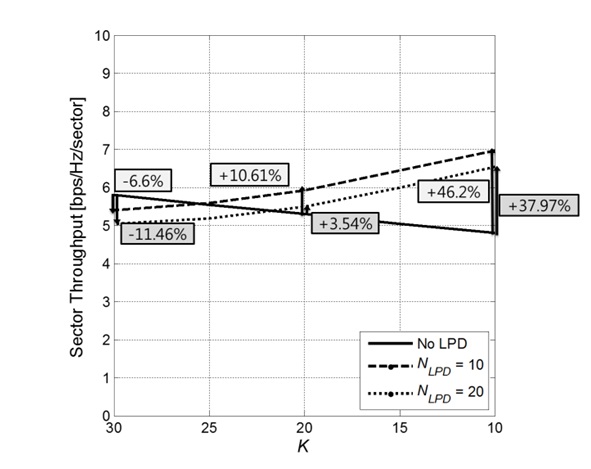

The sector throughput of random-based de-selection is shown in Fig. 4. As the number of de-selected UEs increases, the peak sector throughput degrades, which means that both the SINR and the throughput of each UE decrease. Figs. 5 and 6 show the UE throughput and the sector throughput, respectively, of distance-based de-selection. As the number of de-selected UEs increases, the 5th-percentile sector throughput falls, while the peak sector throughput remains almost the same even though the UE throughput improves.

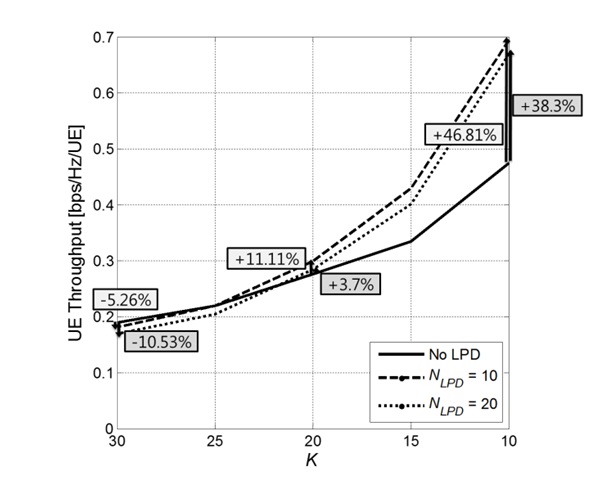

The UE throughput of SINR-based de-selection is illustrated in Fig. 7, and the sector throughput is shown in Fig. 8. SINR-based de-selection improves both the UE throughput and the sector throughput. The median UE throughput becomes almost three times as large, and the median sector throughput nearly 1.3 times as large, when

The performance of each strategy is shown in Table 2. When all 30 UEs are scheduled in the presence of LPDs, performance degrades relative to the case in which no LPDs are present. While random-based de-selection leads to a decline in performance, the average UE throughput of distance-based de-selection increases as

When multiple-input multiple-output (MIMO) transmission and reception are considered, the SINR contributions from interfering sources can be measured more accurately, potentially increasing the throughput of CR-based LTE systems. In particular, if massive MIMO arrays are deployed in the near future, very accurate beamforming could be used to locate specific interfering sources. The actual sensing performance and the resulting throughput gain are topics for future research.

V. CONCLUSION

In this paper, we evaluated the performance of LTE systems that use TVWS as a secondary band. In particular, we evaluated both the UE throughput and the sector throughput under interference from LPDs, an important source of interference. Although such interference significantly degrades LTE performance, a large portion of the loss can be recovered by intelligently choosing the set of scheduled UEs.