In this paper, we propose an algorithm that efficiently detects and monitors multiple ships in real time. The proposed algorithm uses morphological operations and edge information to detect and track ships. We applied a smoothing filter with a 3×3 Gaussian window and used the luminance component of the captured image instead of its RGB components. Additionally, we applied the Sobel operator for edge detection and a threshold to obtain binary images. Finally, object labeling with connectivity and morphological operations (opening and erosion) were used for ship detection. Unlike conventional methods, the proposed method is intended mainly for coastal surveillance systems and harbor monitoring systems. A system based on this method was tested with both stationary and non-stationary backgrounds, and the detection and tracking rates were more than 97% on average. Thousands of image frames from 20 different video sequences were tested in both online and offline modes, and an overall detection rate of 97.6% was achieved.

Real-time monitoring and identification of moving ships and vessels at sea is a crucial task in many coastal surveillance operations [1, 2]. Ship detection and tracking are very challenging because of the unpredictable environment and the high requirements for robustness and accuracy. Very few studies have attempted to resolve these challenges. Conventional work has mainly focused on images obtained by satellites or on temporal sequences of marine radar images [3-7].

Our system allows early and reliable detection of traffic, both cooperative vessels and stealthy intruders. It uses a single camera mounted either on a fixed platform or on a moving ship. Background subtraction is the initial stage in automated visual surveillance systems. In this study, we use a morphological “open” operation and frame subtraction for background subtraction. The results of background subtraction are used for further processing. We use the edge information of ships for their detection. The Sobel edge detector and morphological “close” operations are performed to trace the exact ship and vessel locations.

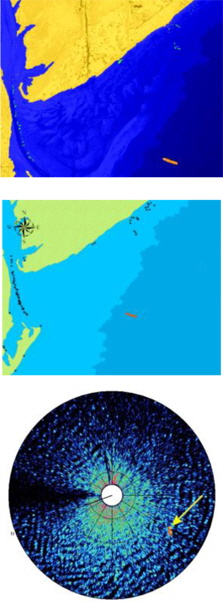

Most past work deals with images captured by satellites or with temporal sequences of marine radar images [3-7]. Researchers in the fields of automatic target detection and recognition have often employed space-based imaging for ship detection and maritime traffic surveillance [6]. Inggs and Robinson [8] investigated the use of radar range profiles as target signatures for identifying ship targets and the use of neural networks for classifying these signatures. Tello et al. [9] used a wavelet transform, obtained through multiresolution analysis, to analyze the multi-scale discontinuities of synthetic aperture radar (SAR) images and detected ship targets against a particularly noisy background. The work in [10] determined a relative improvement in the ship detection performance of polarimetric SAR over that of single-channel SAR.

In a recent work, Wang et al. [7] developed an approach based on the alpha-stable distribution in a constant false alarm rate (CFAR) ship detection algorithm for spaceborne SAR images. Fig. 1 shows a few examples of satellite images. Compared with the large body of investigations on the feasibility of satellite-based SAR for ship detection, considerably less research and development activity has been devoted to the detection and tracking of vessels using digital camera imagery.

The conventional work described above relied on satellite images and SAR images. These applications detect ships at long range, typically more than several hundred kilometers. Our work instead targets ship detection at ranges of several kilometers, as in coastal surveillance systems, which need only general-purpose CCD cameras operating at short range compared with satellite and radar applications.

In this work, a stationary and a non-stationary background are assumed for the video sequences tested [1].

Background subtraction is a commonly used class of techniques for segmenting objects of interest out of a scene in applications such as surveillance.

1) Stationary Background

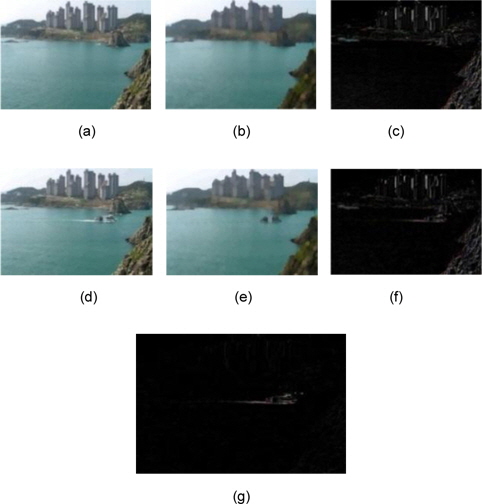

The first frame of a video sequence, which does not contain any object of interest, can be set as the initial background. Let B be the background frame (in RGB) and f the current frame (in RGB). We process both with the morphological “open” operation: applying the open operation to B and f generates the intermediate frames B1 and f1, which are then subtracted from the original frames B and f to yield B2 and f2, respectively. This step fades the unnecessary background in B2 and f2. Finally, to isolate the dynamic foreground objects and detect ships, we subtract the registered background image B2 from the processed input frame f2, as shown in Fig. 2; the resulting image is used for further processing.
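The open-and-subtract step described above can be sketched in a few lines. This is a minimal illustration, not the authors' MATLAB code: it assumes single-channel (grayscale) frames stored as lists of lists, a square 3 × 3 structuring element, and clipping of negative differences to zero. The function names (`opening`, `top_hat`, `foreground`) are hypothetical.

```python
def _window(img, r, c, k):
    """Yield the pixels in the (2k+1)x(2k+1) window around (r, c), clipped to the image."""
    rows, cols = len(img), len(img[0])
    for i in range(max(0, r - k), min(rows, r + k + 1)):
        for j in range(max(0, c - k), min(cols, c + k + 1)):
            yield img[i][j]


def erode(img, k=1):
    """Grayscale erosion: each pixel becomes the minimum of its window."""
    return [[min(_window(img, r, c, k)) for c in range(len(img[0]))]
            for r in range(len(img))]


def dilate(img, k=1):
    """Grayscale dilation: each pixel becomes the maximum of its window."""
    return [[max(_window(img, r, c, k)) for c in range(len(img[0]))]
            for r in range(len(img))]


def opening(img, k=1):
    """Morphological open = erosion followed by dilation (B -> B1, f -> f1)."""
    return dilate(erode(img, k), k)


def top_hat(img, k=1):
    """Original minus its opening (B - B1 = B2, f - f1 = f2)."""
    opened = opening(img, k)
    return [[p - o for p, o in zip(row, orow)] for row, orow in zip(img, opened)]


def foreground(background, frame, k=1):
    """Subtract the processed background B2 from the processed frame f2."""
    b2 = top_hat(background, k)
    f2 = top_hat(frame, k)
    return [[max(0, f - b) for f, b in zip(frow, brow)]
            for frow, brow in zip(f2, b2)]


# Toy scene: a bright "sky" band over a darker "sea" band.
background = [[100] * 5, [100] * 5, [50] * 5, [50] * 5, [50] * 5]
frame = [row[:] for row in background]
frame[3][2] = 200  # one bright pixel standing in for a small ship

fg = foreground(background, frame)  # only the pixel at (3, 2) stays non-zero
```

Because the opening removes only structures smaller than the structuring element, the window size has to be chosen relative to the expected ship size in pixels; the 3 × 3 window here is purely for the toy example.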

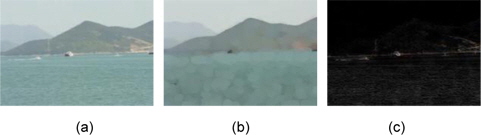

B. Smoothing, Filtering, and Morphological Processing

We employ a 3 × 3 Gaussian filter to smooth the image frames, which reduces noise and unnecessary detail. After filtering, we convert the RGB frame to grayscale. The sea region contains mostly horizontal edges, whereas vertical edges mostly belong to ship objects. Therefore, extracting only the vertical edges of each frame eliminates a considerable amount of unwanted data. This process identifies the outlines of ship objects, as well as the boundaries between the ship objects and the image background.

We employ the Sobel edge detector, which suits our case well. The Sobel operator is widely used in image processing, particularly in edge detection algorithms. Technically, it is a discrete differentiation operator that computes an approximation of the gradient of the image intensity function. At each point in an image, the Sobel operator yields either the corresponding gradient vector or the norm of this vector. It convolves the image with a small, separable, integer-valued filter in the horizontal and vertical directions and therefore has a low computational cost. Edge detectors require a threshold value; we used a single fixed threshold that proved suitable for all the tested video sequences.

After extracting the edges, we apply the morphological “close” operation to obtain the approximate locations and the partial contours of the ships. The ship then appears as a white patch, whereas the rest of the image is blank, as shown in Fig. 4. To remove the remaining noise and generate an image frame with only the ship components, we convert the frame into a binary image with an appropriate threshold value, set carefully to avoid eliminating the ship components.
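The smoothing, grayscale conversion, vertical-edge extraction, and thresholding steps can be sketched as follows. Several details are assumptions because the text does not specify them: the 3 × 3 binomial kernel (1-2-1, divided by 16) stands in for the Gaussian window, the luminance weights are the ITU-R BT.601 values, image borders are handled by replicating edge pixels, and the threshold of 400 is chosen only for the toy image. The sketch converts to luminance before smoothing; since both operations are linear, the order does not change the result. The morphological “close” step is omitted here.

```python
# 3x3 binomial approximation of a Gaussian (assumed; weights sum to 16).
GAUSS_3X3 = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]
# Sobel kernel for the horizontal derivative, which responds to vertical edges.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]


def luminance(rgb):
    """Convert rows of (R, G, B) tuples to luminance using BT.601 weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb]


def filter3x3(img, kernel, scale=1.0):
    """Apply a 3x3 kernel to img, replicating border pixels."""
    rows, cols = len(img), len(img[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr = min(max(r + dr, 0), rows - 1)
                    cc = min(max(c + dc, 0), cols - 1)
                    acc += kernel[dr + 1][dc + 1] * img[rr][cc]
            out[r][c] = acc * scale
    return out


def vertical_edge_mask(rgb, threshold):
    """Return a binary mask (1 = vertical edge) for an RGB image."""
    gray = luminance(rgb)
    smooth = filter3x3(gray, GAUSS_3X3, 1.0 / 16.0)
    gx = filter3x3(smooth, SOBEL_X)
    return [[1 if abs(v) >= threshold else 0 for v in row] for row in gx]


BLACK, WHITE = (0, 0, 0), (255, 255, 255)

# Vertical boundary (ship-like): dark on the left, bright on the right.
vertical = [[BLACK] * 3 + [WHITE] * 3 for _ in range(5)]
# Horizontal boundary (horizon-like): dark on top, bright below.
horizontal = [[BLACK] * 6 for _ in range(3)] + [[WHITE] * 6 for _ in range(3)]

ship_mask = vertical_edge_mask(vertical, threshold=400)    # edge columns marked
sea_mask = vertical_edge_mask(horizontal, threshold=400)   # all zeros
```

The horizon-like image produces an empty mask because the horizontal-derivative kernel gives no response to a purely horizontal boundary, which is the property the text relies on to suppress the sea region.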

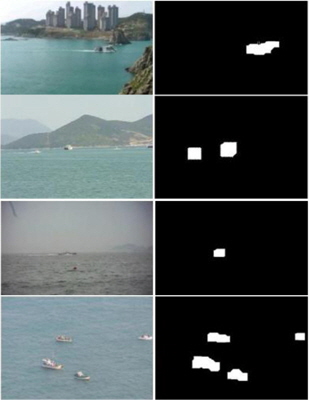

C. Ship Labeling and Tracking

To detect and track ships, we apply a labeling algorithm to the resultant images to count the number of ships; that is, by determining the number of connected components, we can find all objects of interest in the frame. Connected-component labeling (alternatively, connected-component analysis) is an algorithmic application of graph theory, wherein the subsets of connected components are uniquely labeled on the basis of a given heuristic. Connected-component labeling is used in computer vision for detecting connected regions in binary digital images, but color images and data with higher dimensionality can be processed as well. When integrated into an image recognition system or human–computer interaction interface, connected-component labeling can be performed on various types of information. Usually, it is performed on binary images obtained via a thresholding step. An algorithm traverses the image and labels its vertices on the basis of the connectivity and relative values of their neighbors. Connectivity is determined on the basis of the medium; for example, image graphs can be 4-connected or 8-connected. Subsequent to labeling, a graph may be partitioned into subsets, after which the original information can be recovered and processed. Because of occlusions, two components are occasionally merged and treated as one entity while the labeling algorithm executes. Marking the vessels in consecutive frames yields the tracking procedure.
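As an illustration of the labeling step, the following is a minimal breadth-first connected-component labeling sketch for a binary image stored as a list of lists. It is one common way to implement labeling, not necessarily the routine used in the experiments; the function name `label_components` and the toy mask are hypothetical.

```python
from collections import deque

OFFSETS_4 = [(-1, 0), (1, 0), (0, -1), (0, 1)]
OFFSETS_8 = OFFSETS_4 + [(-1, -1), (-1, 1), (1, -1), (1, 1)]


def label_components(binary, connectivity=8):
    """Label connected foreground regions; return (label image, component count)."""
    if connectivity == 4:
        offsets = OFFSETS_4
    elif connectivity == 8:
        offsets = OFFSETS_8
    else:
        raise ValueError("connectivity must be 4 or 8")

    rows, cols = len(binary), len(binary[0])
    labels = [[0] * cols for _ in range(rows)]  # 0 means background / unlabeled
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not binary[r][c] or labels[r][c]:
                continue
            # New component found: flood-fill it with the next label.
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for dy, dx in offsets:
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and binary[ny][nx] and not labels[ny][nx]):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


# Two blobs that touch only diagonally, plus one isolated pixel.
mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 1],
]

_, n4 = label_components(mask, connectivity=4)  # diagonal neighbors stay separate
_, n8 = label_components(mask, connectivity=8)  # diagonal neighbors merge
```

The toy mask shows why the choice of connectivity matters for counting: under 4-connectivity the diagonally touching blobs are counted separately, whereas under 8-connectivity they merge into one component, much like the occlusion case mentioned in the text.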

The proposed algorithm was executed on a PC equipped with an Intel E2200 @ 2.20 GHz CPU and 1 GB of RAM. All our algorithms were implemented in the MATLAB environment. The system was built on digitally captured video streams of the YUV, AVI, MOV, and MP4 formats with input image resolutions of 1920 × 1080, 720 × 480, 640 × 480, and 320 × 240. Twenty different video sequences were used for testing. All video sequences were captured from a distance of 1.5–3 km and at several different angles.

The video sequences selected for ship tracking were of different light intensities and were captured on different days with different backgrounds.

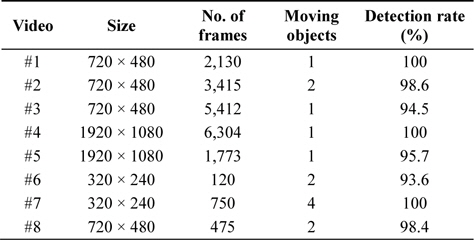

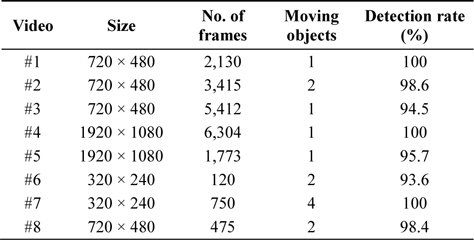

Table 1 lists the results of the proposed algorithm for eight different video sequences. The results reveal that the proposed algorithm is not affected by shadows or object backgrounds. Our test video sequences comprised a minimum of 100 frames and a maximum of 5,000 frames, and the overall detection rate for the tested sequences was 97.6%. The overall tracking rate can exceed 97.6% because the detection rate was computed frame by frame: reflections of light and waves can make a ship disappear for a few frames, yet the ship can still be tracked across them. Applying tighter conditions to the intermediate frames in which no ship is detected would increase the effective detection rate for ship tracking.
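The difference between the per-frame detection rate and the tracking rate can be made concrete with a small sketch. The gap-bridging rule below is a hypothetical interpretation of the "tighter conditions" for intermediate frames, not a procedure stated in the text: a run of missed frames is counted as tracked when it is bounded by detections on both sides and is no longer than an assumed `max_gap`. The detection sequence is made-up toy data.

```python
def detection_rate(detected):
    """Percentage of frames in which the ship was detected."""
    return 100.0 * sum(detected) / len(detected)


def bridge_gaps(detected, max_gap=2):
    """Mark short runs of missed frames between two detections as tracked.

    max_gap is an assumed parameter: the longest run of consecutive
    misses that is still attributed to the same ship.
    """
    tracked = list(detected)
    last_hit = None
    for i, hit in enumerate(detected):
        if not hit:
            continue
        if last_hit is not None and 0 < i - last_hit - 1 <= max_gap:
            for j in range(last_hit + 1, i):
                tracked[j] = True
        last_hit = i
    return tracked


# Toy per-frame results: True = ship detected in that frame.
per_frame = [True, True, False, True, False, False, False, True, True, True]

raw_rate = detection_rate(per_frame)                             # 6 of 10 frames
tracked_rate = detection_rate(bridge_gaps(per_frame, max_gap=2))  # 7 of 10 frames
```

In this toy sequence the single missed frame is bridged, while the run of three misses exceeds `max_gap` and is left as a tracking loss.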

Table 1. Experimental results of the proposed algorithm

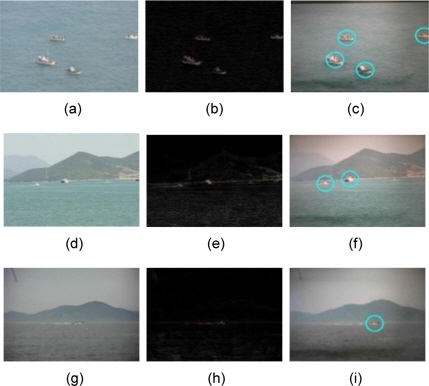

Fig. 5 shows the intermediate experimental results of each phase of the proposed algorithm. The images in the first column (Fig. 5(a), (d), and (g)) are the original image frames; those in the second column (Fig. 5(b), (e), and (h)) are the frames after background subtraction; and those in the third column (Fig. 5(c), (f), and (i)) are the final detected frames, in which the blue circles indicate the detected ships.

Ship detection and tracking are among the most computationally demanding object detection tasks. In this paper, we proposed an adaptive algorithm for detecting and tracking moving ships that is based on edge information and employs morphological operations. Ship detection is challenging because of variations in water color, ship color, weather conditions (rainy, cloudy, sunny, and snowy), and lighting conditions between day and night. Nevertheless, our system can accurately detect ships within a region of interest, and it is not affected by shadows or the ship background. The implementation was successful, with an overall identification rate of 97.6% and a processing time of 0.319 seconds. The proposed system can be a solution to the problem of monitoring secured coastal areas. In the future, we expect the popularity of hybrid solutions capable of predicting and applying the best combination of detectors and trackers to increase.