How to provide relevant information that fits users’ information needs has been a core research question for information scientists and information professionals for several decades. Since the late 1990s, when online information became prolific on the Internet, researchers and practitioners in information science have sought to better understand people’s assessment of information credibility, quality, and cognitive authority (e.g., Rieh, 2002), which were initially identified as primary user-centered relevance criteria in a variety of studies (e.g., Wang & Soergel, 1998). As empirical findings on credibility accumulated, several researchers began to recognize credibility assessment as a research agenda in its own right, beyond relevance criteria studies (Rieh, 2010; Rieh & Danielson, 2007).

The motivation for studying the credibility of online information stems primarily from dramatic changes in today’s digital environments, which allow people to create content easily using a variety of web publishing and social media tools. Assessing the credibility of user-generated content in social media, such as blogs, microblogs (e.g., Twitter), wikis (e.g., Wikipedia), social news sites, and social networking sites, poses an additional layer of challenges for online users because they may not be able to rely on their primary criterion for credibility judgments: examining the characteristics of original sources (Rieh, 2002).

There are at least three important problems with current credibility research. First, there is no consensus on how to define credibility, so each credibility study begins with its own assumptions and conceptualization. Related concepts such as quality, authority, and reliability of information are sometimes used interchangeably without clear distinctions. More problematic still, credibility is conceptualized differently across academic disciplines. For instance, communication researchers, Human-Computer Interaction (HCI) researchers, and information science researchers have adopted distinct conceptual frameworks for credibility research. Communication researchers (e.g., Johnson & Kaye, 1998) often use media-based frameworks to investigate the relative credibility of various media channels (e.g., online news, blogs, wikis, magazines, and TV). HCI researchers, such as Fogg (2003), focus on evaluating website credibility, identifying specific elements that improve or hurt credibility perceptions. Credibility research within information science, on the other hand, tends to use a content-based credibility framework (e.g., Sundin & Francke, 2009). Despite these field-specific foundations, the credibility of online information has drawn renewed attention across research communities as people increasingly select what information to use based on their judgments of its credibility.

The second problem is that most previous credibility studies have examined credibility assessment from the perspective of information consumers, whose primary information activities are seeking and reading. However, as people engage in increasingly diverse online information activities in the Web 2.0 environment, they act not only as information seekers or readers but also as commentators, bloggers, user-generated content providers, raters, and voters. The multiple roles that users play in today’s digital environments have not been well incorporated into credibility studies.

The third problem is that most credibility research pays little attention to the contexts in which online information is used. Previous studies tend to treat credibility assessment as a binary question, showing participants online content from web pages that contain particular features and asking whether they trust that information (Lim, 2013; Xu, 2013). Alternatively, researchers have often surveyed respondents about their general perceptions of online information from particular media or websites (Flanagin & Metzger, 2010; Johnson & Kaye, 1998; Metzger, Flanagin, & Medders, 2010). Both approaches miss opportunities to understand the contexts associated with credibility assessment.

Taken together, these three problems suggest that it is critical to examine what leads users to use online information in the first place and what they are trying to achieve by using it. People eventually do something with the information they interact with: they use it to create user-generated content, to learn something new, to make decisions, to solve problems, or to entertain themselves. Depending on the contexts in which they interact with information, their constructs of credibility, their concern about credibility, the effort they put into credibility assessment, and the strategies they use for credibility judgments may differ.

This paper differs from previous credibility research in emphasizing the importance of examining the contexts of people’s information interaction. Saracevic (2010) formulated four axioms of context, and the first clearly captures the approach taken in this paper: “One cannot not have a context in information interaction.” Saracevic states that every interaction between an information user and an information system takes place within a context, while also noting that context is an ill-defined term. Given the diversity of information activities, topics, user goals, and intentions, this study aims to lay groundwork that draws the attention of information science researchers in general, and credibility researchers in particular, to credibility assessment in context. The research is motivated by the goal of demonstrating how contexts influence credibility assessment.

This paper addresses three research questions:

Research Question 1: To what extent do people’s goals and intentions when conducting online information activities influence their perception of trust?

Research Question 2: To what extent does the topic of information affect people’s credibility perceptions of traditional media content (TMC) and user-generated content (UGC)?

Research Question 3: To what extent does the amount of effort that people invest in credibility assessment differ depending on the type of online activity (information search vs. content creation)?

Credibility is a complex, multidimensional concept. Trustworthiness and expertise have long been recognized as the two key dimensions of credibility perception (Hovland, Janis, & Kelley, 1953). Most researchers agree that trustworthiness captures the perceived goodness or morality of the source, often phrased in terms of being truthful, fair, and unbiased (Fogg, 2003). Numerous studies have identified additional related concepts, including currency, fairness, accuracy, trustfulness, completeness, precision, objectivity, and informativeness (Arazy & Kopak, 2011; Rieh, 2010). Fogg (2003) defines credibility in terms of “believability” and emphasizes that “it doesn’t reside in an object, a person, or a piece of information” (p. 122), because credibility is a perceived quality. Rieh (2010) defines information credibility as “people’s assessment of whether information is trustworthy based on their own expertise and knowledge” (p. 1338). This definition highlights two important aspects of credibility assessment: (1) it is people who ultimately judge information credibility; and (2) credibility assessment therefore relies on subjective perceptions.

Hilligoss and Rieh (2008) termed these multiple concepts “credibility constructs,” showing that individual users conceptualize and define credibility in their own terms and according to their own beliefs and understandings. Rieh et al. (2010) adopted Hilligoss and Rieh’s (2008) framework and tested eleven credibility constructs using a diary survey method. They found that people rated the importance of credibility constructs differently depending on the type of information. For user-generated content, traditional credibility constructs such as the authoritativeness and expertise of the author were not considered important. On the other hand, trustworthiness, reliability, accuracy, and completeness remained important credibility constructs across different types of information objects, such as traditional websites, social media, and multimedia content (Rieh et al., 2010).

2.2. Context in Information Behavior Research

Courtright (2007) reviews the literature on context in information behavior research. According to Courtright, despite a growing emphasis on the concept of context, there is little agreement on how context, as a frame of reference for information behavior, is established by users and how it operates in information behavior research. Courtright introduces a typology of four views of context: context as container, context as constructed meaning, socially constructed context, and relational context (embeddedness). Of these four, this study takes the context-as-constructed-meaning approach, which examines context from the point of view of the information actor. Under this person-in-context approach, Courtright characterizes context as follows: “information activities are reported in relation to contextual variables and influences, largely as perceived and constructed by the information actor” (p. 287).

Hilligoss and Rieh’s (2008) unifying framework of credibility assessment includes context as a key factor influencing people’s credibility assessment in terms of constructs, heuristics, and interaction. By guiding the selection of information or limiting the applicability of certain judgments, context “creates boundaries around the information seeking activity or the credibility judgment itself” (p. 1473). In their empirical study, context often emerged as an important factor when participants, who were college students, distinguished between class and personal contexts or between entertainment purposes and health contexts. Some contexts were closely related to individuals’ goals and tasks, while others were established as social contexts. The topic of the information seeking task could also be considered a contextual factor. Hilligoss and Rieh’s conceptualization of context provides the theoretical basis for this study, in which context is investigated through three notions: (1) context as user goals and intentions; (2) context as topicality of information; and (3) context as information activities.

The data were collected from two empirical studies which employed different data collection and analysis methods. The two studies allowed the researcher to capture people’s perceptions of credibility assessment in both natural settings and lab settings.

Study 1 was a diary study designed to capture the variety of online information activities people engage in over time. Participants were recruited through a random sample of landline phone numbers belonging to residents of Michigan, U.S.A. A total of 333 individuals agreed to participate in the diary study. Participants received an email with a link to an online activity diary form five times a day over three days. After incomplete and inappropriate records were removed, the data set included 2,471 diaries submitted by the 333 respondents.

Each time they received a new email, respondents were asked to report all online activities they had engaged in during the preceding three hours. They then chose one activity to report on and answered two open-ended questions about it, including what they were trying to accomplish by conducting it, along with rating questions about their interest, confidence, and satisfaction regarding the activity. Next, they rated the extent to which they trusted the information they chose to use for this activity. Respondents were also asked to provide ratings regarding credibility constructs, heuristics, and interaction.

The first step in preparing these data for analysis was to code responses to the two open-ended questions about participants’ reported activity and their reason for conducting it. Coding schemes for these responses were developed iteratively using content analysis. Respondents’ descriptions of what they were trying to accomplish were coded in terms of goals and intentions, and behavior codes were used to represent the specific action(s) that respondents described taking. This paper reports analyses relating the goals and intentions coded from the diary entries to respondents’ trust and other credibility-related responses.

3.2. Study 2: A Lab-Based Study

Study 2 was a lab-based study designed to compare credibility assessment processes across two information activity types (information search vs. content creation), two content types (traditional media content vs. user-generated content), and four topics (health, news, products, and travel). This design enabled the researcher to control variability in the tasks, the time allotted, the physical setting, and the initial website where subjects began each task. Subjects were recruited from the general population of a small town in a Midwestern state of the U.S.A. through random phone sampling. Data were collected in individual experimental sessions with 64 subjects, who were randomly assigned to one of two experimental conditions: information search activity or content creation activity. Every subject in either condition completed four tasks, two involving user-generated content (UGC) and two involving traditional media content (TMC) on the starting website.

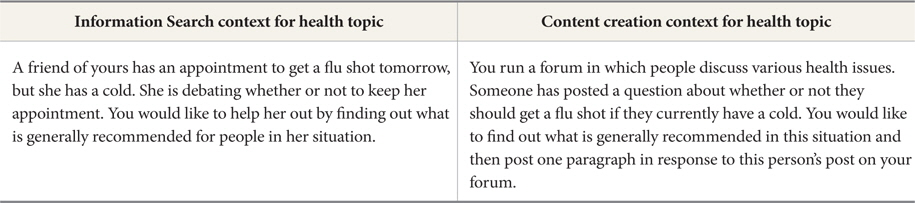

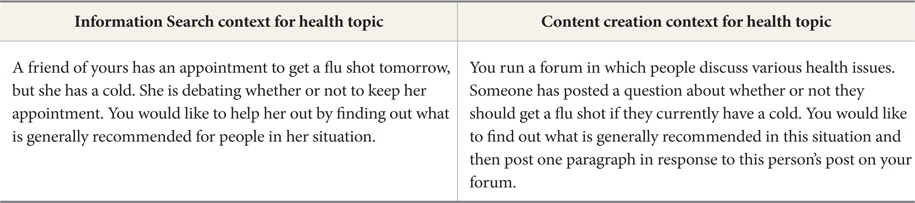

Following Borlund’s (2000) ‘simulated work task situation’ approach, 16 task scenarios were created to simulate real-life information needs. See Table 1 for sample scenarios. For information search tasks, subjects were asked to find information on an assigned topic and then copy and paste into a Word document the URLs and portions of website content they found useful for the task. For content creation tasks, subjects were asked to find information on an assigned topic and then write a paragraph in a Word document. Subjects were given up to 10 minutes for each information search task and up to 20 minutes for each content creation task. Each subject completed four tasks, one on each of four topics: health (getting a flu shot), news (international news in Japan), products (purchasing a new smartphone), and travel (a trip to Edinburgh, Scotland). The experiments were conducted one-on-one and lasted 1.5 or 2 hours, depending on the activity type (information search or content creation) to which the subject had been randomly assigned.

[Table 1.] Task Scenario Example


For each topic, two websites were selected, one offering TMC and one offering UGC, and one of the two was assigned to the subject as the starting website.

- Health
  - http://flu.gov (TMC)
  - www.healthexpertadvice.org/forum/ (UGC)
- News
  - www.cnn.com (TMC)
  - http://globalvoicesonline.org (UGC)
- Products
  - www.pcworld.com (TMC)
  - www.epinions.com (UGC)
- Travel
  - www.fodors.com (TMC)
  - www.tripadvisor.com (UGC)

Data were collected through the post-task questionnaire and the background questionnaire. The post-task questionnaire asked subjects about the credibility of online information, the tasks given to them, the effort they perceived exerting in making credibility judgments, and the perceived outcome of their search performance and search experience. The background questionnaire asked about demographic information and prior experience with various types of online activities. In addition, exit interviews were conducted with each subject to collect data about their understanding, perceptions, and credibility assessments of UGC in general. All interviews were audio-recorded and transcribed for analysis.

4.1. User Goals, Intentions, and Trust

The data for this research question were drawn from Study 1, the diary study. A user goal is the driving force that leads people to engage in information interaction (Xie, 2008), and an intention is a sub-goal that a user intends to achieve during information interaction. Both goals and intentions were identified from responses to an open-ended diary question: “What were you trying to accomplish in conducting this activity?” The diary also asked respondents about trust: “To what extent did you trust the information that you decided to select?” with a scale of 1 (not at all) to 7 (very much).
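The per-goal and per-intention averages reported in Tables 2 and 3 amount to grouping coded diary entries by category and averaging their 1-to-7 trust ratings. The Python sketch below illustrates that aggregation under stated assumptions: the record format, the helper name `mean_trust_by_category`, and the ratings themselves are hypothetical and are not drawn from the Study 1 data. It reports N alongside each mean because unequal category frequencies are what limited significance testing in this analysis.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical coded diary entries: (goal category, trust rating on a 1-7 scale).
# Illustrative values only; they are not the Study 1 data.
diary_entries = [
    ("sell a product", 7),
    ("sell a product", 6),
    ("work-related task", 7),
    ("entertain oneself", 6),
    ("entertain oneself", 5),
]


def mean_trust_by_category(entries):
    """Group trust ratings by coded category and return {category: (N, mean)}."""
    groups = defaultdict(list)
    for category, rating in entries:
        # Reject ratings that fall outside the diary's 7-point scale.
        if not 1 <= rating <= 7:
            raise ValueError(f"rating {rating} is outside the 1-7 scale")
        groups[category].append(rating)
    return {category: (len(r), mean(r)) for category, r in groups.items()}


summary = mean_trust_by_category(diary_entries)
# List categories from highest to lowest mean trust, showing N alongside M.
for category, (n, m) in sorted(summary.items(), key=lambda item: -item[1][1]):
    print(f"{category}: N={n}, M={m:.2f}")
```

The same function applies unchanged to coded intentions, since it only assumes (category, rating) pairs.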

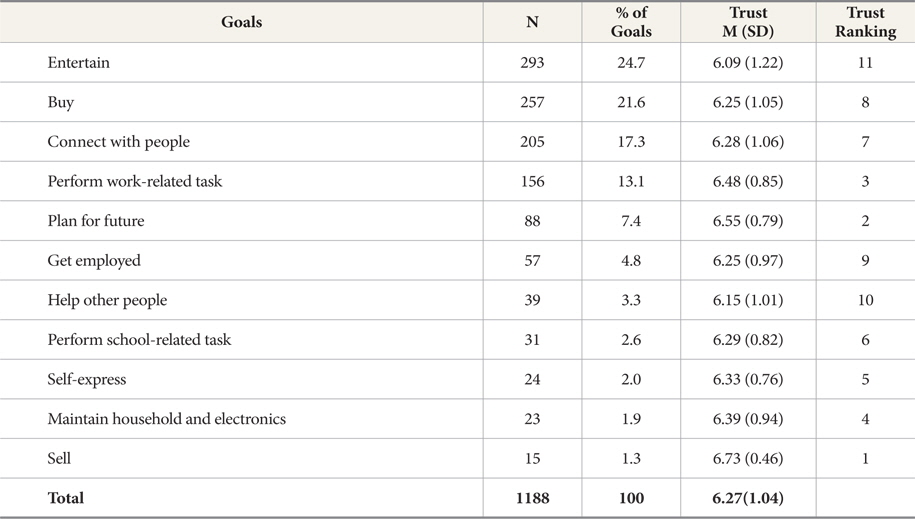

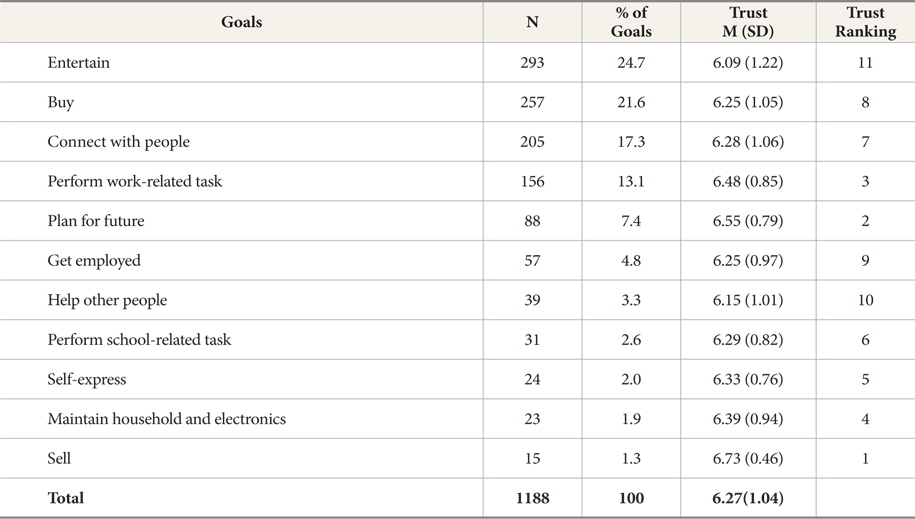

As presented in Table 2, respondents reported high levels of trust in the information they decided to use in their everyday lives: on the 1-to-7 scale, the highest average trust score across goals was 6.73, and even the lowest was above 6 (M=6.09). Respondents trusted online information most when they engaged in information activities in order to sell a product online (M=6.73). When respondents selected information while planning for the future (M=6.55) or performing a work-related task (M=6.48), they also reported higher trust than for several other goals. When their goal was to help other people (M=6.15) or to entertain themselves (M=6.09), their trust in the selected information was relatively lower.

[Table 2.] Information Activity Goals and Trust


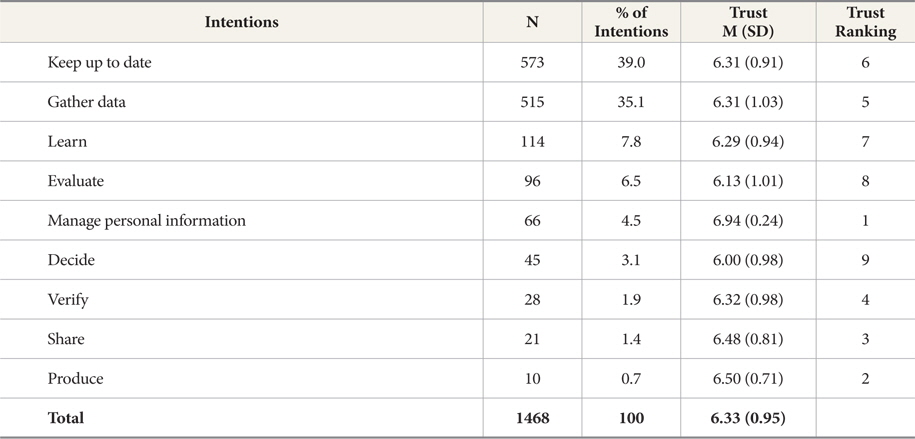

Of the nine kinds of intentions identified, keeping up to date (N=573; 39%) and gathering data (N=515; 35.1%) accounted for the majority of those reported, as seen in Table 3. Not surprisingly, respondents rated their trust highest when managing their own personal information (M=6.94). Respondents also reported high trust when they engaged in information activities in order to produce (M=6.50) or share (M=6.48) content. On the other hand, when respondents selected information with the intention of evaluating something (M=6.13) or deciding about something (M=6.00), they rated their trust in that information lower than for any other intention.

[Table 3.] Information Activity Intentions and Trust


These descriptive comparisons suggest that examining respondents’ trust perceptions with respect to goals and intentions is meaningful. However, we were not able to test the differences for statistical significance because the frequencies of the goal and intention categories differed greatly.

4.2. Topicality and Believability

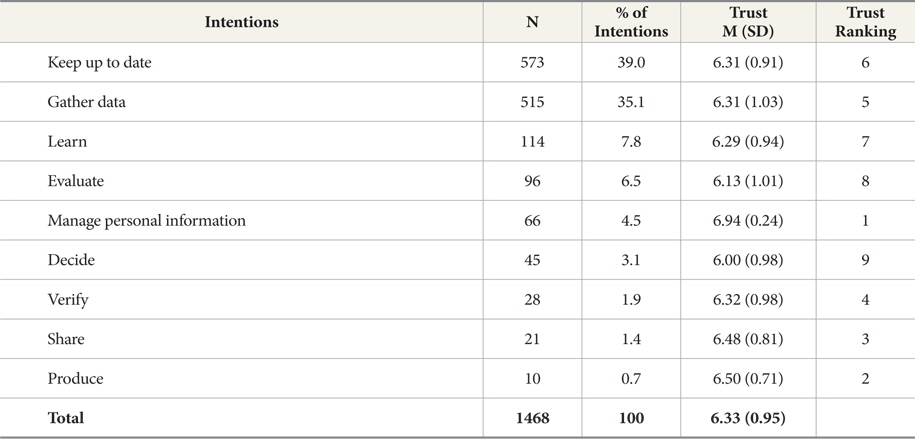

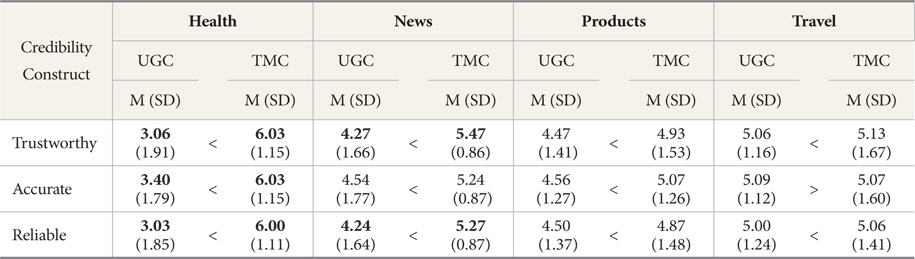

In Study 2, after completing each of the four tasks within their condition (information search or content creation), subjects were asked to rate the “believability” of the information on the website assigned to them. The post-task questionnaire measured believability with three questions, asking how trustworthy, accurate, and reliable the information was, each on a scale of 1 (not at all) to 7 (very).
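Table 4 reports the three believability items separately. If a single score per task were wanted, one simple option is an unweighted mean of the three items; this composite is an assumption made for illustration, not the paper's reported measure. The Python sketch below validates ratings against the 1-to-7 scale and averages composites within each (topic, content type) cell. The class name `BelievabilityRating`, the function `condition_means`, and the example ratings are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import fmean

SCALE_MIN, SCALE_MAX = 1, 7  # 1 = "not at all", 7 = "very"


@dataclass
class BelievabilityRating:
    """One subject's post-task ratings for one task (hypothetical structure)."""
    trustworthy: int
    accurate: int
    reliable: int

    def __post_init__(self) -> None:
        # Reject ratings that fall outside the 7-point scale.
        for name in ("trustworthy", "accurate", "reliable"):
            value = getattr(self, name)
            if not SCALE_MIN <= value <= SCALE_MAX:
                raise ValueError(f"{name}={value} is outside the {SCALE_MIN}-{SCALE_MAX} scale")

    def composite(self) -> float:
        # Assumed composite: unweighted mean of the three items.
        return fmean([self.trustworthy, self.accurate, self.reliable])


def condition_means(
    ratings: dict[tuple[str, str], list[BelievabilityRating]],
) -> dict[tuple[str, str], float]:
    """Average composite believability for each (topic, content type) cell."""
    return {cell: fmean(r.composite() for r in rs) for cell, rs in ratings.items()}


# Illustrative ratings only; these are not the study's data.
example = {
    ("health", "UGC"): [BelievabilityRating(3, 3, 2), BelievabilityRating(4, 3, 3)],
    ("health", "TMC"): [BelievabilityRating(6, 6, 7), BelievabilityRating(6, 5, 6)],
}
for (topic, content_type), m in condition_means(example).items():
    print(f"{topic} {content_type}: M={m:.2f}")
```

Keeping the three items as separate fields preserves the per-construct comparisons that Table 4 reports, while `composite()` offers an optional summary on top of them.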

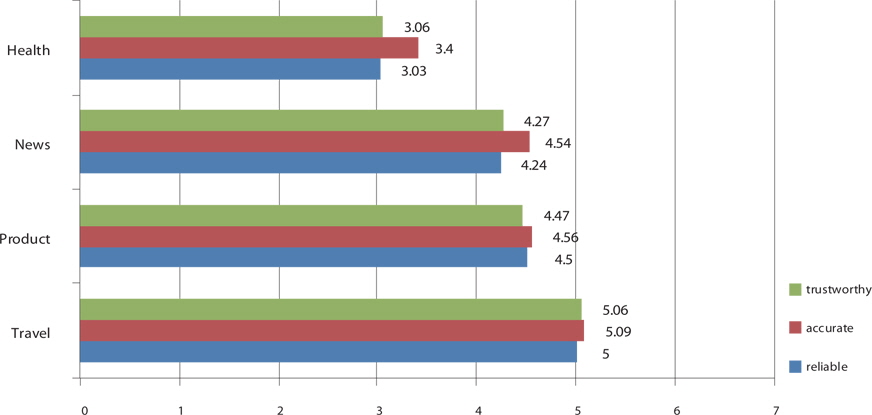

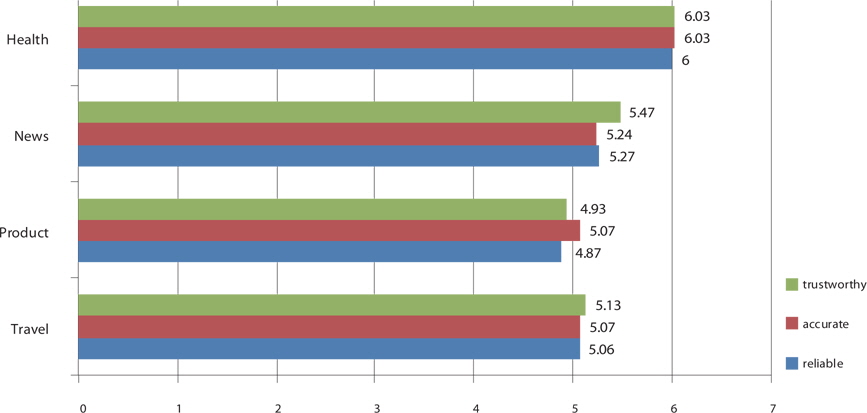

We investigated the extent to which the topic of a task (health, news, products, or travel) affects people’s credibility perceptions of user-generated content (UGC) and traditional media content (TMC). As shown in Table 4, subjects gave the lowest believability ratings to health UGC (M=3.06) and the highest to health TMC (M=6.03). The differences between health UGC and health TMC were significant for all three believability constructs: trustworthiness, accuracy, and reliability. For news information, subjects overall rated TMC higher than UGC, rating the trustworthiness and reliability of news TMC significantly higher than those of news UGC. Although subjects also rated the accuracy of news TMC (M=5.24) higher than that of news UGC (M=4.54), this difference was not significant. For product information, subjects rated TMC higher than UGC on all three believability constructs, but none of these differences were significant. Results for travel information were mixed, with virtually no difference in believability perceptions between travel UGC and travel TMC. This suggests that subjects accepted travel UGC as almost equally reliable, accurate, and trustworthy as travel TMC.

[Table 4.] Credibility Constructs by Content Type Across the Four Topics


Figure 1 compares UGC believability perceptions across the four topics. Subjects rated travel UGC as more believable than product, news, and health UGC. This may be because, when using travel information, people tend to trust UGC provided by other users who have first-hand experience with particular locations or events. Product and news UGC were perceived as somewhat more believable than health-related UGC.

As shown in Figure 2, subjects rated health TMC as more believable than TMC on the other topics. Apart from health, their believability ratings for news, travel, and product TMC did not differ greatly. Taken together, Figures 1 and 2 suggest that topic matters more when people assess the believability of UGC than when they assess the believability of TMC.

4.3. Credibility Assessment in Search and Creation Contexts

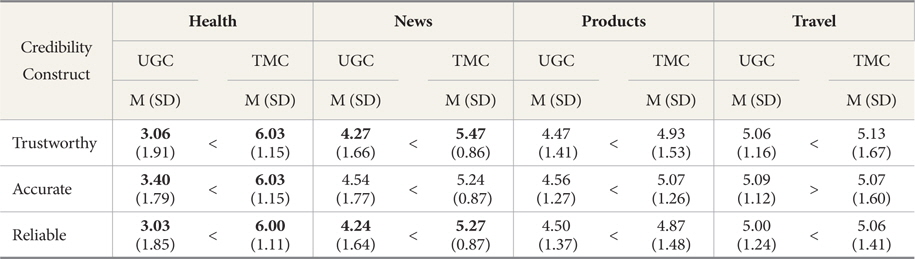

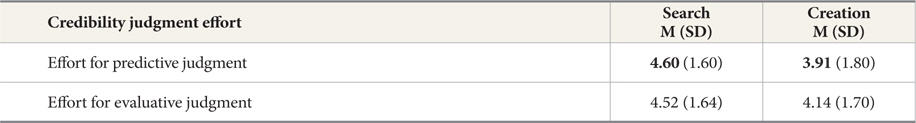

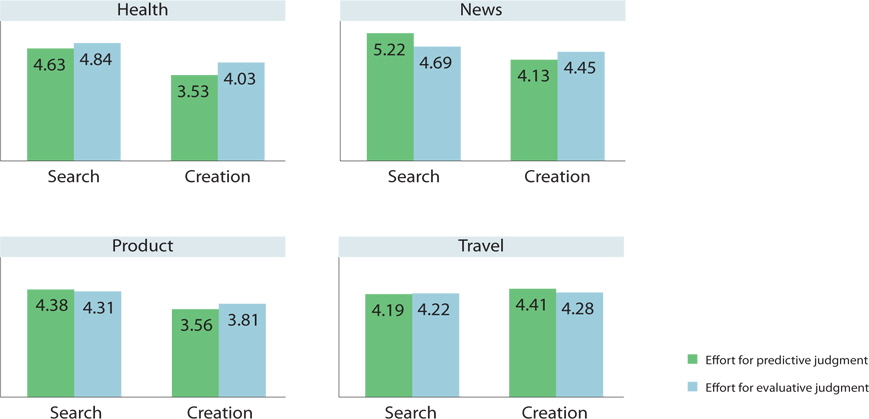

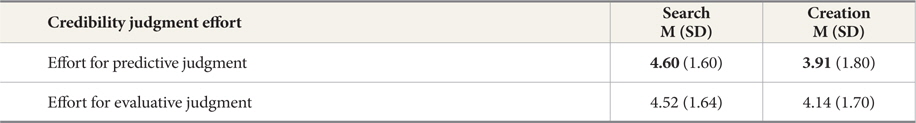

We examined the differences in the amount of effort subjects exerted for credibility judgments depending on their activity types (information search and content creation). In this study, two kinds of judgments people make in the process of information activity were investigated: predictive judgment and evaluative judgment. According to Rieh (2002), predictive judgment denotes predictions reflecting what people expect before accessing webpages, whereas evaluative judgment is made when people actually interact with the webpages.

Table 5 presents the means and standard deviations of the effort exerted for predictive and evaluative judgments in the two activity types. Overall, subjects reported exerting more effort in both predictive and evaluative judgment when performing a search activity than when performing a creation activity. In particular, effort exerted for predictive judgment differed significantly by activity type: subjects put significantly more effort into deciding which websites to visit (predictive judgment) when they were searching for new information than when they were creating new online content. We speculate that subjects in the information search condition perceived searching as their primary task and were therefore more concerned about finding credible information, because information evaluation could be seen as one of the primary actions in the information search process. Subjects in the content creation condition, on the other hand, may have perceived themselves as handling two sub-tasks, since they had to find information first and then use it to create their own content. They may therefore have considered content creation the more cognitively demanding part of the task, putting more effort into the actual content creation process while investing less effort in the preparatory activities of deciding which websites to visit and evaluating information from those websites.
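This section does not state which significance test was used for the activity-type comparison. Because subjects were randomly assigned to either the search or the creation condition, the two groups are independent; one common choice for such data, assumed here purely for illustration, is Welch's t-test, which does not require equal group variances. The Python sketch below computes Welch's t statistic and the Welch-Satterthwaite degrees of freedom with the standard library, assuming one effort score per subject. The helper name `welch_t` and the effort ratings are hypothetical, and obtaining a p-value would still require a t-distribution CDF (for example, from SciPy).

```python
from __future__ import annotations

from math import sqrt
from statistics import mean, variance


def welch_t(group_a: list[float], group_b: list[float]) -> tuple[float, float]:
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom
    for two independent samples whose variances may differ."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    # Squared standard error of each group mean (sample variance uses n - 1).
    se2_a = variance(group_a) / n_a
    se2_b = variance(group_b) / n_b
    if se2_a + se2_b == 0:
        raise ValueError("both groups have zero variance; t is undefined")
    t = (mean(group_a) - mean(group_b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (se2_a ** 2 / (n_a - 1) + se2_b ** 2 / (n_b - 1))
    return t, df


# Hypothetical predictive-judgment effort ratings, one per subject.
search_effort = [5, 6, 4, 5, 6]
creation_effort = [3, 4, 4, 3, 5]

t, df = welch_t(search_effort, creation_effort)
print(f"t = {t:.2f}, df = {df:.1f}")  # prints: t = 2.65, df = 8.0
```

A positive t here means the search group reported more effort than the creation group, matching the direction of the effect described above; with equal group sizes and variances, as in this toy data, Welch's df reduces to the pooled value of n_a + n_b - 2.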

[Table 5.] Credibility Judgment Effort by Activity Type


We also investigated whether the topic of a task influences the amount of effort subjects invested in the two activity types. Figure 3 summarizes the effect of activity type on credibility judgment effort across the topics. With the exception of travel, subjects exhibited a consistent pattern, investing more effort in making credibility judgments when performing a search activity. In particular, the differences in effort exerted for predictive judgment between search and creation were statistically significant for health, news, and products. For travel, subjects showed the opposite trend, exerting more effort in making credibility judgments when performing a content creation activity, although these differences were not statistically significant.

The major findings and implications of this study can be summarized as follows. First, comparing people’s trust perceptions across goals and intentions provided insight into credibility assessment. Numeric trust ratings alone revealed little about people’s credibility assessment because they were consistently high: even the lowest average score exceeded 6.0 on a scale of 1 (not at all) to 7 (very much). Rather than relying on numeric scores, therefore, it is more important to characterize people’s credibility assessment with respect to the variety of contexts in which they use online information in their everyday lives.

Second, people’s assessment of the credibility of UGC was influenced by the topic of the task in which they were engaged, whereas the credibility of TMC was less influenced by topic. For instance, when using health and news information, subjects had greater reservations about UGC. For travel- and product-related information, however, subjects did not rate UGC significantly lower than TMC. This finding has direct practical implications for web designers. Designers of health- and news-related websites may want to present TMC and UGC separately within a web page, explicitly labeling UGC so that users are not confused about the type of content they are interacting with. For travel and products, on the other hand, the findings suggest that TMC and UGC can complement one another. Users may wish to have both types of content available side by side so that they can validate information across them, and presenting travel and product UGC alongside related TMC may even enhance users’ credibility perceptions of that UGC.

Third, the results indicate that people invest different amounts of effort in content creation activities than in information search activities. Surprisingly, people tended to exert more effort when looking for information than when creating content. Possible explanations for this finding are offered in Section 4.3.

Examining credibility assessment in various contexts, including goals, intentions, topicality, and information activities, is a major theoretical contribution of this study. Goals, intentions, and topicality have often been considered as information seeking and use contexts in previous studies; we believe that also including different types of information activities, namely searching and content creation, is an important contribution to the field of information science. The majority of web credibility research has been conducted with respect to information seeking or news reading activities (e.g., Johnson & Kaye, 2000; Metzger, Flanagin, & Medders, 2010; Rieh, 2002), and little previous research has examined people’s credibility assessment within the process of content creation, apart from a few recent publications (St. Jean et al., 2011; Rieh et al., 2014). By conducting the study in both information searching and content creation contexts, we demonstrated that credibility assessment can be seen as a process-oriented notion rather than a matter of simple binary evaluation.

We hope that the findings of this study help researchers and practitioners gain insight into how to help users judge the credibility of UGC more effectively in the course of their everyday information activities, rather than merely determining whether users do or should believe UGC. As the volume of UGC in online environments continues to grow, more studies are needed to examine how users select UGC and assess its credibility efficiently and effectively in various information seeking and use contexts. Future research could also include more diverse types of information activities beyond searching and content creation, for instance, examining people’s credibility assessment of online information when they ask questions in online communities, social networking sites, or social Q&A sites.