Occluded objects have been an interesting research area in three-dimensional (3D) object visualization and recognition. Recently, several approaches have employed 3D imaging techniques to solve the occlusion problem [1-9]. Among them, integral imaging is one of the promising solutions, and several techniques based on integral imaging have therefore been proposed for 3D recognition of partially occluded objects. Integral imaging records and displays 3D scenes using a lenslet array. The recorded elemental images consist of many two-dimensional (2D) images with different perspectives [10-17]. In 2007, a 3D image correlator using the computational integral imaging reconstruction (CIIR) method was proposed [6]. In CIIR, plane images are generated by magnifying and superimposing the elemental images. Each plane image contains both focused and defocused images of the occlusion and the target objects, which makes it possible to recognize target objects behind an occlusion. However, the CIIR process may add blur noise to the plane images because of the interpolation used during magnification, and it imposes a high computational load. To overcome this problem, a modified CIIR-based 3D correlator using smart pixel mapping was proposed in 2008 [7]; it reduces the magnification factor and thus improves the correlation performance. However, this method still relies on a magnification process to generate the depth-converted plane images.
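The magnification-and-superimposition step of CIIR can be sketched as follows. This is a minimal NumPy illustration, not the implementation of [6] or [7]; it assumes square elemental images of p × p pixels, an integer magnification factor M (in CIIR the factor grows with reconstruction depth), nearest-neighbour enlargement, and no per-lens image inversion. The function name `ciir_plane` is hypothetical.

```python
import numpy as np

def ciir_plane(elemental: np.ndarray, n_lens: int, p: int, M: int) -> np.ndarray:
    """Reconstruct one plane image by magnifying and superimposing elemental images.

    elemental: (n_lens * p, n_lens * p) array of n_lens x n_lens elemental images.
    M: integer magnification factor (assumed; it depends on the reconstruction depth).
    """
    size = (n_lens - 1) * p + M * p
    acc = np.zeros((size, size))   # running sum of overlapping magnified images
    cnt = np.zeros((size, size))   # how many magnified images cover each pixel
    for i in range(n_lens):
        for j in range(n_lens):
            block = elemental[i * p:(i + 1) * p, j * p:(j + 1) * p]
            # Nearest-neighbour magnification: each pixel becomes an M x M patch.
            mag = np.kron(block, np.ones((M, M)))
            # Magnified images are placed one lens pitch (p pixels) apart.
            acc[i * p:i * p + M * p, j * p:j * p + M * p] += mag
            cnt[i * p:i * p + M * p, j * p:j * p + M * p] += 1
    # Normalize by the overlap count so intensities are comparable between planes.
    return acc / cnt

plane = ciir_plane(np.ones((12, 12)), n_lens=3, p=4, M=2)
```

Practical CIIR implementations interpolate rather than replicate pixels when enlarging, and the cost of building an (M·p)-pixel image per lens for every plane is the computational load referred to above.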

Recently, a depth slicing method using a convolution property between periodic functions (CPPF) has been proposed to produce a plane image array similar to the plane images of the previous CIIR method [18, 19]. In integral imaging with a lenslet array, an elemental image has a periodic property that depends on object depth, and the CPPF method takes advantage of this depth-dependent spatial periodicity. The CPPF is performed by convolution between the elemental image and the 2D

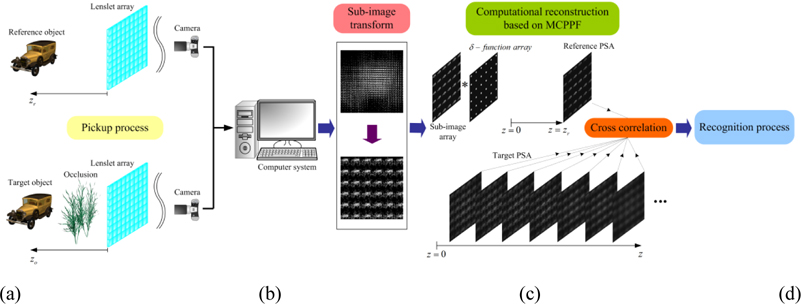

In this paper, we propose a 3D image correlator using computational reconstruction based on a modified CPPF for partially occluded object recognition. We introduce a modified CPPF (MCPPF) method for sub-images that produces plane sub-image arrays (PSAs) without the magnification and superimposition processes used in conventional methods. In the proposed correlator, elemental images of the reference and target objects are captured by an image sensor through the lenslet array. The recorded elemental images are then transformed into sub-images, each of which contains a different perspective according to the viewing direction. The proposed MCPPF method is applied to these sub-image arrays to reconstruct the 3D PSAs. Only the target PSA reconstructed on the plane where the target object was originally located contains clearly focused perspectives; target PSAs reconstructed on other planes contain a mixture of focused and blurred images. 3D object recognition is performed through cross-correlations between the reference and target PSAs. To show the feasibility of the proposed method, preliminary experiments on target objects were carried out, and the results are presented.

II. PROPOSED MCPPF-BASED 3D IMAGE CORRELATOR

Figure 1 shows the schematic diagram of the proposed method. The method consists of four processes: (1) pickup, (2) sub-image transform, (3) computational reconstruction using MCPPF, and (4) recognition.

The first process is the pickup of 3D objects, which is the same as the conventional pickup process in integral imaging: a lenslet array is used to capture the 3D objects. We assume that a reference object

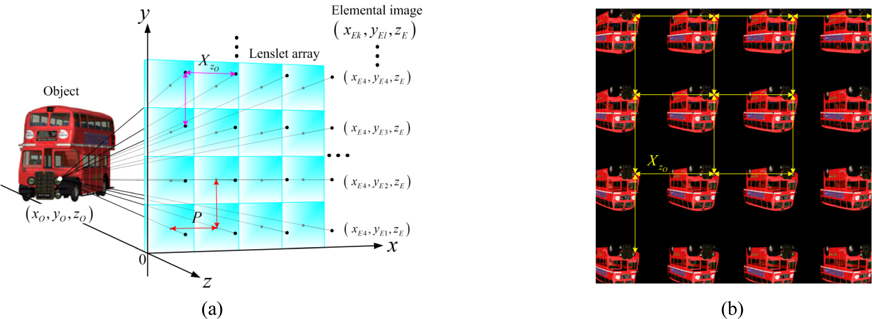

The conventional direct pickup process of an integral imaging system is based on ray optics. The geometrical relationship between a point object and its corresponding point images on the elemental image plane is shown in Fig. 2(a). For a conventional integral imaging system with a planar lenslet array, this geometrical relation in 2D form is given by

In Fig. 2(a) and Eq. (1), the origin of the coordinates is the edge of the elemental lens located at the bottom of the lenslet array. The object point (

where

From this geometrical relationship, the 1D spatial period, which depends on object depth, is given by

Equation (3) implies that the intensity pattern in the elemental images corresponding to an object at a specific depth is imaged at a constant interval on the imaging plane, as illustrated in Fig. 2(b). Thus, the lenslet array in an integral imaging system can be regarded as a converter that maps depth information in 3D space into 2D periodic information, and vice versa.
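The constant interval can be checked numerically. The sketch below assumes a pinhole model in which lens k is centred at (k + 0.5)p, measured from the edge of the bottom lens, and the sensor lies a gap g behind the lenslet array; under that model consecutive point images are spaced p(z + g)/z apart. The function names are hypothetical, and Eq. (3) may be written in a different notation.

```python
import numpy as np

def point_image_positions(y: float, z: float, p: float, g: float, n_lens: int) -> np.ndarray:
    """Image positions of a point object at (y, z) behind each pinhole lens.

    Assumed model: lens k is centred at (k + 0.5) * p, measured from the edge of
    the bottom lens, and the sensor lies a gap g behind the lenslet array.
    """
    centres = (np.arange(n_lens) + 0.5) * p
    # The ray through each lens centre is inverted and scaled by g / z (similar triangles).
    return centres + (centres - y) * g / z

def spatial_period(z: float, p: float, g: float) -> float:
    """Spacing between consecutive point images, p * (z + g) / z, under the same model."""
    return p * (z + g) / z

x = point_image_positions(y=12.0, z=200.0, p=5.0, g=15.0, n_lens=30)
print(np.allclose(np.diff(x), spatial_period(200.0, 5.0, 15.0)))   # True: constant interval
```

Because the period depends only on z (not on the lateral position y), every point at the same depth produces the same period, which is what allows depth to be encoded as 2D periodicity.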

The recorded elemental images are not shift invariant; that is, the small images projected into the elemental images change in scale when the 3D object is placed at different locations. For this reason, we apply the sub-image transform to the recorded elemental images. The sub-image array provides shift invariance and contains the whole projected image of the elemental images [5]. The sub-image transform can be performed by either single-pixel extraction or multiple-pixel extraction. Because our intention is to apply the concept of the conventional CPPF to sub-images for the proposed 3D image correlator, we modify the conventional CPPF technique for sub-images.
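Single-pixel extraction can be expressed as a reshape and axis swap: sub-image (a, b) collects pixel (a, b) from every elemental image. The sketch below assumes square elemental images of `n_pix` × `n_pix` pixels behind an `n_lens` × `n_lens` lenslet array; the function name is hypothetical.

```python
import numpy as np

def subimage_transform(elemental: np.ndarray, n_lens: int, n_pix: int) -> np.ndarray:
    """Single-pixel extraction: sub-image (a, b) gathers pixel (a, b) of every elemental image.

    elemental: (n_lens * n_pix, n_lens * n_pix) array of n_lens x n_lens elemental
    images, each n_pix x n_pix pixels. Returns an array of n_pix x n_pix sub-images,
    each n_lens x n_lens pixels, with the same overall shape.
    """
    # Axes: (lens row i, pixel row a, lens col j, pixel col b)
    e = elemental.reshape(n_lens, n_pix, n_lens, n_pix)
    # Reorder to (pixel row a, lens row i, pixel col b, lens col j)
    s = e.transpose(1, 0, 3, 2)
    return s.reshape(n_pix * n_lens, n_pix * n_lens)

E = np.arange(36).reshape(6, 6)          # 3 x 3 lenses, 2 x 2 pixels per lens
S = subimage_transform(E, n_lens=3, n_pix=2)
```

The transform only permutes pixels, so no information is lost; each sub-image is an orthographic-like view along one viewing direction.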

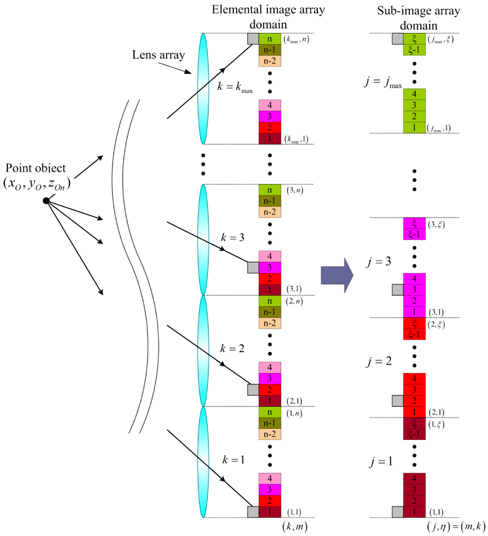

We now explain the principle of the proposed MCPPF method for the sub-image array. The lateral resolution of the elemental image array

where

To show the periodic property of the sub-image array clearly, let us consider the condition

A pixel position on the sub-image array can be represented as the ordered pair (

Hence, the 1D spatial period in the sub-image domain is given by

For example, in Fig. 3, suppose
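A small numerical check of the periodicity in the sub-image domain is sketched below in 1D. It assumes single-pixel extraction with sub-images laid out one after another, and a hypothetical point whose image moves by an integer `d` pixels from one elemental image to the next (the depth-dependent shift). Under this layout, the point's copies in the sub-image array are spaced `d * n_lens + 1` pixels apart; this layout and the integer-shift condition are assumptions, not necessarily the exact form of Eq. (7).

```python
import numpy as np

def subimage_1d(elemental: np.ndarray, n_lens: int, n_pix: int) -> np.ndarray:
    """1D single-pixel extraction: sub-image s holds pixel s of every elemental image."""
    return elemental.reshape(n_lens, n_pix).T.reshape(-1)

n_lens, n_pix = 8, 16
u0, d = 1, 2   # hypothetical start offset and integer per-lens shift of one point
elemental = np.zeros(n_lens * n_pix)
for k in range(n_lens):
    elemental[k * n_pix + u0 + k * d] = 1.0   # image of the point behind lens k

sub = subimage_1d(elemental, n_lens, n_pix)
positions = np.flatnonzero(sub)
spacing = np.diff(positions)
print(spacing)   # [17 17 17 17 17 17 17]
```

A different depth changes `d` and hence the spacing, so each depth corresponds to its own period in the sub-image domain.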

In this paper, the multiple-pixel extraction method is used to increase the resolution of the sub-images [4]. Because multiple-pixel extraction combines blocks taken from each elemental image, the spatial period of each pixel within a block is the same as in the single-pixel extraction method. Therefore, Eq. (7) can also be applied to sub-images generated by multiple-pixel extraction.
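Multiple-pixel extraction can be sketched in the same reshape-and-transpose style: sub-image (a, b) is tiled from the B × B block at block position (a, b) of every elemental image, and B = 1 reduces to single-pixel extraction. This is a minimal sketch under assumed conventions (square elemental images, B dividing the pixels per lens); the function name is hypothetical and not necessarily the block layout of [4].

```python
import numpy as np

def multi_pixel_subimages(elemental: np.ndarray, n_lens: int, n_pix: int, B: int) -> np.ndarray:
    """Multiple-pixel extraction: sub-image (a, b) tiles the B x B block at block
    position (a, b) of every elemental image.

    Returns (n_pix // B) x (n_pix // B) sub-images, each (n_lens * B) x (n_lens * B) pixels.
    """
    if n_pix % B:
        raise ValueError("block size B must divide n_pix")
    nb = n_pix // B
    # Axes: (lens row i, block row a, row-in-block r, lens col j, block col b, col-in-block c)
    e = elemental.reshape(n_lens, nb, B, n_lens, nb, B)
    # Reorder so that each sub-image (a, b) is a grid of lenses (i, j), each giving a B x B block.
    s = e.transpose(1, 0, 2, 4, 3, 5)
    return s.reshape(nb * n_lens * B, nb * n_lens * B)

E = np.arange(144).reshape(12, 12)   # 3 x 3 lenses, 4 x 4 pixels per lens
S = multi_pixel_subimages(E, n_lens=3, n_pix=4, B=2)
```

Within each block the relative pixel positions are preserved, which is why the per-pixel spatial period matches that of single-pixel extraction.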

2.3. Computational Reconstruction using MCPPF

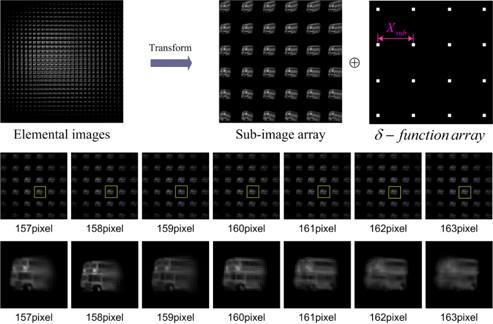

In the third process, we apply the MCPPF technique to the transformed sub-images to generate 3D PSAs for object recognition. In the transformed sub-image array, the sub-images have a spatially periodic property whose period depends on the depth of the object.
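The idea of exploiting depth-dependent periodicity through convolution can be illustrated in 1D. The sketch below assumes the kernel is a periodic array of unit delta functions whose spacing equals the period associated with one depth; this kernel choice, the periods 17 and 19, and all function names are assumptions for illustration, not the authors' MCPPF implementation. Point images with the matching period add up coherently (peak 1.0), while those from another depth stay spread out (peak 1/8 here).

```python
import numpy as np

def comb_kernel(period: int, n_delta: int) -> np.ndarray:
    """1D periodic delta array: n_delta unit impulses spaced `period` samples apart."""
    kernel = np.zeros((n_delta - 1) * period + 1)
    kernel[::period] = 1.0
    return kernel

def depth_slice(signal: np.ndarray, period: int, n_delta: int) -> np.ndarray:
    """Convolve with the comb and normalize; components with a matching period add coherently."""
    return np.convolve(signal, comb_kernel(period, n_delta)) / n_delta

def point_train(period: int, n: int, start: int, length: int) -> np.ndarray:
    """Hypothetical test signal: n unit point images spaced `period` samples apart."""
    s = np.zeros(length)
    s[start + period * np.arange(n)] = 1.0
    return s

n = 8
target = point_train(17, n, 3, 200)     # object at a depth that gives period 17
occluder = point_train(19, n, 5, 200)   # occluder at a depth that gives period 19
focused = depth_slice(target, 17, n).max()      # matching period: all n copies align
defocused = depth_slice(occluder, 17, n).max()  # mismatched period: copies never overlap
```

In 2D the kernel would become a grid of delta functions, and sweeping the period corresponds to sweeping the reconstruction depth.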

Most depth extraction and recognition methods follow the reverse of the process by which multiple 2D images are obtained from a 3D scene. In contrast, the MCPPF uses the superimposing property of convolution between a set of periodic functions and periodic

The intensity of a 2D image may be written as

Although the object intensity is continuously distributed along the

The 3D image reconstruction process using MCPPF is illustrated in Fig. 4. The pickup conditions for the elemental images are as follows: the focal length and diameter of each elemental lens are 15 mm and 5 mm, respectively; the lenslet array consists of 30×30 lenslets; and the elemental image array has a resolution of 900×900 pixels. The 3D object is located along the

Using the MCPPF-based computational reconstruction, we reconstruct the reference and target PSAs. First, the reference PSA is reconstructed on the known

Once a target PSA

where * denotes the complex conjugate. It is seen that the correlation peak

III. EXPERIMENTAL RESULTS AND DISCUSSIONS

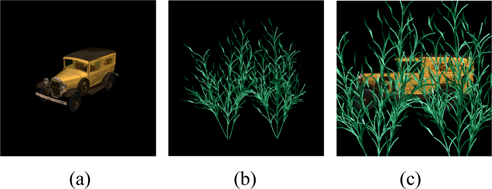

To demonstrate the feasibility of the proposed 3D image correlator, we performed a preliminary experiment with 3D objects in space. A 3D object (a car), shown in Fig. 5(a), was used as the reference test object, and the occlusion (a bundle of plants) is shown in Fig. 5(b). Figure 5(c) shows the camera view of the object and the occlusion as arranged in the object field.

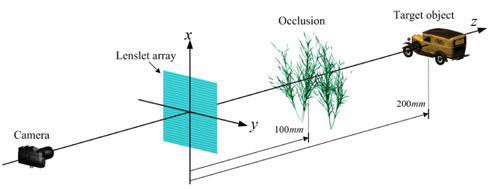

The experimental setup is shown in Fig. 6. The 3D object and the occlusion are located 200 mm and 100 mm from the lenslet array, respectively. The lenslet array consists of 30×30 lenslets, each with a diameter of 5 mm and a focal length of 15 mm.
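The quoted parameters allow a quick estimate of how differently the car and the plants are encoded. The sketch below assumes that the 900×900-pixel elemental image array of the Fig. 4 example also applies here, that the lens diameter equals the lens pitch, and that the sensor sits one focal length behind the array under a pinhole model; all three are assumptions, and the variable names are hypothetical.

```python
# Parameters quoted in the text; array_px is borrowed from the Fig. 4 example (assumption).
pitch_mm = 5.0      # lenslet diameter, assumed equal to the lens pitch
focal_mm = 15.0     # lenslet focal length
n_lens = 30         # lenslets per side
array_px = 900      # pixels per side of the elemental image array (assumption)

n_pix = array_px // n_lens        # pixels behind each lenslet
pixel_mm = pitch_mm / n_pix       # sensor pixel pitch in mm

def per_lens_shift_px(z_mm: float, gap_mm: float = focal_mm) -> float:
    """Shift (in pixels) of a point's image from one elemental image to the next under
    a pinhole model with the sensor gap_mm behind the array (gap = focal length assumed).

    Equals (pitch * gap / z) / pixel pitch, which simplifies to n_pix * gap / z.
    """
    return n_pix * gap_mm / z_mm

object_shift = per_lens_shift_px(200.0)     # car at 200 mm
occluder_shift = per_lens_shift_px(100.0)   # plants at 100 mm
```

Under these assumptions each lenslet covers 30 pixels, and the car and the plants shift by about 2.25 and 4.5 pixels per lens, respectively. Their different spatial periods are what a depth-matched reconstruction separates.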

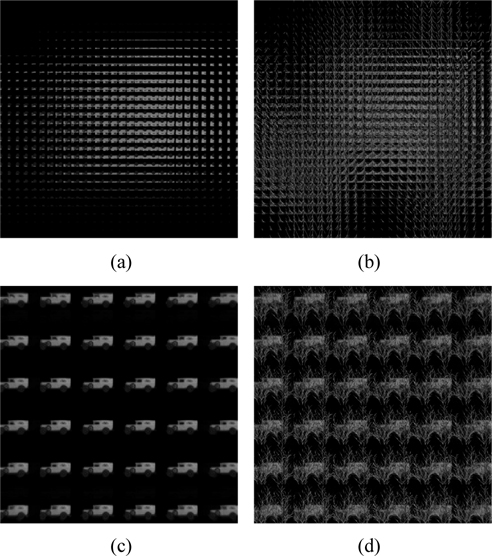

Using the experimental setup of Fig. 6, we first recorded the elemental images of the reference objects, which were located at a known position

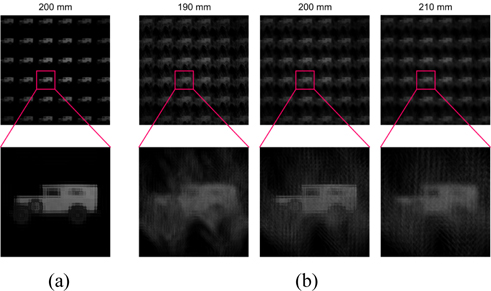

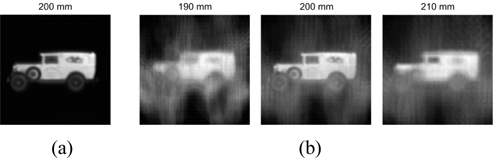

Figure 8(a) shows the PSA of the reference object reconstructed at a depth of 200 mm by applying the MCPPF-based computational reconstruction to the sub-images of Fig. 7(c). Using the sub-images of the partially occluded object shown in Fig. 7(d), we generated target PSAs at distances from 170 mm to 230 mm in steps of 5 mm. Some examples are shown in Fig. 8(b).
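The depth sweep can be sketched as a loop over the 170 mm to 230 mm grid that correlates the reference PSA with the target PSA at each depth and reports the depth of the largest peak. In this minimal sketch the correlation is an FFT-based cross-correlation (an assumed form), and `fake_target` is a hypothetical synthetic stand-in for the MCPPF reconstruction, sharp at 200 mm and increasingly blurred away from it; it is not experimental data.

```python
import numpy as np

def correlation_peak(reference: np.ndarray, target: np.ndarray) -> float:
    """Maximum of an FFT-based circular cross-correlation (assumed correlation form)."""
    c = np.fft.ifft2(np.fft.fft2(target) * np.conj(np.fft.fft2(reference)))
    return float(np.real(c).max())

def depth_sweep(reference, target_psa_at, depths):
    """Correlate the reference with the target PSA at each depth.

    target_psa_at: hypothetical callable, depth -> target PSA.
    Returns peaks normalized to their maximum and the depth of the largest peak.
    """
    peaks = np.array([correlation_peak(reference, target_psa_at(z)) for z in depths])
    return peaks / peaks.max(), depths[int(np.argmax(peaks))]

# Synthetic stand-in: sharp at 200 mm, box-blurred more strongly with distance from it.
rng = np.random.default_rng(0)
ref = rng.standard_normal((64, 64))

def fake_target(z):
    w = 1 + abs(z - 200) // 5   # blur width grows with defocus
    return np.mean([np.roll(ref, s, axis=1) for s in range(w)], axis=0)

depths = np.arange(170, 235, 5)   # 170 mm to 230 mm in 5 mm steps
normalized, best = depth_sweep(ref, fake_target, depths)
```

Normalizing by the largest peak mirrors how correlation curves along distance are usually plotted, so that curves from different objects or methods can share one axis.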

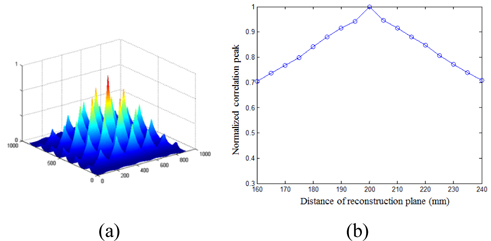

Using the reference PSA and the target PSAs, we measured the correlation performance of the proposed method with the correlation operation of Eq. (9), correlating the reference PSA with each target PSA in the series. The results are shown in Figs. 9 and 10. Figure 9 shows the auto-correlation results for the reference object of Fig. 5(a). Because the PSAs have an image-array structure, the correlation output is itself an array of peaks, and the maximum correlation peak among them was used for object recognition. The curve of correlation peaks from the proposed method along the distance

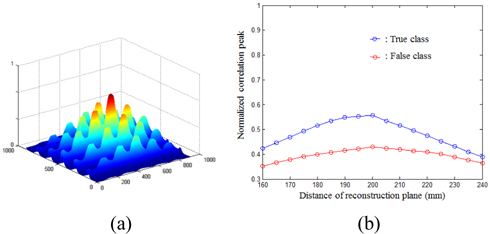

The correlation result for the partially occluded object of Fig. 5(c) is shown in Fig. 10(a). The curves of maximum correlation peaks for two different cars (True car and False car) were calculated and are presented in Fig. 10(b).
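One common way to compute a correlation whose definition involves the complex conjugate, as Eq. (9) does, is through FFTs. The sketch below returns the full correlation array and the value and location of its largest peak; the exact normalization of Eq. (9) may differ, and the function names are hypothetical.

```python
import numpy as np

def correlation_array(reference: np.ndarray, target: np.ndarray) -> np.ndarray:
    """Circular cross-correlation via FFT: IFFT{ FFT(target) * conj(FFT(reference)) }."""
    spectrum = np.fft.fft2(target) * np.conj(np.fft.fft2(reference))
    return np.real(np.fft.ifft2(spectrum))

def max_peak(reference: np.ndarray, target: np.ndarray):
    """Value and (row, col) location of the largest correlation peak."""
    c = correlation_array(reference, target)
    idx = np.unravel_index(np.argmax(c), c.shape)
    return float(c[idx]), tuple(int(i) for i in idx)

rng = np.random.default_rng(1)
ref = rng.standard_normal((32, 32))
tgt = np.roll(ref, (3, 5), axis=(0, 1))   # the same pattern, circularly shifted
value, location = max_peak(ref, tgt)
```

For a shifted copy of the reference, the peak appears at the shift and equals the reference energy; with PSAs, the array structure produces several such peaks, and taking the maximum selects the best-matching one.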

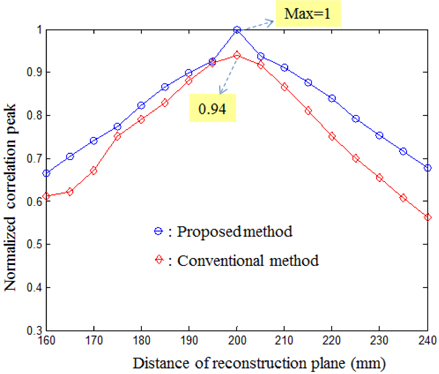

In addition, we compared the proposed method with the conventional CIIR-based method [7]. The comparison experiments were performed under the same conditions, using the same recorded elemental images to generate the CIIR images. The CIIR images for the reference object and the unknown target object are shown in Fig. 11. Figure 12 compares the correlation peaks for the partially occluded object obtained by the proposed and conventional methods. Each correlation peak was normalized by the maximum correlation peak obtained with the proposed method; with this normalization, the maximum correlation peak of the conventional method was approximately 0.94. The proposed method therefore provides both a higher and a sharper correlation peak than the conventional method, because it avoids the blurring caused by the magnification and superimposition processes of CIIR.

In conclusion, we proposed a new 3D image correlator using computational reconstruction based on MCPPF for partially occluded object recognition. In the proposed correlator, PSAs generated by MCPPF are used for 3D object recognition, so a partially occluded 3D object can be recognized from its maximum correlation peak with the reference object. Preliminary experiments demonstrated the usefulness of the proposed method in comparison with the conventional CIIR-based method.