Serious games are computer games designed not only for entertainment but also for achieving a special purpose [1]. Although they have a short history compared with other computer games, their popularity is already evident. Serious games are currently being developed in a wide range of fields, and their market volume and demand increase each year. In 2012, the world market volume of serious games was expected to reach US $51.5 billion [2].

Today, serious games have diverse applications in fields such as the military, government, education, business, and health care, and in the future, their application is expected to expand to even more fields. A wide variety of terms have been used to describe learning programs based on games, including “edugame,” “edutainment,” and “game learning.” The current trend is to represent these concepts under the term “serious game.”

Educational serious games were first developed to increase the effectiveness of student learning by exploiting the properties of conventional computer games, namely, that they are interesting and fun and induce flow. Among the many types of serious games, educational serious games currently hold the highest market share. According to 2009 statistics, educational serious games accounted for over 30% of the United States serious game market and more than 50% of South Korea’s market [2].

Nevertheless, quality evaluation standards for educational serious games have not yet been established, either internationally or within Korea. As a result, users have difficulty selecting educational serious games. Additionally, game developers frequently design serious game products without considering specific quality characteristics or quality evaluation metrics, which can significantly weaken the market competitiveness of their products.

Although some existing software quality evaluation standards have been developed, they are not suitable for use in the quality assessment of educational serious games because they fail to take into account specific characteristics of such games.

Thus, we considered it necessary to develop a quality assessment standard for educational serious games. To this end, we designed a development framework and used it to identify quality evaluation elements [1]. The objectives of this study are twofold. First, it seeks to provide quality assessment criteria that help users select educational serious games. Second, it aims to supply developers with standardized quality criteria for producing high-quality educational serious games in a highly competitive market. The remainder of this paper is organized as follows. Section II presents an overview of related works and existing standards of software quality assessment. Section III explains the design of the quality evaluation elements. Section IV describes the development of our quality assessment standard model. Section V presents the quality evaluation metrics. Finally, Section VI presents the conclusions of our study.

This section presents an overview of serious games and the previous literature that has addressed them. It also summarizes the existing quality assessment standards.

Serious games are designed for a special purpose in training or education. By playing serious games, users can both have fun and learn specific content effectively. The software design includes elements of both entertainment and pedagogy [3]. Serious games can be classified according to various criteria, such as application purpose, market, game mode, and target users. Zyda [4] notes that serious games can be applied in fields such as health care, public policy, strategy, communications, defense, training, and education. Michael and Chen [1] classify them by market into military, government, educational, corporate, and healthcare games, with “politics, religion, and art games” forming a single additional category. A wide variety of serious game types have now been developed, and both positive awareness of and demand for them continue to increase.


B. Standards of Quality Assessment

Approaches to software quality management fall into two types. The first holds that if a software organization’s development methods and procedures are appropriate, it will produce high-quality products, so this perspective emphasizes quality assessment of the development process itself. However, appropriate development methods and procedures do not guarantee product quality, so the second perspective emphasizes quality assessment of the product. Based on these viewpoints, two kinds of software quality evaluation models have been developed and applied in IEEE [5]. The former viewpoint is represented by models and international standards such as the Capability Maturity Model (CMM), ISO/IEC 15504 (Software Process Improvement & Capability Determination [SPICE]), and ISO/IEC 19796-1. Examples of the latter are ISO/IEC 9126, ISO/IEC 25051, ISO/IEC 25040, and ISO/IEC 25000. The CMM consists of five levels: Initial, Repeatable, Defined, Managed, and Optimizing [6]. ISO/IEC 15504 identifies five process categories: customer/supplier, engineering, supporting, management, and organization [7].
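As an illustration only, the two viewpoints and the five CMM maturity levels listed above can be captured in a small Python data structure. The names `QUALITY_VIEWPOINTS`, `CMMLevel`, and `viewpoint_of` are hypothetical. The sketch records the classification stated in this section and encodes the CMM levels as an ordered enumeration, so that, for example, Defined compares as less mature than Managed.

```python
from enum import IntEnum

# Hypothetical encoding of the two quality-management viewpoints described above.
QUALITY_VIEWPOINTS = {
    "process": ["CMM", "ISO/IEC 15504", "ISO/IEC 19796-1"],
    "product": ["ISO/IEC 9126", "ISO/IEC 25051", "ISO/IEC 25040", "ISO/IEC 25000"],
}


class CMMLevel(IntEnum):
    """The five CMM maturity levels, ordered from least to most mature."""
    INITIAL = 1
    REPEATABLE = 2
    DEFINED = 3
    MANAGED = 4
    OPTIMIZING = 5


def viewpoint_of(standard: str) -> str:
    """Return 'process' or 'product' for a standard listed above."""
    for viewpoint, standards in QUALITY_VIEWPOINTS.items():
        if standard in standards:
            return viewpoint
    raise KeyError(f"unknown standard: {standard}")


if __name__ == "__main__":
    print(viewpoint_of("ISO/IEC 15504"))        # process
    print(CMMLevel.DEFINED < CMMLevel.MANAGED)  # True
```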

The ISO/IEC 19796-1 standard is a quality assessment approach for learning, education, and training. Its process model consists of needs analysis, framework analysis, conception/design, development/production, implementation, learning process, and evaluation/optimization. These seven processes are divided into a total of 38 sub-processes [8].

The ISO/IEC 9126 standard includes 6 main characteristics and 27 sub-characteristics [9-11]. ISO/IEC 25051 is used to evaluate software package quality and conforms to the quality model of the ISO/IEC 9126 standard. ISO/IEC 25051 classifies quality requirements into those for the product description, the user documentation, and the programs and data [12]. ISO/IEC 25040 covers the steps of establishing evaluation requirements as well as specifying, designing, and executing the evaluation. This standard model is appropriate for the quality evaluation of software products by developers, acquirers, and evaluators [13]. ISO/IEC 25000 is a software evaluation model that integrates and enhances existing international standards and was developed to replace the ISO/IEC 9126 and ISO/IEC 14598 series [14].
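For reference, the ISO/IEC 9126 quality model summarized above can be written out as a mapping from characteristics to sub-characteristics. This minimal sketch assumes the 2001 edition of ISO/IEC 9126-1, including its per-characteristic compliance sub-characteristics, which is how the total reaches 27. The names `ISO_9126_MODEL` and `characteristic_of` are hypothetical.

```python
# ISO/IEC 9126-1 (2001) quality model: 6 characteristics, 27 sub-characteristics.
ISO_9126_MODEL = {
    "Functionality": ["Suitability", "Accuracy", "Interoperability", "Security",
                      "Functionality Compliance"],
    "Reliability": ["Maturity", "Fault Tolerance", "Recoverability",
                    "Reliability Compliance"],
    "Usability": ["Understandability", "Learnability", "Operability",
                  "Attractiveness", "Usability Compliance"],
    "Efficiency": ["Time Behavior", "Resource Utilization", "Efficiency Compliance"],
    "Maintainability": ["Analyzability", "Changeability", "Stability", "Testability",
                        "Maintainability Compliance"],
    "Portability": ["Adaptability", "Installability", "Co-existence", "Replaceability",
                    "Portability Compliance"],
}


def characteristic_of(sub_characteristic: str):
    """Return the main characteristic that owns a sub-characteristic, or None."""
    for characteristic, subs in ISO_9126_MODEL.items():
        if sub_characteristic in subs:
            return characteristic
    return None


if __name__ == "__main__":
    n_sub = sum(len(subs) for subs in ISO_9126_MODEL.values())
    print(len(ISO_9126_MODEL), n_sub)            # 6 27
    print(characteristic_of("Fault Tolerance"))  # Reliability
```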

TTAS.KO-11.0078 applies to mobile game software, and TTAS.KO-11.0059 applies to Web-based software. Both are representative Korean quality evaluation standards for software.

TTAS.KO-11.0078 divides its elements into technical and non-technical elements. For technical elements, it provides a quality model of 6 main characteristics and 25 sub-characteristics that considers both general software attributes and mobile software attributes. For non-technical elements, it provides a quality model of 4 main characteristics and 11 sub-characteristics, which can be used to evaluate both multimedia properties and service properties [15].

TTAS.KO-11.0059 differs from other standards in that it considers the security properties of software. Its model lists 6 main characteristics, namely usability, stability, interoperability, efficiency, security, and maintainability, and 23 sub-characteristics [16].

III. DESIGN OF THE QUALITY EVALUATION ELEMENTS

The major purpose of our work is to develop quality evaluation standards for educational serious game products. In this section, we therefore describe the elements needed to develop such standards.

Educational serious games have features associated with multiple factors, including software, content, gaming, and pedagogy. Thus, when developing the quality elements of an evaluation standard for educational serious games, technical and non-technical evaluation areas should be distinguished.

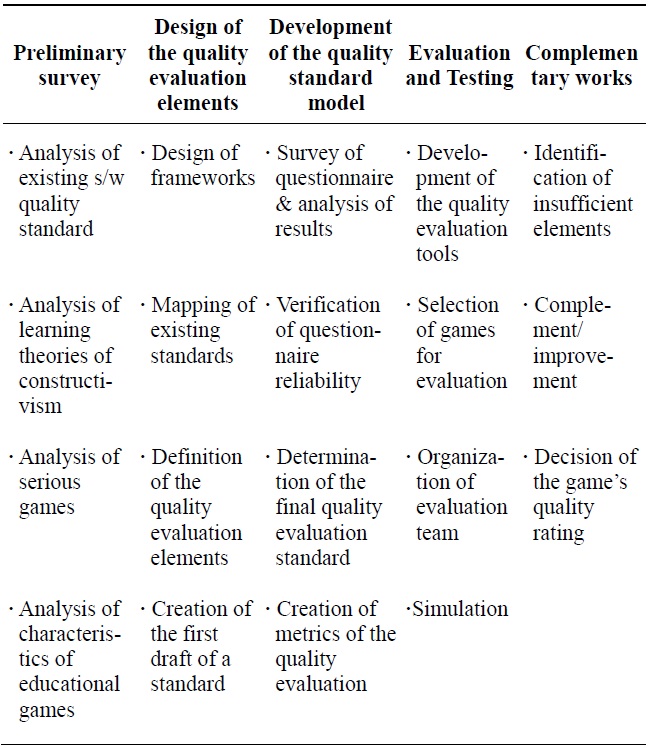

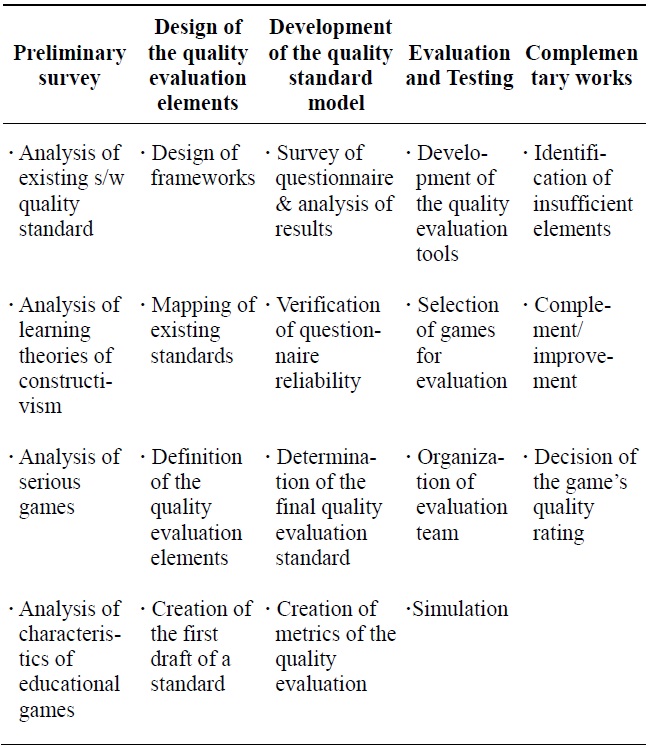

[Table 1.] Frameworks of development


Technical evaluation areas should cover the quality evaluation elements needed for the functional or technical aspects of educational serious game software. In contrast, non-technical evaluation areas should include quality assessment elements for content, game, and pedagogical features. We adopt this distinction between technical and non-technical areas in developing our quality evaluation elements.

Table 1 shows the procedure for developing a quality assessment standard for educational serious games.

The procedure consists of five steps. In this paper, however, we discuss only the first three. The remaining two steps will be described in a future study.


B. Mapping of Existing Standards

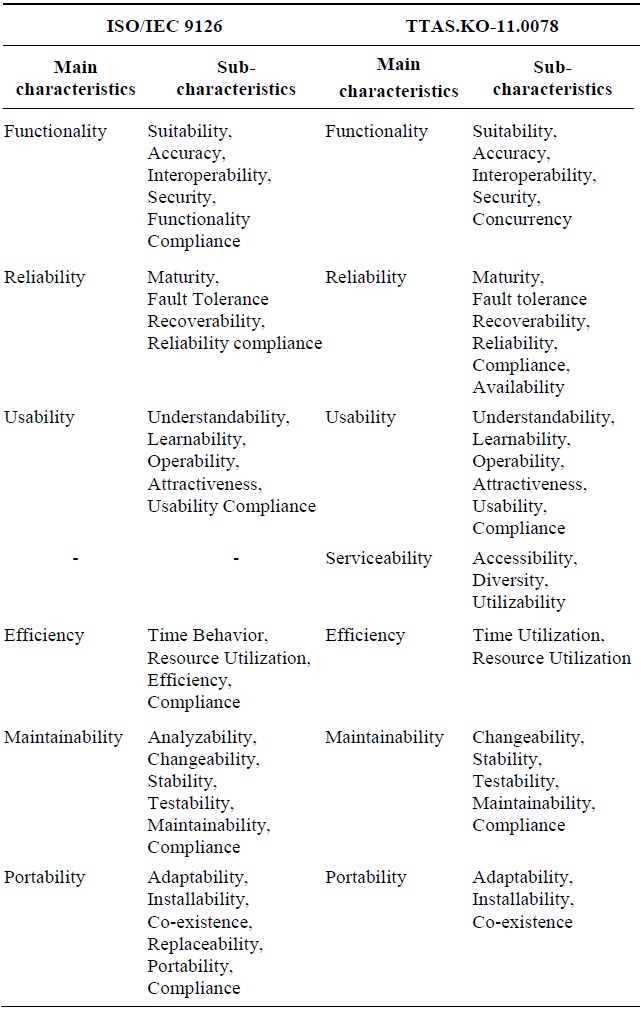

To develop the quality evaluation standard, we began by mapping existing standards, namely the international standard ISO/IEC 9126 and the Korean standard TTAS.KO-11.0078, to ensure the objectivity and reliability of the elements used. Based on the mapping results, we extracted the necessary elements and incorporated them into our standard. These elements are used to evaluate the technical areas of educational serious games.

The main concern of our research is to develop quality evaluation standards for educational serious game software products. We selected ISO/IEC 9126 and TTAS.KO-11.0078 from among several existing quality assessment standards for the following reasons. First, we selected ISO/IEC 9126 because three other standards comply with it and it is currently the most widely used international standard. We chose TTAS.KO-11.0078 rather than TTAS.KO-11.0059 because the latter contains many enhanced security elements, and for this reason we regarded TTAS.KO-11.0078 as the more reasonable choice. Moreover, many elements of TTAS.KO-11.0078 are consistent with those of ISO/IEC 9126. Second, we expect the developed standard model to be used mainly in Korea, so it is practical to choose a Korean standard. Further, we hope to expand our standard into an international standard or, at least, to use it as a guide in the development of an international standard model. It is therefore wise to comply with one of the international standards. Table 2 shows the results of mapping the two standards. These results will be used as elements for evaluating the technical quality of educational serious games.

[Table 2.] A result of mapping for ISO/IEC 9126* and TTAS.KO-11.0078 [11, 15]


As shown in Table 2, six main elements are common to both standards: functionality, reliability, usability, efficiency, maintainability, and portability. The standards also contain 19 sub-elements that are the same or similar. We extracted these 6 main elements and 19 sub-elements and incorporated them into our standard. In addition, we combined Time Behavior from ISO/IEC 9126 and Time Utilization from TTAS.KO-11.0078 into a single sub-element, Behavior Utilization, which we placed under Efficiency in our standard.

We also added a new sub-element, Extensibility, under Efficiency in our standard. This element considers whether the software allows users to change the language settings of the game. An extensibility element is required for the following reasons. Some types of education, such as environmental education, mathematics, and science, are in demand internationally rather than in a single country. Educational serious games that can be played in different languages can easily be extended to other countries, which realizes One Source Multi-Use in a broad sense. Accordingly, we expect both an increase in a game’s global marketability and savings in development costs due to content reusability.

Additionally, we incorporated all sub-elements of Service from TTAS.KO-11.0078 by re-labeling them as Serviceability and placing them in our model under Usability.


C. Definition of the Quality Evaluation Elements

For evaluating the technical quality of educational serious games, the proposed standard contains the 6 main elements and 19 sub-elements derived from the mapping of ISO/IEC 9126 and TTAS.KO-11.0078 described above. For elements extracted from the existing standards, we retain their original definitions. The elements that we combined, added, or re-labeled are defined as follows.

1) Serviceability: the product’s ability to provide additional services, including answers to user questions and resolution of inconveniences

2) Behavior Utilization: the product’s ability to handle a specific function (without problems) within a limited time

3) Extensibility: the product’s ability to extend to various language versions easily

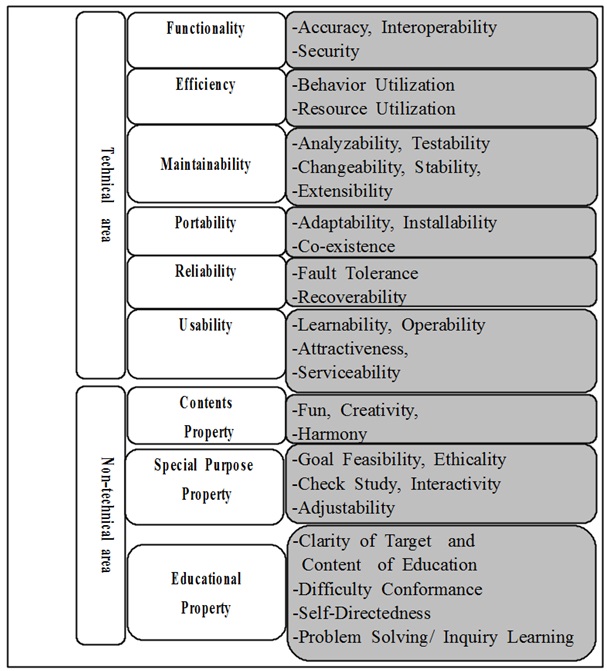

Elements of the non-technical evaluation areas should reflect the characteristics specific to educational serious games. Therefore, to establish these elements, we referred to constructivist learning theory, international e-learning quality standards, and the curriculum of the Korean Ministry of Education, Science and Technology.

As a result, we determined 3 main elements and 12 sub-elements for the non-technical areas of our standard. These elements are defined as follows.

4) Content Property: used to evaluate the quality of multimedia factors and content factors of the educational serious game

Fun: the extent to which the educational serious game includes fun (i.e., entertainment) factors that encourage repeated play

Creativity: whether the scenario, characters, and objects in the game are unique or innovative

Harmony: whether the game contents are appropriate for the game goals

5) Special Purpose Property: used to evaluate the quality of common game elements (e.g., estimation, control, and ethics)

Goal Feasibility: the user’s satisfaction level, goal achievement level, and persistence of learning effects after playing the game

Ethicality: whether the game materials and content are free of moral or ethical problems

Interactivity: whether the game provides functions for bidirectional interaction among users or between the user and the system

Adjustability: whether the game has functions, controllable by a supervisor or the system, for restraining the user’s flow.

Check Study: whether the game has functions to check the effects of learning or training.

6) Educational Property: used to evaluate the quality of elements associated with pedagogy

Clarity of Targets and Content of Education: whether the game conforms exactly to the standards set by the Korean Ministry of Education, Science and Technology for educational purpose, educational content, and learner selection criteria

Difficulty Conformance: how the game’s level of difficulty and amount of learning compare to the Korean Ministry of Education, Science and Technology standard

Self-Directedness: whether the game contains self-directed elements that encourage experienced users to play again

Problem Solving/Inquiry Learning: whether the game offers a variety of problem-solving methods and inquiry functions for users to solve a specific problem.

Accordingly, we proposed the first draft of a standard for the quality evaluation of educational serious games. The draft standard was composed of 9 main elements and 31 sub-elements.
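A short sketch can check the draft's element counts against the definitions above. It lists the three non-technical main elements and their sub-elements as defined in this section. For the technical side, it records only the six main elements and the count of 19 sub-elements stated earlier, because the individual technical sub-element names appear in Table 2, which is not reproduced in the text. All identifiers are hypothetical.

```python
# Non-technical main elements and sub-elements, as defined in this section.
NON_TECHNICAL = {
    "Content Property": ["Fun", "Creativity", "Harmony"],
    "Special Purpose Property": ["Goal Feasibility", "Ethicality", "Interactivity",
                                 "Adjustability", "Check Study"],
    "Educational Property": ["Clarity of Targets and Content of Education",
                             "Difficulty Conformance", "Self-Directedness",
                             "Problem Solving/Inquiry Learning"],
}

# Technical main elements from the mapping; only the sub-element count is encoded.
TECHNICAL_MAIN = ["Functionality", "Reliability", "Usability", "Efficiency",
                  "Maintainability", "Portability"]
TECHNICAL_SUB_COUNT = 19


def draft_size():
    """Return (number of main elements, number of sub-elements) of the draft."""
    main = len(TECHNICAL_MAIN) + len(NON_TECHNICAL)
    sub = TECHNICAL_SUB_COUNT + sum(len(subs) for subs in NON_TECHNICAL.values())
    return main, sub


if __name__ == "__main__":
    print(draft_size())  # (9, 31)
```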

IV. DEVELOPMENT OF THE QUALITY EVALUATION STANDARD MODEL

Before finalizing the quality evaluation standard for educational serious games, we first verified the need for each element in the proposed draft standard. To this end, we conducted a survey on the need for each element. Participants rated each element on a five-point Likert scale: 1, very unnecessary; 2, unnecessary; 3, neutral; 4, necessary; and 5, very necessary.

The survey participants were divided into an expert group and a general users group. The expert group included instructors, game developers, and teaching-learning designers with at least three years of experience in their field.

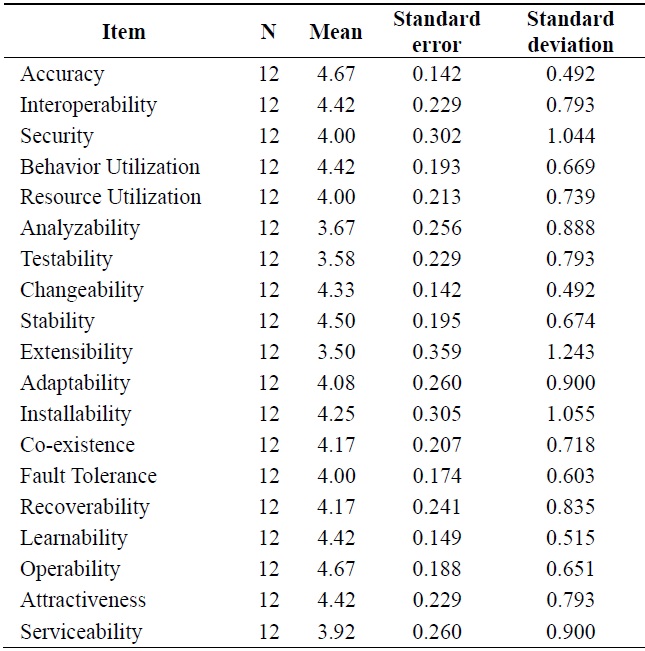

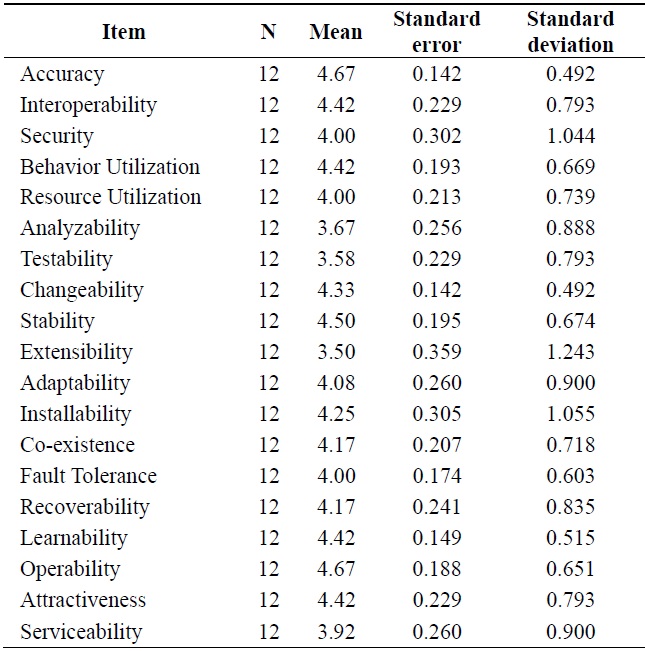

[Table 3.] Statistics on the technical quality evaluation elements


The expert group was surveyed on all elements of the draft standard, including both technical and non-technical elements. They were also provided space at the end of the survey form to suggest additional elements for the quality evaluation.

The general users group was surveyed only on the non-technical elements, as we judged that they might have difficulty assessing specialized technical factors.

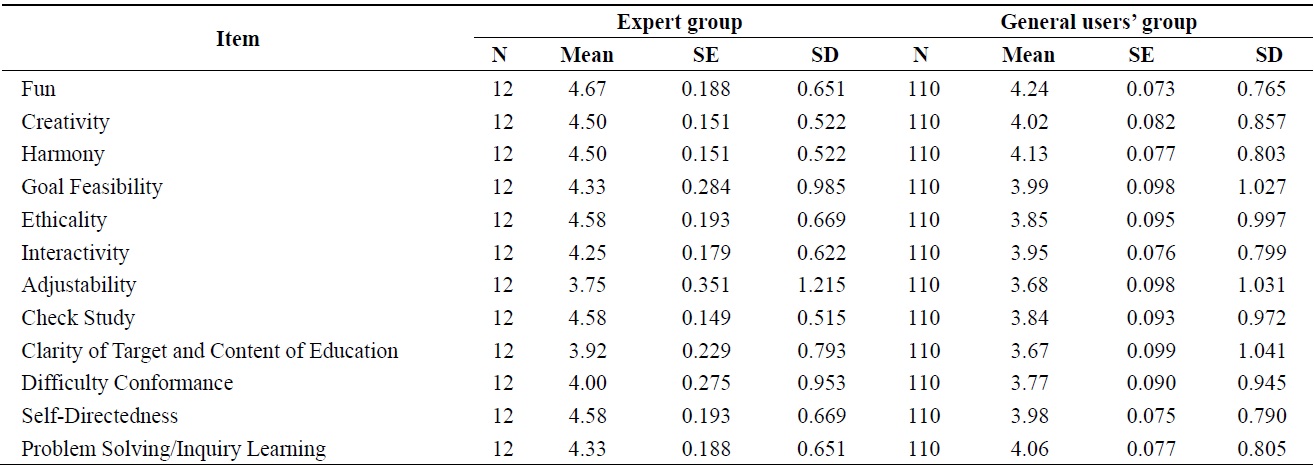

We used the SPSS statistical software program to analyze the survey data and calculate descriptive statistics in order to determine which quality evaluation elements should be included in the final standard. Table 3 shows the descriptive statistics of the expert group’s responses to the technical quality evaluation elements. As Table 3 shows, the mean need ratings for the technical elements are all higher than 3.50. Accordingly, all technical quality evaluation elements of the draft standard were accepted into the final standard, and no elements were eliminated. Table 4 lists the descriptive statistics of the expert group’s and the general users group’s responses to the non-technical quality evaluation elements.

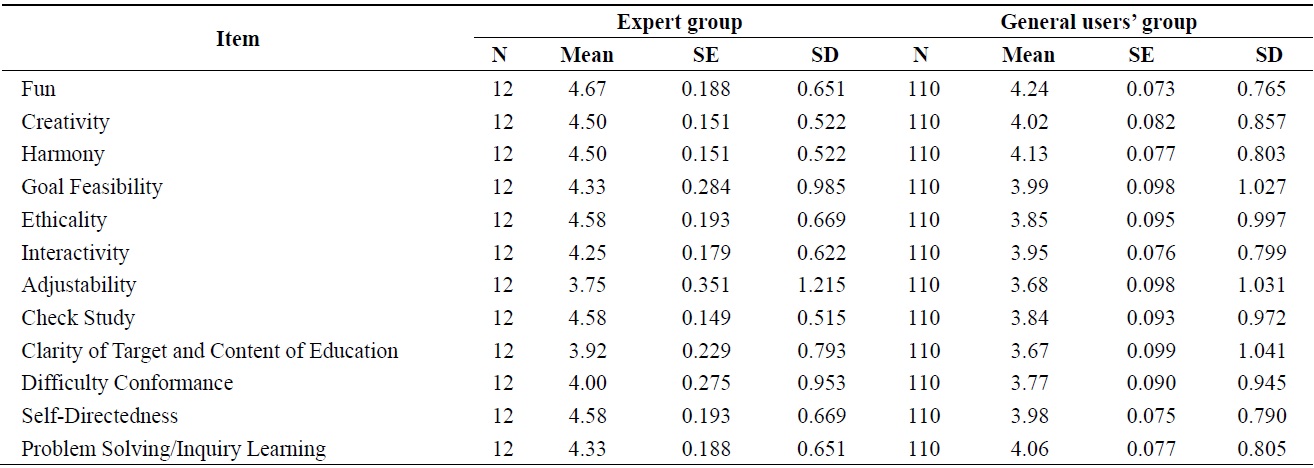

As Table 4 shows, the mean need ratings for the non-technical elements are all higher than 3.75 in the expert group and higher than 3.67 in the general users group. These results indicate that all the proposed non-technical elements are required. They were therefore all accepted into the final quality evaluation standard.
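The descriptive statistics used to screen elements can be sketched as follows. The paper reports only that all means exceeded 3.50 (technical elements) and 3.75 and 3.67 (non-technical elements, expert and general users groups). It does not state an explicit decision rule, so the `cutoff=3.5` parameter of the hypothetical `retained` function is an assumption. The ratings in the usage example are invented for illustration and are not the survey data.

```python
from statistics import fmean, stdev


def describe(ratings):
    """Map each element to (n, mean, sample SD) of its five-point Likert ratings."""
    summary = {}
    for element, scores in ratings.items():
        if len(scores) < 2 or any(s not in range(1, 6) for s in scores):
            raise ValueError(f"{element}: need at least two ratings, each from 1 to 5")
        summary[element] = (len(scores), fmean(scores), stdev(scores))
    return summary


def retained(summary, cutoff=3.5):
    """Hypothetical rule (an assumption): keep elements whose mean exceeds `cutoff`."""
    return [element for element, (_, mean, _) in summary.items() if mean > cutoff]


if __name__ == "__main__":
    # Invented ratings for illustration only.
    example = {
        "Fault Tolerance": [4, 5, 4, 4, 5],
        "Extensibility": [3, 4, 4, 5, 4],
        "Hypothetical X": [2, 3, 3, 2, 3],
    }
    stats = describe(example)
    for element, (n, mean, sd) in stats.items():
        print(f"{element}: n={n}, mean={mean:.2f}, sd={sd:.2f}")
    print(retained(stats))  # ['Fault Tolerance', 'Extensibility']
```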

[Table 4.] Statistics on non-technical quality evaluation elements

Consequently, the proposed final quality standard is composed of 9 main elements and 31 sub-elements. Some members of the expert group suggested additional quality elements. First, they proposed generalization and transfer elements to evaluate whether a user is able to apply the learned content to daily life after playing the game. Second, they proposed an element for satisfaction with the product price. Lastly, they proposed an automatic control function element to evaluate whether the game automatically prevents excessive use of inappropriate language in the user chat system. We will consider these suggested elements in greater detail in future work. In this paper, we propose the elements of the final quality evaluation standard shown in Fig. 1.

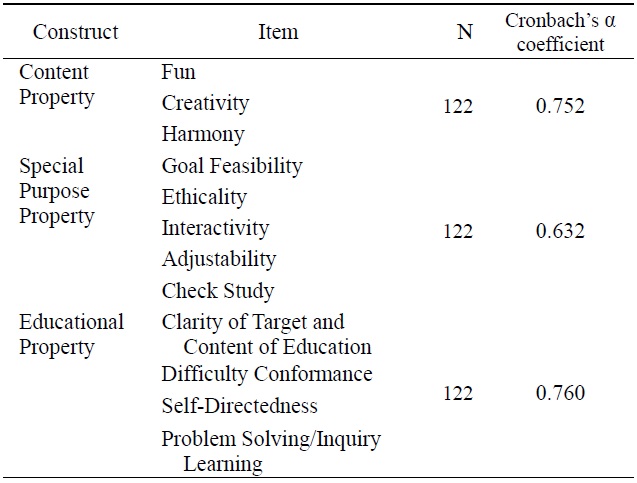

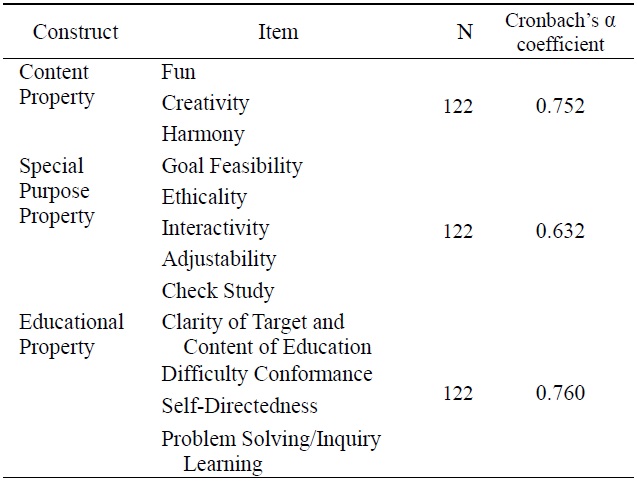

The most common method of verifying questionnaire reliability is to assess internal consistency using Cronbach’s alpha coefficient. In general, a Cronbach’s alpha value greater than 0.6 indicates an acceptable level of reliability, and a value greater than 0.7 indicates that the questionnaire is reliable [17-19].
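Cronbach’s alpha for a construct with k items is α = k/(k − 1) · (1 − Σσᵢ²/σ_X²). Here σᵢ² is the variance of item i and σ_X² is the variance of respondents’ total scores. The sketch below computes alpha in Python and applies the 0.6 and 0.7 thresholds quoted above. `cronbach_alpha` and `interpret` are hypothetical names, and this is not the SPSS procedure used in the study. The sketch uses population variances throughout, which gives the same alpha as sample variances because the n/(n − 1) factors cancel.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """items: k lists of item scores, each holding one score per respondent."""
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    n = len(items[0])
    if n < 2 or any(len(item) != n for item in items):
        raise ValueError("each item needs the same number (>= 2) of respondents")
    # Total score of each respondent across all k items.
    totals = [sum(item[r] for item in items) for r in range(n)]
    total_var = pvariance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    item_var_sum = sum(pvariance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / total_var)


def interpret(alpha):
    """Thresholds quoted in the text: > 0.7 reliable, > 0.6 acceptable."""
    if alpha > 0.7:
        return "reliable"
    if alpha > 0.6:
        return "acceptable"
    return "below acceptable"


if __name__ == "__main__":
    print(interpret(0.632))  # acceptable
```

For instance, `interpret(0.632)` returns "acceptable", which matches the coefficient reported for the special purpose property.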

We used Cronbach’s alpha coefficient to confirm the reliability of our survey results. We omitted reliability verification for the technical evaluation elements because most of them were extracted from existing standards and had already been verified during the development of those standards. In this study, we therefore verified the reliability of the non-technical elements only.

The content property, special purpose property, and educational property were each constructed as multi-item constructs. Table 5 presents the results of the reliability analysis for each construct.

[Table 5.] Results of analysis of reliability


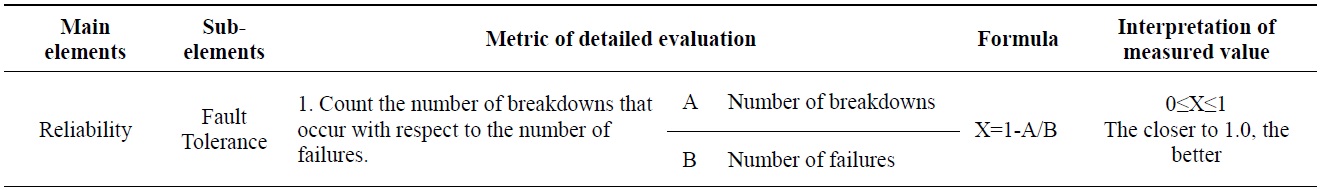

[Table 6.] Metric of Fault Tolerance (ⓒ ISO/IEC 2013. All rights reserved) [11]


According to this analysis, the Cronbach’s alpha coefficient of the special purpose property is 0.632, which exceeds the 0.6 acceptability threshold. The coefficients of the remaining constructs are greater than 0.7. Therefore, all constructs were judged to be acceptably reliable.

V. METRICS OF THE QUALITY EVALUATION

In the quality assessment of software products, the evaluator’s subjective view often has a greater influence on the overall evaluation than objective considerations. To reduce this, we must design the quantitative evaluation metrics in such a way as to achieve objective and reliable

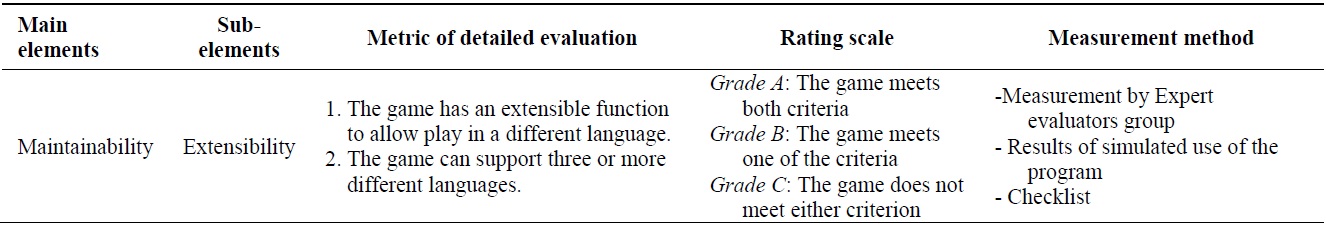

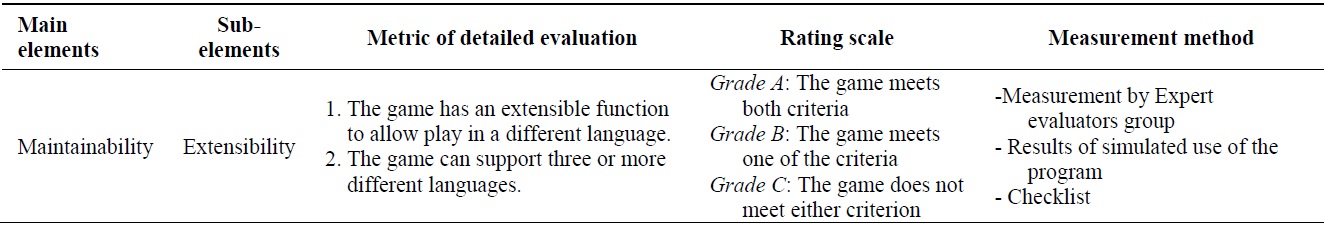

[Table 7.] Metric of extensibility

Metric of extensibility

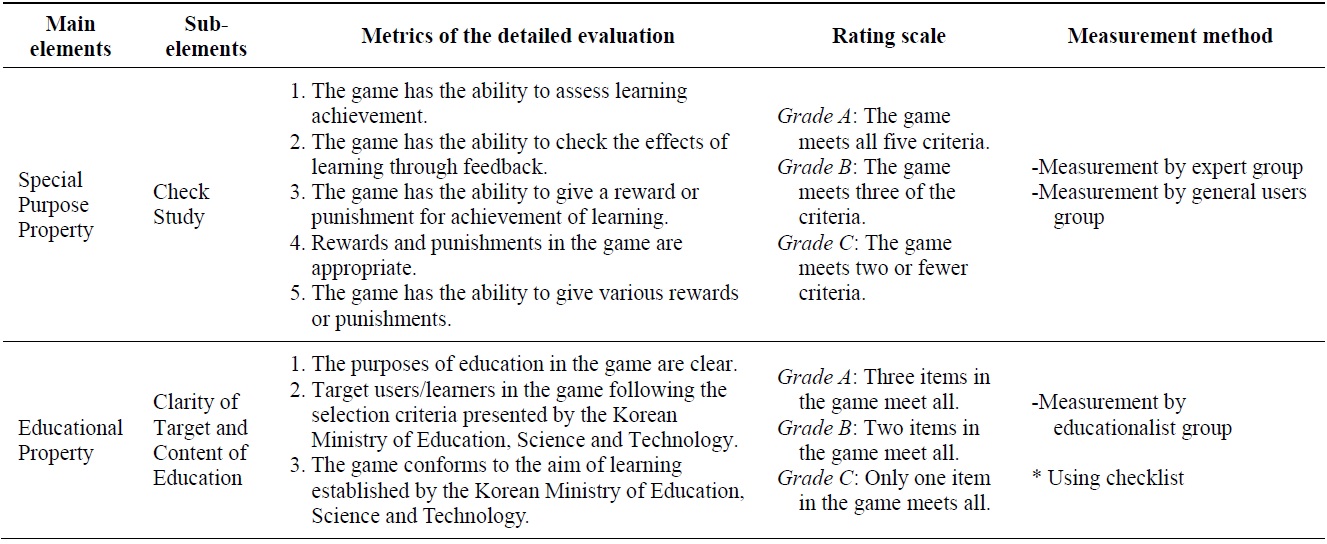

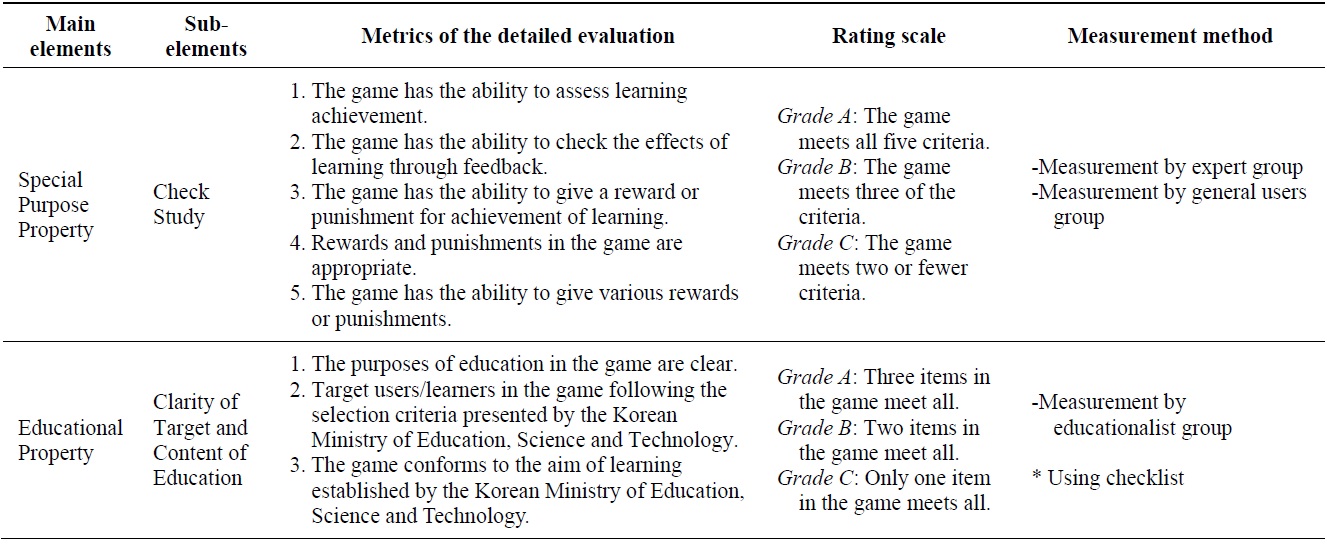

[Table 8.] Metrics of non-technical elements (some cases)

Metrics of non-technical elements (some cases)

product evaluations. With this goal in mind, we establish detailed evaluation criteria of individual elements of the proposed standard. With the exception of the proposed elements in this paper, we adopt, intact, the metrics of the original standards as the evaluation criteria of all elements in our standard. Table 6 shows an example of an ISO/IEC 9126 metric for Fault Tolerance.

To create metrics for both the technical and non-technical elements proposed in this paper, we employ a letter-grade system. Each metric contains two to five items used to assess the quality of an element. Each element is assigned one of three grades in the overall evaluation: A (30 points), B (20 points), or C (10 points). Table 7 presents the metric of Extensibility as defined in this paper. Table 8 shows a sample metric for educational elements, which is used to evaluate the quality of non-technical game components.

To evaluate each main element, we compute the average of the evaluation scores of its sub-elements.

We propose to express the evaluation result for each main element as a grade, as follows. If the average is 27 points or higher (i.e., at least 90% of the full 30 points), the element receives a grade of A. An average of at least 24 points (80%) but below 27 points indicates a B, and any lower average corresponds to a C.
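Under our reading of the grading scheme, each sub-element receives A (30), B (20), or C (10) points, and the main element’s score is the average of those points. The 27-point and 24-point thresholds then map the average to a grade. A minimal Python sketch follows. `POINTS` and `main_element_grade` are hypothetical names and are not part of the standard.

```python
# Points per sub-element grade, as stated in the text.
POINTS = {"A": 30, "B": 20, "C": 10}


def main_element_grade(sub_grades):
    """Average the sub-element points and map the average to A, B, or C."""
    if not sub_grades:
        raise ValueError("a main element needs at least one graded sub-element")
    average = sum(POINTS[g] for g in sub_grades) / len(sub_grades)
    if average >= 27:  # at least 90% of 30 points
        return average, "A"
    if average >= 24:  # at least 80% of 30 points
        return average, "B"
    return average, "C"


if __name__ == "__main__":
    print(main_element_grade(["A", "A", "A", "B"]))  # (27.5, 'A')
    print(main_element_grade(["A", "A", "B"]))       # average about 26.67, grade B
```

Sub-element scores can only be 30, 20, or 10. If the remaining sub-elements are all graded B, an A therefore requires at least 70% of the sub-elements to be graded A (20 + 10p ≥ 27 gives p ≥ 0.7).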

Educational serious games currently occupy the largest portion of the serious game market. Therefore, we proposed quality evaluation standards to objectively and reliably evaluate the quality of educational serious games. The proposed standards were designed to evaluate all aspects of the quality of both the technical and non-technical elements of educational serious game software. We presented a development framework for quality standards, developed the quality evaluation elements in accordance with our proposed procedure, and incorporated these elements into our standard. As a result, we have proposed, for the first time, draft standards for the quality evaluation of educational serious games. The standards are composed of 9 main elements and 31 sub-elements.

To decide which elements would be used for the final quality standard, we conducted a survey about the need for elements of our draft standard, targeting experts and general users. We determined the necessary elements based on descriptive statistics of the survey results.

Further, we performed a reliability analysis of the survey results to ensure the reliability of the questionnaire. The analyses revealed no unnecessary or low-reliability evaluation elements in the draft standard. Thus, we accepted all elements of the draft standard, unchanged, as elements of the final quality evaluation standard.

Quantitative and specific metrics are needed to objectively and reliably evaluate the quality of educational serious game products. Therefore, we developed detailed metrics and presented examples in this paper. One of the main goals of our research is to supply users with quantitative quality evaluation information about educational serious games to aid their selection. Consequently, we expect that users will be able to use this information to easily and conveniently select high-quality educational serious games.

Our second goal is to provide game developers with quality standards for developing high-quality educational serious games. Accordingly, we anticipate that game developers will be able to use the information presented here to produce competitive, user-favored, high-quality products.

In future research, we will carry out the remaining two steps of our framework and attempt to establish a complete quality standard. Additionally, we will devote more effort to expanding our standard toward an international standard for educational serious games.

![A result of mapping for ISO/IEC 9126* and TTAS.KO-11.0078 [11, 15]](http://oak.go.kr/repository/journal/12211/E1ICAW_2013_v11n2_103_t002.jpg)

![Metric of Fault Tolerance (ⓒ ISO/IEC 2013. All rights reserved) [11]](http://oak.go.kr/repository/journal/12211/E1ICAW_2013_v11n2_103_t006.jpg)