In this paper, we propose a fuzzy inference model for a navigation algorithm that lets a mobile robot intelligently search for a goal location in unknown dynamic environments. Our model fuses sensor data through situational commands, using an ultrasonic sensor. Instead of the "physical sensor fusion" method, which generates the trajectory of a robot from the environment model and sensory data, a "command fusion" method is used to govern the robot motions. The navigation strategy combines fuzzy rules tuned for both goal approach and obstacle avoidance within a hierarchical behavior-based control architecture. To identify the environment, a command fusion technique is introduced in which the sensory data of the ultrasonic sensors and a vision sensor are fused into the identification process. The experimental results highlight interesting aspects of the goal-seeking, obstacle-avoiding, and decision-making processes that arise from navigation interaction.

An autonomous mobile robot is an intelligent robot that performs tasks by interacting with the surrounding environment through sensors without human control. Unlike general manipulators, which operate in a fixed working environment [1,2], such a robot requires intelligent processing in a flexible and variable working environment. Robust behavior by autonomous robots requires that the uncertainty in such environments be accommodated by the robot control system. Therefore, studies on fuzzy rule-based control are attractive in this field. Fuzzy logic is particularly well suited for implementing such controllers because of its capabilities for inference and approximate reasoning under uncertainty [3-5]. Many fuzzy controllers proposed in the literature use a monolithic rule-based structure. That is, the precepts that govern the desired system behavior are encapsulated as a single collection of if-then rules. In most instances, these rules are designed to carry out a single control policy or goal. However, to achieve autonomy, mobile robots must be capable of achieving multiple goals whose priorities may change with time. Thus, controllers should be designed to realize a number of task-achieving behaviors that can be integrated to achieve different control objectives. This requires the formulation of a large and complex set of fuzzy rules, and here a potential limitation of the single command fuzzy controller becomes apparent. Since the size of the complete single command rule base increases exponentially with the number of input variables [6,7], multi-input systems can suffer degradation in real-time response. This is a critical issue for mobile robots operating in dynamic surroundings [8,9]. Hierarchical rule structures can be employed to overcome this limitation by reducing the rate of increase to linear [1,10].

This paper describes a hierarchical behavior-based control architecture. It is structured as a hierarchy of fuzzy rule bases that enables the distribution of intelligence amongst special purpose fuzzy-behaviors. This structure is motivated by the hierarchical nature of the behavior as hypothesized in ethological models. A fuzzy coordination scheme is also described that employs weighted decision making based on contextual behavior activation. Performance is demonstrated by simulation that highlights interesting aspects of the decision making process that arise from behavior interaction.

Section II briefly introduces the operation of each command and the fuzzy controller for the navigation system. Section III explains the behavior hierarchy based on fuzzy logic. Section IV presents the experimental results that verify the efficiency of the system. Finally, Section V concludes this work and outlines possible future related work.

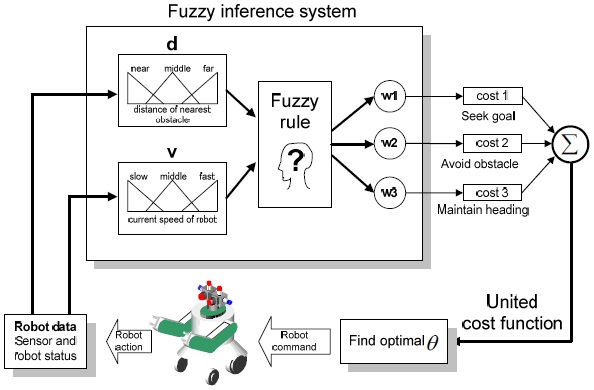

The proposed fuzzy controller is shown in Fig. 1. We define three major navigation goals: target orientation, obstacle avoidance, and rotation movement. Each goal is represented as a cost function. The fusion process forms a single cost function by combining these cost functions using weights. In this fusion process, we infer the weight of each command by a fuzzy algorithm, which is a typical artificial intelligence scheme. With the proposed method, the mobile robot navigates intelligently by varying the weights depending on the environment, and it selects the final command that keeps the variation of the orientation and velocity to a minimum according to the cost function [11-15].

The orientation command of a mobile robot is generated as the nearest direction to the target point. The command is defined as the distance to the target point when the robot moves to the orientation, θ, and the velocity,

where


B. Avoiding Obstacle Command

We represent the cost function for obstacle avoidance in terms of the shortest distance to an obstacle, based on the sensor data in the form of a histogram. The distance information is expressed as a second-order energy term and is evaluated as a cost function over all θ, as shown in Eq. (2).

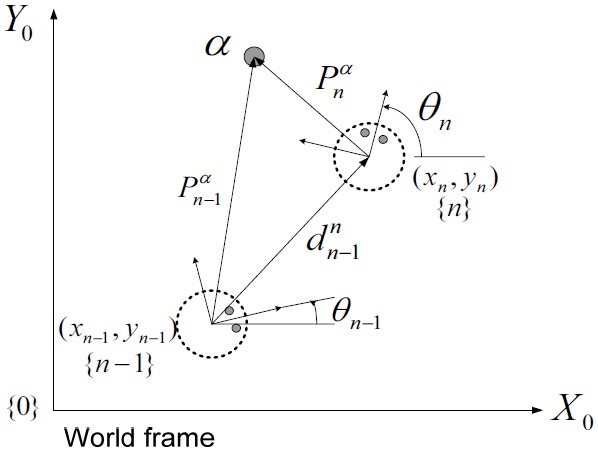

To navigate in a dynamic environment to the goal, a mobile robot should recognize the dynamic variation and react to it. For this, the mobile robot extracts the variation in the surrounding environment by comparing the past and the present. For continuous movement of a robot, the transformation matrix of a past frame

In Fig. 2, the vector,

is defined as the position vector of the mobile robot

is defined as the vector

and

as follows:

Here,

is the rotation matrix from {n-1} to the {n} frame, and

is the translation matrix from the {n-1} frame to the {n} frame.
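The frame change described above can be illustrated with a minimal sketch. It assumes planar motion, a heading change `dtheta` and a translation `translation` expressed in the {n-1} frame; these names, the sign convention, and the index-wise comparison in `changed_points` are illustrative assumptions and are not taken from the original equations.

```python
import math

def to_current_frame(points_prev, dtheta, translation):
    """Express points measured in frame {n-1} in frame {n}.

    Assumed convention (not from the paper): frame {n} is reached from
    {n-1} by translating by `translation` (given in {n-1}) and then
    rotating by `dtheta` radians counter-clockwise.
    """
    tx, ty = translation
    c, s = math.cos(dtheta), math.sin(dtheta)
    out = []
    for x, y in points_prev:
        dx, dy = x - tx, y - ty            # remove the translation
        # apply the inverse rotation R^T to the shifted point
        out.append((c * dx + s * dy, -s * dx + c * dy))
    return out

def changed_points(predicted, measured, tol=0.1):
    """Indices where the present reading differs from the transformed past one."""
    return [i for i, (p, m) in enumerate(zip(predicted, measured))
            if math.dist(p, m) > tol]

# The robot advanced 1 m along x and turned 90 degrees to the left.
past = [(2.0, 0.0), (1.0, 1.0)]
predicted = to_current_frame(past, math.pi / 2, (1.0, 0.0))
measured = [(0.0, -1.0), (1.0, 0.5)]       # second point has moved
print(changed_points(predicted, measured))
```

Points whose present measurement departs from the transformed past measurement by more than the tolerance are candidates for dynamic obstacles under these assumptions.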

According to Eq. (3), the environment information measured in the {n-1} frame can be represented as

where


C. Minimizing Rotation Command

Minimizing rotational movement aims to rotate the wheels smoothly by restraining rapid motion. The cost function attains its minimum at the present orientation and is a second-order function of the rotation angle, θ, as in Eq. (5).

The command, represented as a cost function, has three different goals to be satisfied at the same time. Each goal contributes to the command according to its own weight, as shown in Eq. (6).
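A minimal sketch of this weighted command fusion is given here, assuming a discrete set of candidate headings and simple quadratic forms for the three costs. The function names, the exact cost expressions, and the default values of `v`, `dt`, and `d_max` are hypothetical stand-ins for Eqs. (1), (2), (5), and (6), not the authors' formulas.

```python
import math

def goal_cost(theta, pose, target, v, dt):
    """Squared distance to the target after moving for dt at speed v along theta."""
    nx = pose[0] + v * dt * math.cos(theta)
    ny = pose[1] + v * dt * math.sin(theta)
    return (target[0] - nx) ** 2 + (target[1] - ny) ** 2

def obstacle_cost(distance, d_max):
    """Second-order penalty that grows as the measured range shrinks (assumed form)."""
    return (d_max - min(distance, d_max)) ** 2

def rotation_cost(theta, theta_now):
    """Second-order penalty on turning away from the present orientation."""
    return (theta - theta_now) ** 2

def fuse_commands(thetas, ranges, weights, pose, theta_now, target,
                  v=0.3, dt=0.5, d_max=3.0):
    """Return the candidate heading that minimizes the weighted sum of costs.

    thetas  : candidate headings (rad)
    ranges  : range reading along each candidate heading (m)
    weights : (w_goal, w_obstacle, w_rotation)
    """
    w_goal, w_obs, w_rot = weights

    def total(i):
        th = thetas[i]
        return (w_goal * goal_cost(th, pose, target, v, dt)
                + w_obs * obstacle_cost(ranges[i], d_max)
                + w_rot * rotation_cost(th, theta_now))

    best = min(range(len(thetas)), key=total)
    return thetas[best]

thetas = [-math.pi / 4, 0.0, math.pi / 4]
ranges = [1.0, 0.5, 3.0]                   # obstacle ahead and to the right
heading = fuse_commands(thetas, ranges, (1.0, 10.0, 0.0),
                        pose=(0.0, 0.0), theta_now=0.0, target=(5.0, 0.0))
print(heading)
```

With a large obstacle weight the sketch steers toward the free heading, whereas with only the goal weight active it keeps the straight heading toward the target.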

III. BEHAVIOR HIERARCHY BY FUZZY LOGIC

Primitive behaviors are low-level behaviors that typically take the inputs from the robot’s sensors and send the outputs to the robot’s actuator. This forms a nonlinear map between them. Composite behaviors make up a map between the sensory input and/or the global constraints and the degree of applicability (DOA) of the relevant primitive behaviors. The DOA is the measure of the instantaneous level of the activation of a behavior. The primitive behaviors are weighted by the DOA and aggregated to form the composite behaviors. This is a general form of behavior fusion that can degenerate to behavior switching for DOA = 0 or 1 [16,17].
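The DOA-weighted aggregation can be sketched as follows. The normalized weighted average used here is one common way to aggregate behavior outputs and is an assumption, as are the behavior names and the scalar steering outputs; the paper does not specify the aggregation operator in this passage.

```python
def fuse_behaviors(outputs, doas):
    """Aggregate primitive-behavior outputs weighted by their DOA.

    outputs : dict mapping behavior name -> recommended command (e.g. steering rate)
    doas    : dict mapping behavior name -> degree of applicability in [0, 1]
    Returns the DOA-weighted average, or 0.0 when no behavior is active.
    """
    total = sum(doas[name] for name in outputs)
    if total == 0:
        return 0.0
    return sum(doas[name] * outputs[name] for name in outputs) / total

# Hypothetical primitive behaviors and their recommended steering rates (rad/s).
outputs = {"go_to_goal": 0.4, "avoid_obstacle": -0.8}

# Blended fusion: both behaviors are partially applicable.
print(fuse_behaviors(outputs, {"go_to_goal": 0.5, "avoid_obstacle": 0.5}))

# Degenerate case: DOA of 0 or 1 reduces fusion to behavior switching.
print(fuse_behaviors(outputs, {"go_to_goal": 1.0, "avoid_obstacle": 0.0}))
```

In the second call only one behavior has a nonzero DOA, so the fused command equals that behavior's output, which corresponds to the switching case mentioned above.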

At a primitive level, behaviors are synthesized as fuzzy rule bases, i.e., a collection of fuzzy if-then rules. Each behavior is encoded with a distinct control policy governed by fuzzy inference. If

where

At the composition level, the DOA is evaluated using a fuzzy rule base in which the global knowledge and constraints are incorporated. An activation level (threshold) at which the rules become applicable is applied to the DOA, giving the system more degrees of freedom. The DOA of each primitive behavior is specified in the consequent of the applicability rules of the form:

where

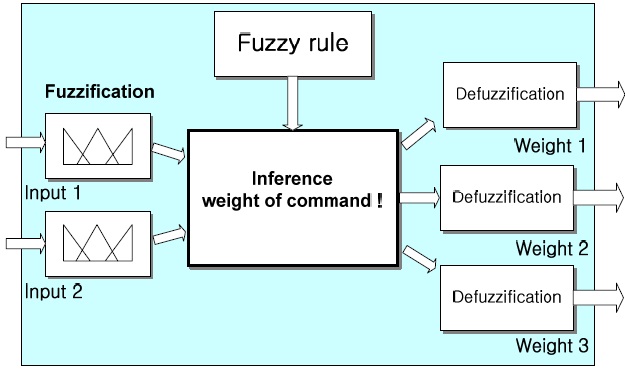

We infer the weights of Eq. (6) by means of a fuzzy algorithm. The main reason for using a fuzzy algorithm is that it makes it easy to reflect human intelligence in the robot control. The fuzzy inference system is developed by defining each situation, constructing fuzzy rules with the proper weights, and calculating the weights for the commands.
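As an illustration of inferring one weight by fuzzy rules, the sketch below maps the distance to the nearest obstacle to an obstacle-avoidance weight. The single input, the membership breakpoints (0.3 m, 1.0 m, 2.0 m), the three rules, and the singleton consequents (0.9, 0.5, 0.1) are all hypothetical; the rules actually used are those of Table 1, and the weighted-average defuzzification is an assumed choice.

```python
def falling(x, b, c):
    """Left-shoulder membership: 1 up to b, decreasing linearly to 0 at c."""
    if x <= b:
        return 1.0
    if x >= c:
        return 0.0
    return (c - x) / (c - b)

def rising(x, a, b):
    """Right-shoulder membership: 0 up to a, increasing linearly to 1 at b."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return (x - a) / (b - a)

def tri(x, a, b, c):
    """Triangular membership with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)

def infer_obstacle_weight(distance):
    """Infer the obstacle-avoidance weight from the nearest obstacle distance (m)."""
    # Rule strengths: IF distance is NEAR / MEDIUM / FAR ...
    near = falling(distance, 0.3, 1.0)
    medium = tri(distance, 0.3, 1.0, 2.0)
    far = rising(distance, 1.0, 2.0)
    # ... THEN weight is LARGE / MEDIUM / SMALL (singleton consequents).
    rules = [(near, 0.9), (medium, 0.5), (far, 0.1)]
    total = sum(strength for strength, _ in rules)
    # Weighted-average defuzzification; the memberships above always sum to 1.
    return sum(strength * w for strength, w in rules) / total

for d in (0.2, 0.65, 1.5, 2.5):
    print(d, round(infer_obstacle_weight(d), 3))
```

The inferred weight decreases smoothly as the obstacle recedes, so the obstacle-avoidance command dominates near obstacles and fades in open space.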

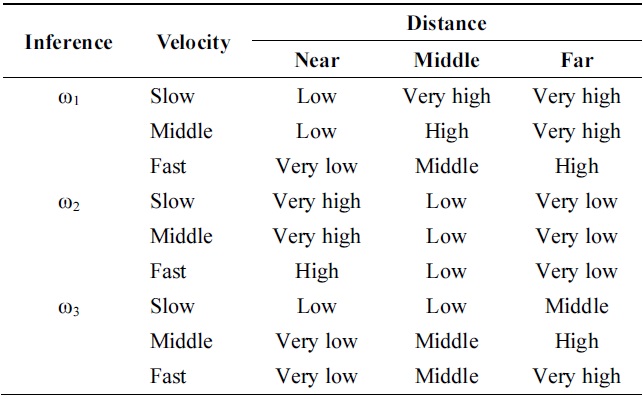

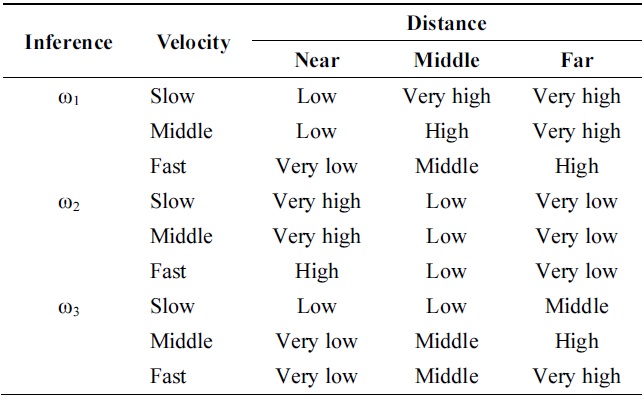

Table 1. Inference rule of each weight system

Fig. 3 shows the structure of the fuzzy inference system. We define the circumstances and the state of the mobile robot as the inputs of the fuzzy inference system and infer the weights of the cost functions. The inferred weights determine the cost function that directs the robot and determine the velocity of rotation. For control of the mobile robot, the results are transformed into the joint angular velocities by the inverse kinematics of the robot. Table 1 gives the inference rules of the fuzzy inference system for each fuzzy weight subset in terms of the inputs and the output. The control inference rule is:

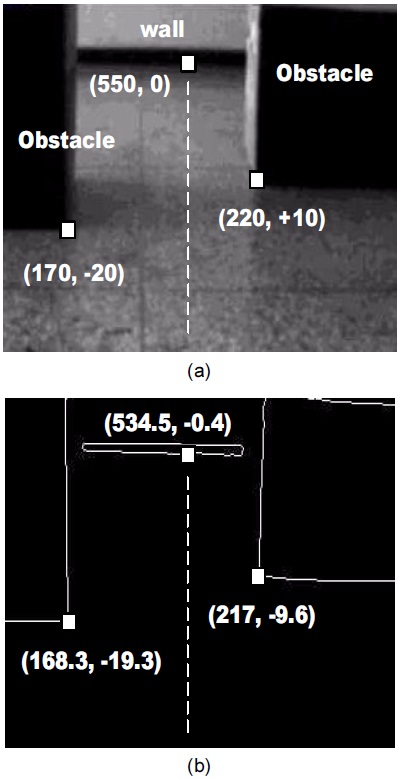

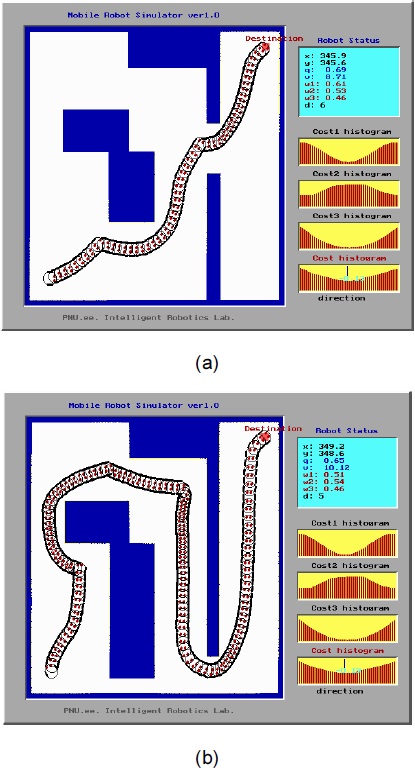

Fig. 4a is the image used in the experiment. Fig. 4b shows the values resulting from matching after image processing. Fig. 4 shows that the maximum matching error is within 4%, which indicates that our vision system is feasible for navigation. The mobile robot navigates along a 2-m-wide corridor containing some obstacles, as shown in Fig. 5a. The actual trajectory of the mobile robot is shown in Fig. 5b. It demonstrates that the mobile robot avoids the obstacles intelligently and follows the corridor to the goal.

A fuzzy control algorithm for both obstacle avoidance and path planning was implemented and tested in experiments. It enables a mobile robot to reach its goal point safely and autonomously in unknown environments.

We also present an architecture for intelligent navigation of mobile robots that determines the robot’s behavior by arbitrating the distributed control commands: seek goal, avoid obstacles, and maintain heading. The commands are arbitrated by endowing them with a weight value and combining them, and the weight values are obtained by a fuzzy inference method. The arbitrating command allows multiple goals and constraints to be considered simultaneously. To show the efficiency of the proposed method, real experiments were performed. The experimental results show that a mobile robot can navigate to the goal point safely in unknown environments and can also avoid moving obstacles autonomously. Our ongoing research endeavors will include validation of more complex sets of behaviors, both in simulation and with an actual mobile robot. Further improvements of the prediction algorithm for obstacles and the robustness of performance are required.