Object recognition’s many applications include its use in autonomous vehicles, artificial intelligence, and defense. Within this field, three-dimensional (3-D) automatic target recognition using integral imaging techniques has been an important topic of research [1-9].

Integral imaging, first proposed by G. Lippmann in 1908 [10], employs lenslet or camera arrays to capture and display 3-D images. Each lenslet provides a different perspective on the subject, generating an elemental image for capture. The resulting array of 2-D images, illuminated from behind and projected through another lenslet array, displays a 3-D reconstruction. The technique provides full parallax, continuous viewing points, and relatively high depth resolution without the need for special glasses [10-15], all desirable characteristics.

The reconstruction mechanism itself can be used to implement forms of automatic target recognition. For example, in [4], the authors propose a method for locating the position of target objects in 3-D space. They use the fact that computational integral imaging reconstruction (CIIR) can output a range of 3-D reconstructions, each at a different reconstruction depth [14]. Advanced correlation filters are then applied to the range of reconstructed images created by CIIR to complete the automatic target recognition task.

In practice, such methods are difficult to apply in real-time applications. The computation time and resources required for 3-D reconstruction limit its usefulness [6]. To address the limitation imposed by the computational cost of 3-D reconstruction, an all-optical structure for 3-D automatic target recognition offers an alternative.

This paper proposes such an all-optical integral imaging approach. In our method, we optically reconstruct 3-D reference and target images. We then perform automatic target recognition, applying a nonlinear correlation filter to the reference and target images. To assess this proposed method, we discuss optical experiments performed and present recognition results obtained in these experiments.

II. REVIEW OF CIIR-TYPE 3-D OBJECT CORRELATION METHOD

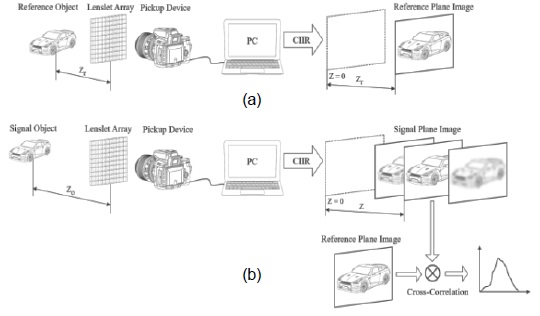

Fig. 1 illustrates the principle of the conventional CIIR-type 3-D object correlation method. The process is separated into two main stages: pickup and recognition. In the pickup stage, we assume that

Fig. 1(a) depicts the reference object method, and Fig. 1(b) the steps in the target object method. Fig. 1(b) also depicts the automatic target recognition step, implemented using cross-correlation between a single, reference 3-D image and the various target 3-D images.

When recognition is implemented with this method, a high correlation peak can be obtained only at

While the method works, CIIR is computationally slow and requires substantial computing resources. Therefore, when real-time processing is required, an alternative method may be preferable.
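To make the source of this cost concrete, the following is a minimal sketch of a CIIR-style reconstruction, assuming a simplified shift-and-sum model in which each elemental image is offset by an integer number of pixels and overlapping pixels are averaged. The function name `ciir_shift_and_sum` and the parameter `shift_px` are hypothetical and are not taken from the method reviewed above; in practice the shift would be derived from the lenslet pitch, the gap, and the reconstruction depth.

```python
import numpy as np

def ciir_shift_and_sum(elemental, shift_px):
    """Reconstruct one depth plane from a grid of elemental images.

    elemental: array of shape (K, L, H, W), a K x L grid of H x W images.
    shift_px:  assumed integer pixel offset between neighbouring elemental
               images for the chosen reconstruction depth (hypothetical
               parameter; it stands in for the depth-dependent geometry).
    """
    K, L, H, W = elemental.shape
    out_h = H + (K - 1) * shift_px
    out_w = W + (L - 1) * shift_px
    acc = np.zeros((out_h, out_w))
    count = np.zeros((out_h, out_w))
    for k in range(K):
        for l in range(L):
            y0, x0 = k * shift_px, l * shift_px
            acc[y0:y0 + H, x0:x0 + W] += elemental[k, l]
            count[y0:y0 + H, x0:x0 + W] += 1
    # Average overlapping contributions; guard against uncovered pixels.
    return acc / np.maximum(count, 1)

# One full pass over every elemental image is needed per depth plane,
# so the cost grows with the number of planes that must be searched.
rng = np.random.default_rng(0)
elemental = rng.random((4, 4, 32, 32))
depth_planes = [ciir_shift_and_sum(elemental, s) for s in range(4)]
```

Each additional reconstruction depth repeats the full accumulation loop, which is the part of the pipeline that an optical reconstruction would replace.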

III. PROPOSED 3-D CORRELATION METHOD BASED ON OPTICAL INTEGRAL IMAGING RECONSTRUCTION

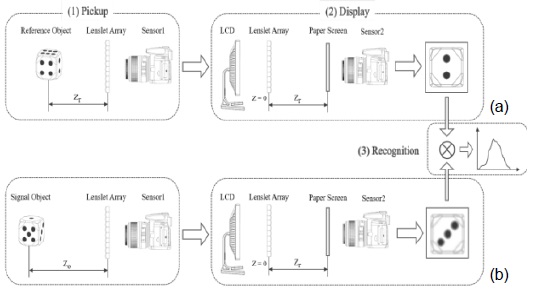

Fig. 2 illustrates our proposed 3-D object correlation method. In the conventional CIIR-type 3-D object correlation method presented above, 3-D images are reconstructed by computer simulation. In our proposed method, an optical display system reconstructs the 3-D images. The all-optical structure allows us to implement automatic target recognition in real time.

Our system has three main parts: pickup, display, and recognition. For pickup, let us assume that the reference object has known position

In the display part of the process, 3-D image reconstruction begins when these elemental images are reproduced on a liquid crystal display (LCD). Light illuminating the LCD passes through the lenslet array, generating a 3-D optical image that the second image sensor (Sensor 2) captures. Because the reconstruction is formed optically, at the speed of light, the system is much faster than those used to implement the conventional CIIR-type correlation method. The optically reconstructed 3-D images have relatively high 3-D resolution (lateral and depth) since all elemental images are superimposed by optical magnification.

For the reference object, Sensor 2 records its reconstructed 3-D image at the known distance

To record the reconstructed image of the target object, Sensor 2 is positioned at

Recognition proceeds by means of a correlation process comparing

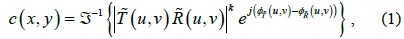

where are the Fourier transform and phase of the reconstructed reference image,

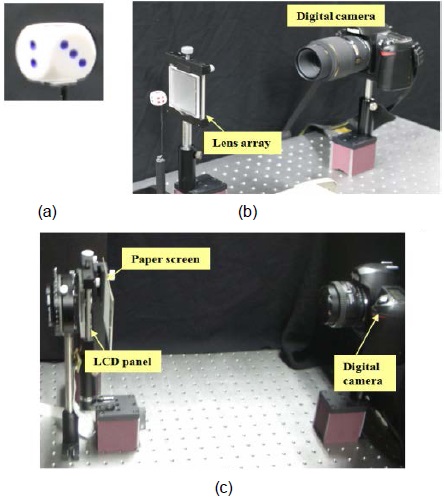
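As an illustration of the recognition step, the sketch below computes a nonlinear correlation between a reference image and a target image. It assumes the commonly used kth-law form, in which the Fourier magnitudes are raised to a power `k` and the phase difference is retained; whether this matches the exact filter used here is an assumption, and the function name `kth_law_correlation` and the value `k=0.5` are hypothetical choices for demonstration.

```python
import numpy as np

def kth_law_correlation(reference, target, k=0.5):
    """Correlation-plane intensity under an assumed kth-law nonlinearity.

    k = 1 reduces to ordinary linear cross-correlation; smaller k
    emphasises phase information and typically sharpens the peak.
    """
    R = np.fft.fft2(reference)
    T = np.fft.fft2(target)
    magnitude = (np.abs(R) * np.abs(T)) ** k
    phase = np.exp(1j * (np.angle(T) - np.angle(R)))
    return np.abs(np.fft.ifft2(magnitude * phase)) ** 2

# Synthetic check: the target is the reference circularly shifted by
# 5 rows and -3 columns, so the peak should appear at that offset.
rng = np.random.default_rng(0)
ref = rng.random((64, 64))
tgt = np.roll(ref, shift=(5, -3), axis=(0, 1))
corr = kth_law_correlation(ref, tgt)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(corr), corr.shape))
```

In this synthetic case the location of the correlation peak recovers the lateral offset between the two images, while the peak height serves as the recognition score.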

We carried out optical experiments to assess our proposed 3-D object recognition method. A ‘die’, shown in Fig. 3(a), was used as the reference and target object in this experiment. Its size was 2 cm × 2 cm × 2 cm (W × H × D). When serving as the reference object, the ‘die’ was positioned at (

A set of recorded elemental images is shown in Fig. 4(a). We used a paper screen and a digital camera to form Sensor 2. The paper screen was placed

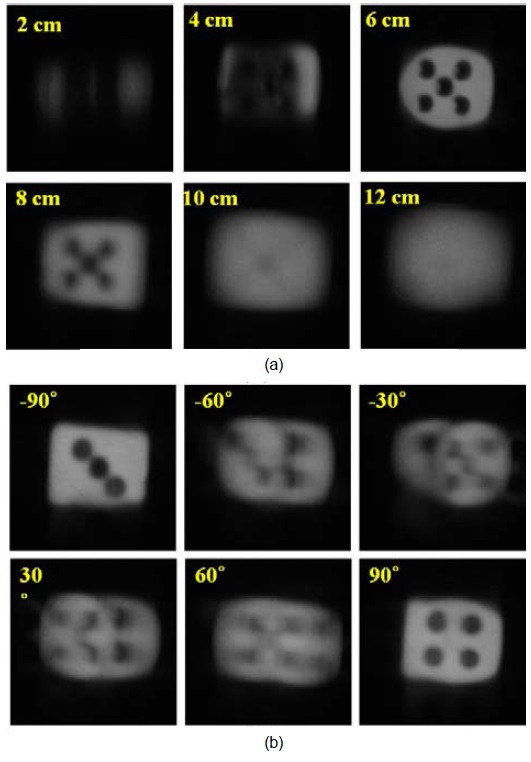

When used as a target object, the ‘die’ was shifted along the z-axis or rotated by an arbitrary angle on the y-axis. Elemental images for each case were recorded using the optical pickup apparatus shown in Fig. 3(b). Then, with a set of elemental images for each case, reconstructed 3-D images at various reconstruction depths could be obtained using the optical display system shown in Fig. 3(c). Examples of reconstructed 3-D images at different reconstruction depths are shown in Fig. 5(a). The figure clearly shows that the 3-D image reconstructed at

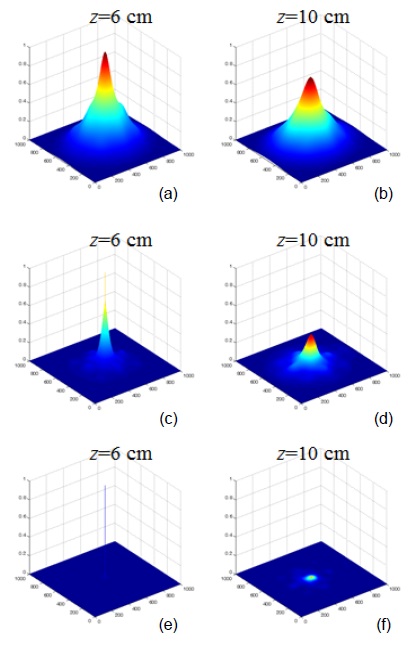

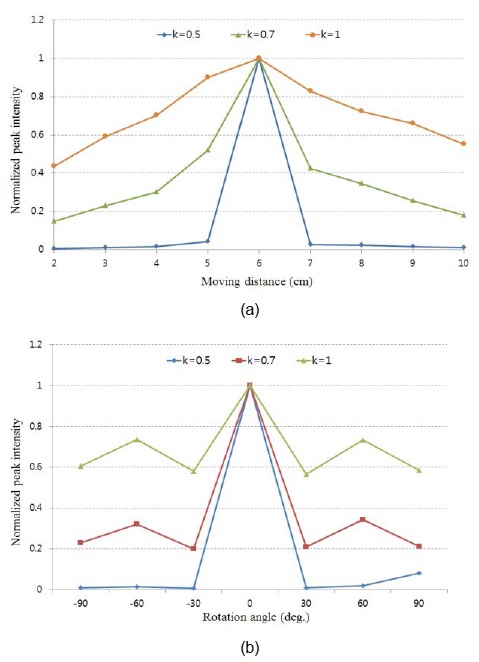

Finally, we present the cross-correlation between the reference image and the reconstructed 3-D images of the target object at various depths and rotation angles. We computed correlation using the

We repeated the analysis for the other reconstructions of the target object image shown in Fig. 5. Applying the correlation test to the reference and each reconstructed target image produced the results shown in Fig. 7. Thus, we conclude that our proposed method is robust when the reconstruction plane shifts along the z-axis and when the target object is rotated.

In this paper, we presented a 3-D automatic target recognition system based on integral imaging and using an all-optical structure to provide real-time processing.

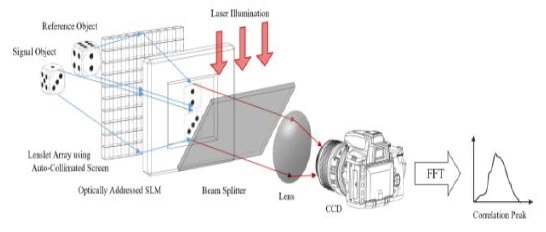

Fig. 8 shows a practical application of our method. The two main processing stages in Fig. 2 may be combined into one imaging system using an auto-collimating screen [16]. In this system, the reconstructed 3-D images are generated at light speed and displayed on a spatial light modulator (SLM) to convert these images into coherent images. Then, a joint transform correlator (JTC) could serve as a correlation filter. This design may produce a real-time, compact 3-D object recognition system.
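The role of the JTC can be illustrated with a small digital simulation. This is a minimal sketch of a classical joint transform correlator, not the optical system in Fig. 8: the reference and target are placed side by side in one input plane, the joint power spectrum is formed, and a second transform yields cross-correlation peaks displaced by the separation between the two inputs. The function name `jtc_output`, the plane size, and the placement offsets are hypothetical, and an inverse FFT stands in for the second optical Fourier transform.

```python
import numpy as np

def jtc_output(reference, target, plane_size=128, col_ref=20, separation=40):
    """Simulate the output plane of a classical joint transform correlator.

    Assumes reference and target have the same shape, do not overlap
    (separation >= width), and fit inside the plane.
    """
    h, w = reference.shape
    plane = np.zeros((plane_size, plane_size))
    row0 = (plane_size - h) // 2
    col_tgt = col_ref + separation
    plane[row0:row0 + h, col_ref:col_ref + w] = reference
    plane[row0:row0 + h, col_tgt:col_tgt + w] = target
    # Joint power spectrum: intensity of the Fourier transform of the plane.
    jps = np.abs(np.fft.fft2(plane)) ** 2
    # Second transform: zero-lag terms at the origin, cross terms at +/- separation.
    return np.abs(np.fft.ifft2(jps))

rng = np.random.default_rng(1)
patch = rng.standard_normal((16, 16))
out = jtc_output(patch, patch)
energy = np.sum(patch ** 2)
cross_peak = out[0, 40]   # cross-correlation term at the chosen separation
zero_lag = out[0, 0]      # autocorrelation terms of both inputs
```

When the target matches the reference, the cross term at the separation distance equals the energy of the patch, and the zero-lag term equals twice that value; a mismatched target would lower the cross term while leaving the zero-lag term dominated by the individual autocorrelations.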

In future work, we will investigate practical real-time systems that use our proposed method.