One of the advantages of computational integral imaging (CII) is that digital manipulation can be applied to the picked-up elemental images. Thanks to this digital manipulation, the 3-D image can be reconstructed at a reconstruction plane of any desired depth. Furthermore, the recorded elemental images can be digitally post-processed.

This property enables CII to reconstruct partially occluded 3-D objects for 3-D visualization and recognition [1-19]. Several occlusion removal methods have been studied in [15-19] to address the degraded resolution of the computationally reconstructed 3-D image caused by partial occlusion. In these studies, the occluding region is detected by computing a depth map: the region with relatively small depth values is regarded as the occluding region and is removed. However, even after the occluding object is removed, holes of missing data remain, which prevent the reconstructed images from reaching better visual quality. Therefore, in [20], a linear inpainting based computational integral imaging reconstruction (LI-CIIR) method was proposed, which fills in the data missing region caused by the occluding object with the linear inpainting technique [21], using information from neighboring pixels.

It was shown experimentally in [20] that the LI-CIIR performs well for objects with smooth surfaces. However, when the object has a textured surface, the LI-CIIR cannot recover the texture in the data missing region well. Furthermore, the LI-CIIR does not fully exploit the property that the same object region appears in several neighboring elemental images.

Therefore, in this paper, we propose a neighboring elemental image exemplar based inpainting (NEI-exemplar inpainting) method, which builds on the original exemplar based inpainting method [22]. Exemplar based inpainting is well suited to integral imaging, since the source exemplars can be taken not only from the elemental image of interest but also from neighboring elemental images. By using neighboring exemplars, textures can be recovered in the data missing region.

In addition, we propose an automatic occluding region extraction method, which segments the occluding region using the mutual constraint using depth estimation (MC-DE) [23] and the level set based bimodal segmentation [24]. The MC-DE computes a stable depth map based on the mutual constraint that exists between neighboring elemental images. The level set bimodal segmentation then automatically separates the object and background regions through a competition between the depth values obtained by the MC-DE. Experimental results show the validity of the proposed system: the quality of the 3-D image reconstructed from the elemental image array with the occlusion removed by the proposed method is almost identical to that of the image reconstructed from the elemental image array without occlusion.

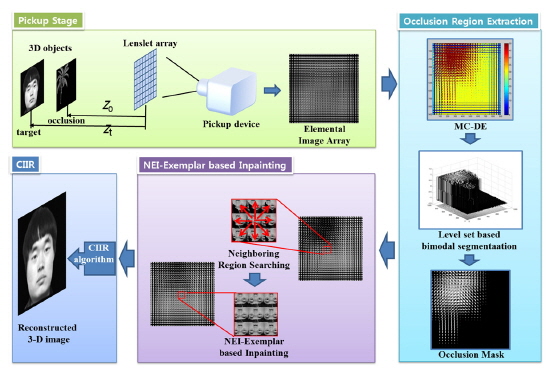

Figure 1 shows the overall diagram of the proposed system. First, the partially occluded 3-D object is picked up and recorded through a lenslet array to form the digital elemental image array. Then, the occluding region is segmented out by the mutual constraint using depth estimation (MC-DE) and the level set based bimodal segmentation. After that, we apply the proposed neighboring elemental image exemplar based inpainting (NEI-exemplar inpainting) to fill in the missing data inside the occluding region. Finally, the 3-D image is reconstructed using the CIIR. We explain the details of each step in the next sub-sections.

2.1. Occluding Region Segmentation

One of the major problems in occlusion artifact removal is the automatic extraction of the occluding region, and several methods have recently been proposed for it. One of the most successful computes the depth map based on the mutual constraints between the elemental images [23]. Hereafter, we call this method the mutual constraint using depth estimation (MC-DE).

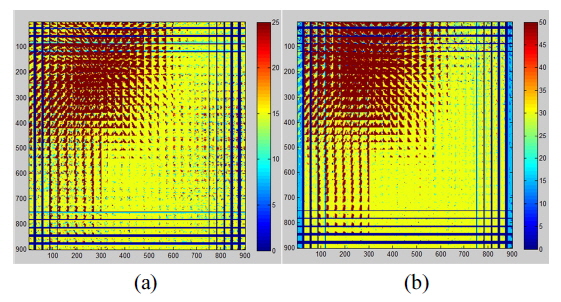

In the MC-DE method, it is assumed that the translation of the same object is constant between neighboring elemental images. Using the constraint between (2

where

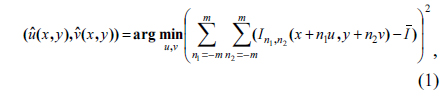

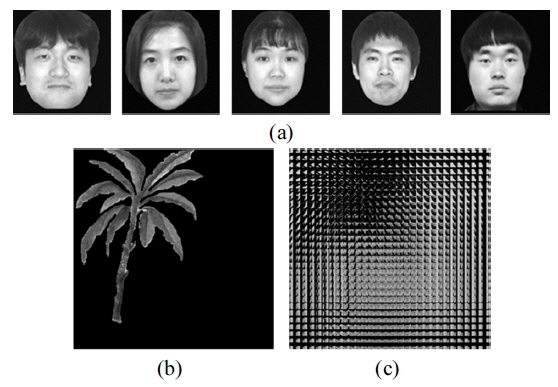

Figures 2(a) and 2(b) show the horizontal and vertical components of the disparity vector obtained by applying (1) to the elemental image array recorded with the optical setup shown in the pickup stage of Fig. 1. The elemental images contain two objects (the ‘tree’ and ‘face’ objects) at different depths. As Fig. 2 shows, the MC-DE method obtains the depth values of both the ‘tree’ region and the ‘face’ region well.
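To make the idea of a disparity shared between neighboring elemental images concrete, the following is a minimal NumPy sketch in the spirit of a mutual constraint; it is not the MC-DE algorithm of [23]. It assumes integer disparities and the convention that a scene point seen at local pixel (u, v) of elemental image (k, l) reappears at (u, v − d) in elemental image (k, l + 1) and at (u − d, v) in elemental image (k + 1, l). A single d must explain both neighbors at once. The names `disparity_map` and `box_sum` and the parameters `max_d` and `half` are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def box_sum(a, half):
    """Sum of `a` over a (2*half+1) x (2*half+1) window, with edge padding."""
    w = 2 * half + 1
    padded = np.pad(a, half, mode="edge")
    return sliding_window_view(padded, (w, w)).sum(axis=(-2, -1))


def disparity_map(eia, n_lens, k, l, max_d=5, half=2):
    """Hypothetical helper: per-pixel integer disparity for elemental image (k, l).

    One disparity d must match BOTH the right neighbour (k, l+1) and the lower
    neighbour (k+1, l), so k+1 and l+1 must be < n_lens and max_d < n.
    The window-aggregated absolute difference is minimised over d = 0..max_d.
    """
    n = eia.shape[0] // n_lens

    def ei(a, b):  # elemental image behind lenslet (a, b)
        return eia[a * n:(a + 1) * n, b * n:(b + 1) * n].astype(float)

    center, right, down = ei(k, l), ei(k, l + 1), ei(k + 1, l)
    costs = np.full((max_d + 1, n, n), np.inf)
    for d in range(max_d + 1):
        # Only pixels with u >= d and v >= d have both matches inside the images.
        c = center[d:, d:]
        diff = np.abs(c - right[d:, :n - d]) + np.abs(c - down[:n - d, d:])
        costs[d, d:, d:] = box_sum(diff, half)
    return costs.argmin(axis=0)
```

Because larger candidate disparities cannot be tested near the top and left borders in this sketch, estimates there are less reliable than in the interior.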

Thresholding the maps in Fig. 2 with a suitable value can separate the occluding region from the object region. However, it is not clear which threshold value separates the two regions well. Therefore, in this paper, we propose to use the level set based bimodal segmentation method, which we proposed in [24] to segment target regions automatically without a pre-defined threshold value. The bimodal segmentation performs a two-phase segmentation based on the competition between the brightness values in the image.

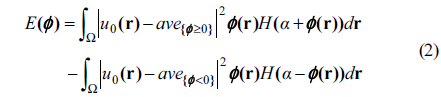

The level set based bimodal segmentation performs a two level segmentation by minimizing the following energy functional with respect to the level set function

Here,

For the problem of segmenting the object region based on the depth map, we set

where and are the components of the disparity vector calculated by (1), and r = (

The level set value at the r, i.e.,

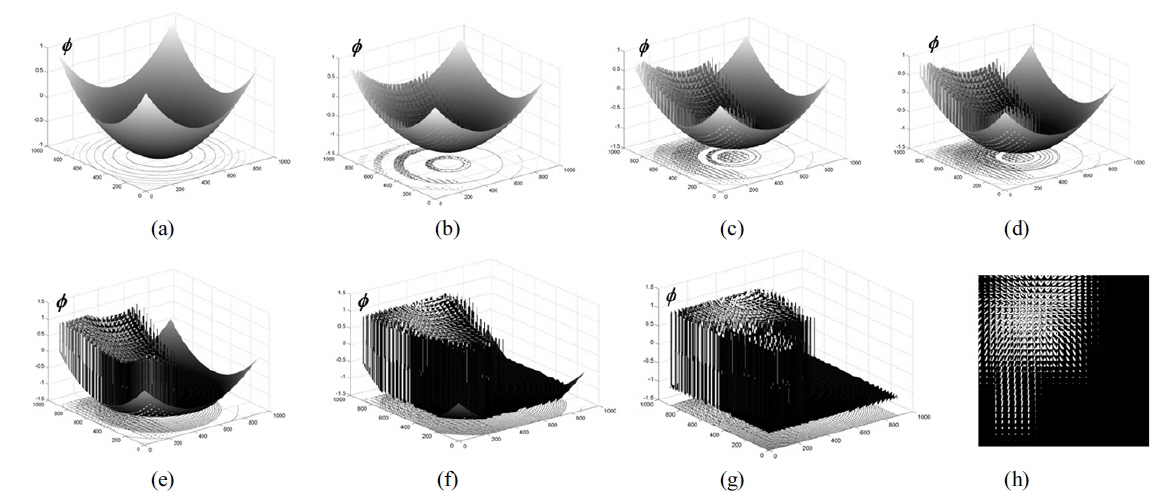

Figure 3 shows the application of the level set bimodal segmentation on the depth map

It can be seen in Fig. 3 that the level set function automatically converges to the state of 1 or -1, since

Thus, by collecting the pixels with positive level set values, we obtain a weight mask image that indicates the occluding region. The resulting weight mask image is shown in Fig. 3(h).
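As an illustration of threshold-free two-phase segmentation of a disparity map, the sketch below uses Chan-Vese-style region-fitting terms with the curvature (length) term omitted, so it behaves like a two-class clustering of the depth values. It is a stand-in under stated assumptions, not the level set formulation of [24]. Clipping φ to [−1, 1] mimics the convergence to ±1 described above. The function `bimodal_mask` and its parameters are hypothetical, and the occluding object is assumed to be the region with the larger mean disparity (that is, nearer to the lenslet array).

```python
import numpy as np


def bimodal_mask(depth, dt=0.5, n_iter=200):
    """Hypothetical helper: two-phase segmentation of a disparity map.

    phi is driven by Chan-Vese-style fitting terms (no curvature term) and
    clipped to [-1, 1], so it settles at +1 or -1. Returns a boolean mask that
    is True on the phase with the larger mean disparity.
    """
    span = depth.max() - depth.min()
    d = (depth - depth.min()) / (span if span > 0 else 1.0)  # scale to [0, 1]
    phi = 2.0 * d - 1.0  # initial level set taken from the scaled map
    for _ in range(n_iter):
        pos = phi > 0
        if pos.all() or not pos.any():
            break  # only one phase left; nothing to compete
        c1, c2 = d[pos].mean(), d[~pos].mean()  # mean value of each phase
        # Each pixel moves toward the phase whose mean it fits better.
        phi = np.clip(phi + dt * ((d - c2) ** 2 - (d - c1) ** 2), -1.0, 1.0)
    mask = phi > 0
    if mask.any() and not mask.all() and d[mask].mean() < d[~mask].mean():
        mask = ~mask  # make True mark the larger-disparity phase
    return mask
```

Adding a curvature term, as a full level set method would, additionally penalizes ragged boundaries; it is left out here only to keep the sketch short.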

2.2. Data Reconstruction with Neighboring Elemental Image Exemplar based Inpainting

Image inpainting is a technique that fills in missing values with reliable data obtained from the boundary of the missing region. In smoothing based inpainting, the data on the boundary are smoothly propagated into the missing region to fill it. In [20], we proposed an occluding region removal method based on this inpainting technique to fill in the missing data in the occluding region.
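For contrast with exemplar based inpainting, here is a minimal sketch of smoothing based inpainting: each missing pixel is repeatedly replaced by the average of its four neighbors (a Jacobi iteration for Laplace's equation), so the boundary values diffuse into the hole. This generic diffusion fill is assumed for illustration only and is not necessarily the linear inpainting of [21]; `diffusion_inpaint` is a hypothetical name.

```python
import numpy as np


def diffusion_inpaint(img, mask, n_iter=500):
    """Hypothetical helper: fill img[mask] by diffusing surrounding known values.

    Each iteration sets every missing pixel to the mean of its 4 neighbours
    (Jacobi update for Laplace's equation); known pixels never change.
    """
    out = img.astype(float).copy()
    out[mask] = out[~mask].mean()  # neutral starting guess inside the hole
    for _ in range(n_iter):
        p = np.pad(out, 1, mode="edge")
        avg = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]) / 4.0
        out[mask] = avg[mask]
    return out
```

A smooth ramp is recovered exactly, but applied to a textured region the same update produces a smooth interpolation, which is the limitation discussed next.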

However, if the original region contains rich texture, the texture cannot be recovered by smoothing based inpainting. To fill in the inpainting region with textured data, an exemplar based inpainting technique was proposed for ordinary images in [22].

Textured background regions generally look better with exemplar based inpainting than with smoothing based inpainting. However, there is no guarantee that the textures recovered by the original exemplar based inpainting technique match the original data, because what lay behind the occluding object is unknown. Integral imaging, in comparison, has the additional advantage that the same data appear across several neighboring elemental images: since each lenslet views the scene from a slightly different position, a region hidden in one elemental image may be visible in its neighbors.

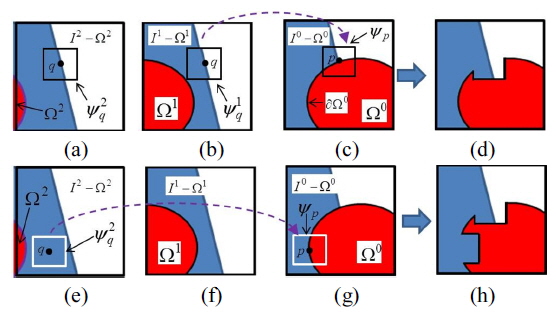

In this work, we modify the original exemplar based inpainting technique to recover the textured regions in the occluding region using the above-mentioned advantage. We call the modified technique the neighboring elemental image exemplar based inpainting (NEI-exemplar inpainting). Figure 4 shows the concept of the proposed NEI-exemplar inpainting technique. For consistency, we use notation similar to that of [22].

Figure 4(c) illustrates the elemental image (

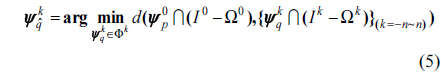

As can be seen in Fig. 4, unlike in the original exemplar based inpainting technique, the best-match sample is searched for in the neighboring elemental images rather than in the elemental image under consideration. In other words, the source region lies in the neighboring elemental images. Therefore, the problem is formulated as

where

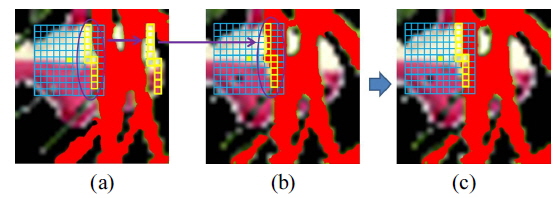

Figure 5 illustrates how the proposed method fills in the data missing region. Figure 5(b) is the elemental image under consideration, where the red region is the data missing region. An 11 × 11 window is placed on Fig. 5(b), and the window with the most similar content is searched for in the neighboring elemental image (Fig. 5(a)). Then, the non-occluded data in the matched window of Fig. 5(a) are copied to the positions corresponding to the occluding region in the window of Fig. 5(b). Figure 5(c) shows the result: part of the occluding region has been recovered by the NEI-exemplar inpainting. This procedure is applied iteratively to the elemental image in Fig. 5(b) until the whole occluding region is recovered.
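The fill loop described above can be sketched in Python as follows. This is a simplified, hypothetical illustration, not the authors' implementation. The fill front is processed in row-major order instead of the priority-based order of the original exemplar based method, only one neighboring elemental image is searched, similarity is the sum of squared differences over the known pixels of the window, and the neighbor is assumed to be unoccluded. The function name `fill_from_neighbor` and the parameter `half` (half = 5 gives the 11 × 11 window of Fig. 5) are hypothetical.

```python
import numpy as np


def fill_from_neighbor(target, mask, neighbor, half=5):
    """Hypothetical helper: fill target[mask] from best-matching windows of `neighbor`.

    target:   2-D array, the elemental image under consideration.
    mask:     boolean array, True where data are missing (occluding region).
    neighbor: 2-D array, a neighbouring elemental image assumed unoccluded.
    half:     window half-size; half=5 gives an 11 x 11 window.
    """
    out = target.astype(float).copy()
    missing = mask.copy()
    H, W = out.shape
    nH, nW = neighbor.shape
    while missing.any():
        # Fill front: missing pixels with at least one known 4-neighbour.
        known = np.pad(~missing, 1, constant_values=False)
        touches = known[:-2, 1:-1] | known[2:, 1:-1] | known[1:-1, :-2] | known[1:-1, 2:]
        front = np.argwhere(missing & touches)
        if len(front) == 0:
            raise ValueError("no known pixels to match against")
        r, c = front[0]  # simplified fill order: first front pixel, row-major
        r0, r1 = max(r - half, 0), min(r + half + 1, H)
        c0, c1 = max(c - half, 0), min(c + half + 1, W)
        patch = out[r0:r1, c0:c1]  # view into `out`
        hole = missing[r0:r1, c0:c1]
        valid = ~hole
        h, w = patch.shape
        best, best_cost = None, np.inf
        for i in range(nH - h + 1):  # exhaustive search over the neighbour
            for j in range(nW - w + 1):
                cand = neighbor[i:i + h, j:j + w]
                cost = np.sum((cand[valid] - patch[valid]) ** 2)  # SSD on known pixels
                if cost < best_cost:
                    best, best_cost = cand, cost
        if best is None:
            raise ValueError("neighbor is smaller than the window")
        patch[hole] = best[hole]  # copy the matched data into the hole
        missing[r0:r1, c0:c1] = False
    return out
```

When the neighbor shows the same scene content with a parallax shift, the best window matches the known pixels exactly and the copied pixels are the true hidden data, which is the advantage over searching within a single image.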

After the whole occluding region is recovered, the CIIR is applied to the recovered elemental image array to reconstruct the 3-D image.
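Conventional CIIR is usually described as back-projecting magnified elemental images through a virtual pinhole array. The sketch below is a simplified pixel-shift (shift-and-average) variant for intuition only and is not claimed to be the exact CIIR used in this work. It assumes each elemental image is an n × n block of the array and that, for the chosen plane, a scene point moves by `shift` pixels toward smaller indices between adjacent elemental images. Superimposing the elemental images at offsets that are multiples of `shift` and dividing by the overlap count brings that plane into focus. `reconstruct_plane` is a hypothetical name.

```python
import numpy as np


def reconstruct_plane(eia, n_lens, shift):
    """Hypothetical helper: shift-and-average reconstruction of one depth plane.

    eia:   (n_lens*n) x (n_lens*n) elemental image array.
    shift: integer pixel disparity between adjacent elemental images for the
           plane of interest (assumed known, e.g. from the pickup geometry).
    """
    n = eia.shape[0] // n_lens
    assert 0 <= shift <= n, "larger shifts would leave uncovered gaps"
    size = n + (n_lens - 1) * shift
    acc = np.zeros((size, size))  # summed intensities
    cnt = np.zeros((size, size))  # number of elemental images covering each pixel
    for k in range(n_lens):
        for l in range(n_lens):
            ei = eia[k * n:(k + 1) * n, l * n:(l + 1) * n]
            rows = slice(k * shift, k * shift + n)
            cols = slice(l * shift, l * shift + n)
            acc[rows, cols] += ei
            cnt[rows, cols] += 1
    return acc / cnt
```

With a `shift` that does not match an object's disparity, contributions from different scene points are averaged together, so off-plane content (including any occluder pixels left in the elemental images) spreads out as blur or noise in the reconstructed plane.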

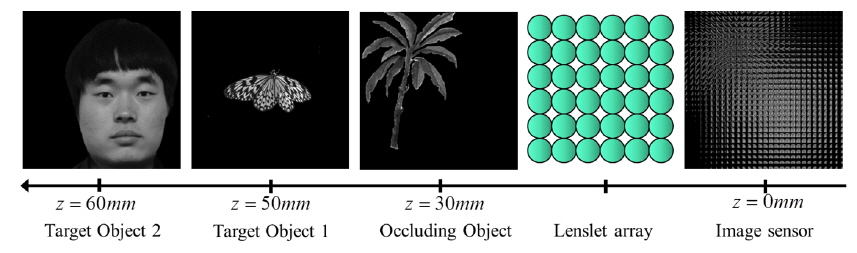

The pickup setup used in our experiments is shown in the ‘pickup stage’ diagram of Fig. 1. The target 3-D objects are the ‘faces’, which are occluded by a ‘tree’. The target and the occluding objects are located

The lenslet array used in the experiments has 30 × 30 lenslets, each with a diameter of 5 mm. The elemental image behind each lenslet has 30 × 30 pixels, so the elemental image array has 900 × 900 pixels in total (30 lenslets × 30 pixels per side). Using this lenslet array, the partially occluded ‘face’ object was recorded, resulting in the 900 × 900 pixel elemental image array shown in Fig. 6(c).
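Because the array is a regular 30 × 30 grid of 30 × 30-pixel elemental images, it can be split into an indexable stack with a single reshape. The helper below is a hypothetical convenience, assuming the array is stored as a square 2-D grayscale NumPy array in which elemental image (k, l) occupies rows k·n to (k+1)·n − 1 and columns l·n to (l+1)·n − 1.

```python
import numpy as np


def split_eia(eia, n_lens):
    """Hypothetical helper: return an (n_lens, n_lens, n, n) array where [k, l]
    is the elemental image behind lenslet (k, l)."""
    size = eia.shape[0]
    assert eia.shape == (size, size) and size % n_lens == 0
    n = size // n_lens  # pixels per elemental image side, e.g. 900 // 30 = 30
    # (k*n + i, l*n + j) -> (k, i, l, j) -> (k, l, i, j)
    return eia.reshape(n_lens, n, n_lens, n).transpose(0, 2, 1, 3)
```

For example, `split_eia(eia, 30)[2, 5]` is the 30 × 30 block at rows 60 to 89 and columns 150 to 179.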

First, the MC-DE method is applied to the recorded elemental images to obtain the depth map, as shown in Fig. 2 for the fifth face image in Fig. 6(a). Then, the level set bimodal segmentation is applied to the depth map of Fig. 2 to obtain the mask map. Here, we let the parameter

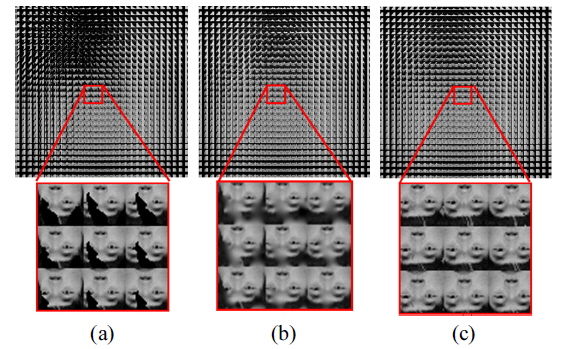

Finally, we applied the proposed NEI-exemplar inpainting method, using the mask map to indicate the data missing region. Figure 7 compares the results of the linear inpainting method and the proposed method. Figure 7(a) shows the elemental image array in which the occluding object has been removed but the occluding region is left as a data missing region. Figures 7(b) and 7(c) show the elemental image arrays in which the data missing region is filled in by the linear inpainting and the proposed NEI-exemplar inpainting method, respectively. The linear inpainting method fills in the missing data but introduces blurring artifacts. In comparison, the proposed NEI-exemplar inpainting method reconstructs the textural data in the missing region better than the linear inpainting method.

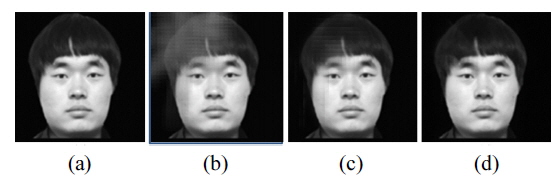

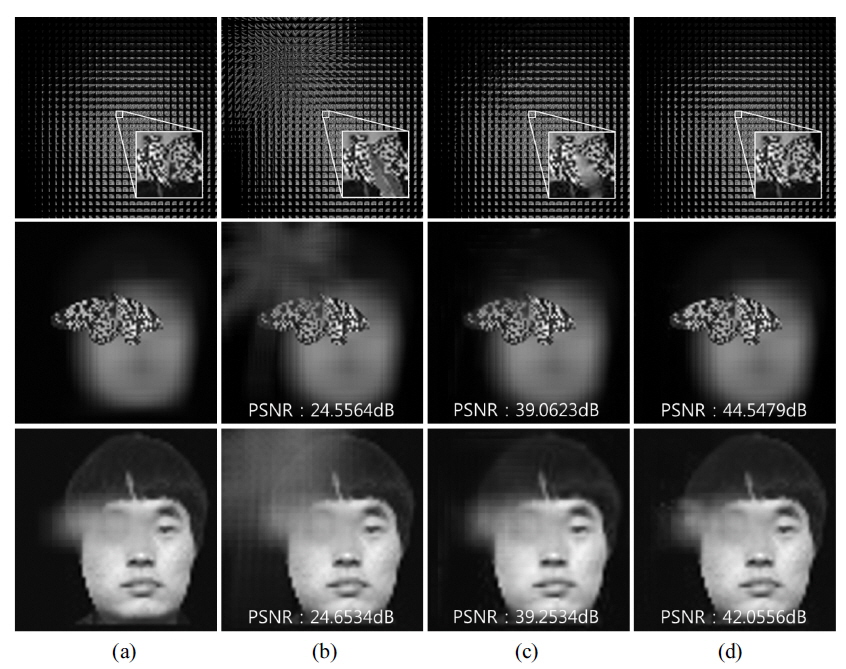

Next, we reconstructed the 3-D plane images using the CIIR method. Figure 8 shows the 3-D plane images of the fifth ‘face’ image reconstructed by the different methods. Figure 8(b) shows that the occlusion produces severe noise in the reconstructed image. The LI-CIIR relieves this problem to a large extent, as can be seen in Fig. 8(c). However, some brightness errors remain in the regions where the target object was occluded, owing to the blurring artifacts introduced into the elemental images by the linear inpainting. In comparison, the proposed method further improves the visual quality of the reconstructed image, as can be seen in Fig. 8(d).

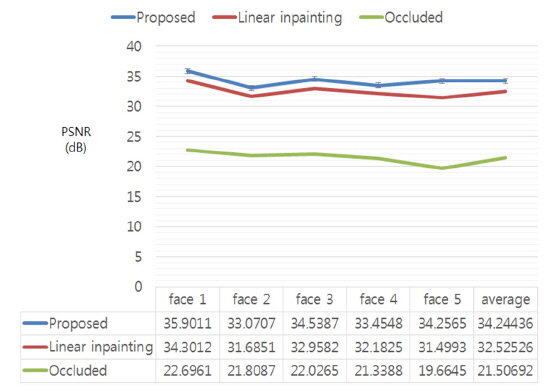

We measured the peak signal-to-noise ratio (PSNR) of all the reconstructed plane images for a quantitative comparison of the methods. Figure 9 shows the PSNR results for the five face images used in the experiment. Both the LI-CIIR and the proposed NEI-exemplar inpainting based CIIR show large PSNR improvements over the conventional CIIR method, and the proposed method achieves a higher PSNR improvement than the LI-CIIR, which demonstrates its superiority.
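For reference, PSNR for 8-bit images is 10·log10(255²/MSE), where MSE is the mean squared error against a reference image. A minimal sketch follows; which image serves as the reference is an assumption here (for example, the plane reconstructed from an elemental image array without occlusion), and `psnr` is a hypothetical helper.

```python
import numpy as np


def psnr(reference, test, peak=255.0):
    """Hypothetical helper: peak signal-to-noise ratio in dB between equal-shape images."""
    ref = np.asarray(reference, dtype=float)
    tst = np.asarray(test, dtype=float)
    mse = np.mean((ref - tst) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))
```

Because PSNR is logarithmic in the MSE, halving the MSE raises the PSNR by about 3 dB.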

Finally, we performed an experiment on an elemental image array obtained with the pickup setup shown in Fig. 10. There are two target objects at different depths, where ‘target object 1’ lies at

Again, we removed the occluding ‘tree’ object from the elemental image array and reconstructed the 3-D image at planes with depths of

This is because ‘target object 1’ has a textured surface, which the proposed method recovers much better than the LI-CIIR.

We proposed a method that can recover not only smooth data but also textured data in the data missing region caused by partial occlusion. To this end, the proposed method fills in the data missing region with exemplars obtained from neighboring elemental images. It has been shown experimentally that the proposed method can recover textural regions in the elemental images, whereas conventional CIIR methods, including the LI-CIIR method, cannot. This leads to better 3-D image reconstruction than with conventional methods. The proposed method is expected to be useful in applications where the target object must be clearly visualized and recognized despite partial occlusion.