Object tracking is an important task for many applications, such as robot navigation, surveillance, automotive safety, and video content indexing. Based on trajectories obtained through tracking, more advanced behaviour analysis can be applied. For instance, a pedestrian’s trajectory can be analysed to warn a driver if the trajectories of the vehicle and of the pedestrian are potentially intersecting.

For multiple object tracking, tracking-by-detection methods are the most popular algorithms. A detector is used in each image frame to obtain candidate objects. Then, with a data-association procedure, all the candidates are matched to the existing trajectories as known up to the previous frame. Any unmatched candidate starts a new trajectory. Since there is no perfect detector that detects all objects without any false positives and false negatives, sometimes objects are missed (i.e., they appear in the image but are not detected), or background windows are incorrectly detected as being objects. Such false-positive or false-negative detections increase the difficulty of tracking.

Occlusion by other objects or the background is one of the main reasons for detection to fail and it also increases the difficulty of tracking (e.g., identity switch). Some algorithms [1,2] propose tracking objects in the 2D image plane. The occlusion problem is handled either using part detectors and tracking detected body parts, or adopting instance-specific classifiers to improve performance of data assignment. However, tracking in the 2D image plane increases the ambiguity of data association. A tall person nearby, and a small person far away, for example, may appear very close to each other in the image, and, possibly, in some frames the tall person occludes the small person. But they are actually several meters away from each other. Thus, often, and also in this paper, stereo information is adopted to improve the tracking performance [3-5], and multiple pedestrians are tracked in 3D coordinates.

Tracking objects with irregular movements in 3D space is a challenging task because speed and direction are entirely unknown. In this paper, we demonstrate the application of an unscented Kalman filter (UKF), which can also handle nonlinear (in fact, fully irregular) trajectories in 3D space. For the original paper on the UKF, see [6]. Similar work is proposed in [5]. However, instead of modelling the motion of the vehicle and the pedestrians separately, we directly model the relative motion between them, and no ground plane is assumed, so that objects moving with 6 degrees of freedom can be tracked properly. Different types of models are tested and compared on both simulated and real sequences.

Multiple object tracking has attracted a great deal of attention recently in computer vision research. Today, an update of the review [7] from 2006 should also include work such as in [2-4,8-13].

Kalman filters (KF) have been extensively adopted to deal with tracking tasks. A KF is a recursive Bayesian filter that first uses motion information to predict the possible position, and then fuses the observation (detection) with the predicted position. A linear KF is used for tracking (e.g., [7]) when the movement can be approximated by linear models. Obviously, a linear model is not suitable for most cases. The extended Kalman filter (EKF) was designed [14] for handling a nonlinear model by linearizing functions using a Taylor expansion. For example, an EKF has been used for simultaneous localization and mapping [15], and for pedestrian tracking [16]. A particle filter was used to handle the task in [17]. Performance similar to an EKF is reported in [3].

The UKF can handle a nonlinear model by using the unscented transform to estimate the first and second order moments of sigma points, which represent the distribution of a predicted state and predicted observations, and it appears that the UKF does this better than the EKF [18]. Thus, in this paper, a UKF is used for tracking multiple, irregularly moving objects in 3D space, which is a highly nonlinear problem.

The unscented transform (UT) is the core component that enables the UKF to handle nonlinear models. Let

and called

The UT works by sampling

be the state covariance matrix (i.e., describing dependencies between components of a state χ ). Formally,

where λ is a positive real, used as a scaling parameter. These sigma vectors can be passed through a nonlinear function (e.g., f or h) one by one, thus defining transformed (i.e., new) sigma vectors such as

Means x

are obtained as follows; take h for example:

with constant weights
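For orientation, the following is a minimal sketch of the basic unscented transform in its commonly used form. It is not a reproduction of the equations of this paper. The 2n+1 sigma vectors, the weights λ/(n+λ) and 1/(2(n+λ)), the Cholesky factor as matrix square root, and all function names are assumptions made for illustration.

```python
import math


def cholesky(a):
    """Lower-triangular factor low with low * low^T = a (a symmetric, positive definite)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def sigma_points(mean, cov, lam):
    """Return 2n+1 sigma vectors and their weights (assumed basic UT form)."""
    n = len(mean)
    scaled = [[(n + lam) * c for c in row] for row in cov]
    root = cholesky(scaled)  # columns of root are the sigma offsets
    points = [list(mean)]
    for sign in (1.0, -1.0):
        for k in range(n):
            points.append([mean[r] + sign * root[r][k] for r in range(n)])
    weights = [lam / (n + lam)] + [1.0 / (2.0 * (n + lam))] * (2 * n)
    return points, weights


def unscented_transform(mean, cov, lam, func):
    """Propagate (mean, cov) through func and return the weighted mean and covariance."""
    points, weights = sigma_points(mean, cov, lam)
    mapped = [func(p) for p in points]  # transformed sigma vectors
    m = len(mapped[0])
    new_mean = [sum(w * y[i] for w, y in zip(weights, mapped)) for i in range(m)]
    new_cov = [
        [
            sum(w * (y[i] - new_mean[i]) * (y[j] - new_mean[j])
                for w, y in zip(weights, mapped))
            for j in range(m)
        ]
        for i in range(m)
    ]
    return new_mean, new_cov
```

A quick sanity check for such a sketch is a linear function: for y = A x + b the transform should return A x̄ + b and A P Aᵀ up to rounding, independent of λ.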

The UKF proceeds as follows. First, we initialize the state x=x0 and the state covariance P(xx) = P0(xx). For the augmented vectors, let

where Q denotes the process-noise covariance matrix. Details about Q are given in Section IV. For

The process update is as follows:

We update the sigma vectors using

and update the measurement covariance matrix as follows:

where R is the assumed measurement noise covariance, depending on the observation model selected. Details are given in Section IV.

Altogether, the UKF is defined by

Following tracking-by-detection methods, which are popular for solving multiple-object tracking tasks, a detector is applied in each frame to generate object candidates. One UKF is adopted for each object; thus a group of detected pedestrians defines a family of UKFs that are processed simultaneously. The predicted state of a UKF is used for data association; when an observation of the tracked object is available in the current frame, the corresponding UKF updates its predicted state with it.

Tracking-by-detection methods rely on evaluating rectangular regions of interest; we call them object boxes if they are positively identified as containing an object of interest. For pedestrian detection, we adopt the popular histogram of oriented gradients (HOG) feature method and a support vector machine (SVM) classifier, originally introduced in [19]. HOG features describe the human profile by an oriented gradient histogram. An SVM classifier is able to handle high-dimensional and nonlinear features (such as HOG features). It projects sample features into a high-dimensional space, and then finds a hyperplane to separate two classes. Instead of using a sliding window, regions of interest (i.e., inputs to the classifier) are selected by analysing calculated stereo information (depth and disparity maps), as proposed in [20].

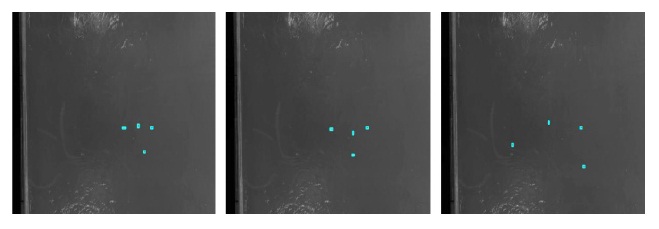

Fig. 1 shows several detection results for a pedestrian sequence. Cyan dots denote the centres of boxes that are recognized as pedestrians, and red rectangles denote the final detection results. As can be seen, the object boxes may contain background, be shifted relative to the object, or miss pedestrians altogether.

For the detection of


B. UKF-based Object Tracking

As the number of objects in a scene is unknown, the state dimensionality would expand significantly if we tracked all pedestrians in one UKF; tracking would then slow down dramatically when the scene is crowded with many detected objects. Thus, we decided to use one UKF for each detected object.

Choosing a proper model is important. In this subsection we offer three models for possible selection:

1) The Two 3D Models

In the 3DVT model, the object is tracked in 3D world coordinates. Its 3D position (

a) Process model: We assume constant velocity between adjacent frames, with Gaussian distributed noise acceleration na ~ N(0, Σna). The diagonal elements in Σna are set to be equal and denoted by

Thus,

where Δ

b) Observation model: An observation consists of the position (i0, j0) (say, the centroid of the detected object box in the left camera), disparity d of the detected object, and velocity (vox, voy, voz) in 3D coordinates. The usual pinhole camera projection model is used to map 3D points onto the image plane,

where

For the disparity

As it is difficult to obtain high-quality scene flow as required for 3DVT, 3DT simplifies the 3DVT model by excluding the scene flow from the observation, and has the same process model as 3DVT. In this case,
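As an illustration of a 3DT-style observation (image position plus disparity, without scene flow), the sketch below uses the textbook pinhole and rectified-stereo relations. The parameter names `focal_px`, `baseline_m`, and `principal_point`, the axis convention, and the helper `back_project` are assumptions for this example and are not taken from the paper's own equations.

```python
def observe_3dt(position, focal_px, baseline_m, principal_point):
    """Map a 3D point (X, Y, Z) in left-camera coordinates to (i, j, d).

    Assumed convention: i = f*X/Z + ic, j = f*Y/Z + jc, d = f*b/Z.
    """
    x, y, z = position
    if z <= 0.0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    ic, jc = principal_point
    i = focal_px * x / z + ic
    j = focal_px * y / z + jc
    d = focal_px * baseline_m / z  # disparity shrinks with depth
    return (i, j, d)


def back_project(observation, focal_px, baseline_m, principal_point):
    """Invert observe_3dt: recover (X, Y, Z) from (i, j, d), for d > 0."""
    i, j, d = observation
    if d <= 0.0:
        raise ValueError("disparity must be positive")
    ic, jc = principal_point
    z = focal_px * baseline_m / d
    x = (i - ic) * z / focal_px
    y = (j - jc) * z / focal_px
    return (x, y, z)
```

Because the disparity is inversely proportional to depth, this observation function is nonlinear in the state, which is one reason a UKF (rather than a linear KF) is a natural fit here.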

2) The 2D Model

If only monocular recording is available, the object is tracked in the 2D image plane only. The state x=(

a) Process model: The process model is the same as for the 3D models. We assume a constant velocity between subsequent frames with a Gaussian noise distribution for acceleration na:
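A constant-velocity step driven by acceleration noise can be sketched as follows. This is the standard discretisation (position advanced by v Δt + ½ a Δt², velocity by a Δt) and is given here as an assumption, since the paper's own update equation is not reproduced. The function names and the isotropic standard deviation `sigma` are hypothetical. The same code covers the 2D and the 3D case because it works per coordinate.

```python
import random


def cv_step(position, velocity, dt, accel=None):
    """Advance a constant-velocity state by one time step dt.

    accel is the (noise) acceleration acting during the step; zeros if omitted.
    """
    if accel is None:
        accel = [0.0] * len(position)
    new_position = [p + v * dt + 0.5 * a * dt * dt
                    for p, v, a in zip(position, velocity, accel)]
    new_velocity = [v + a * dt for v, a in zip(velocity, accel)]
    return new_position, new_velocity


def sample_accel(dim, sigma, rng):
    """Draw zero-mean Gaussian acceleration noise with equal variance per axis."""
    return [rng.gauss(0.0, sigma) for _ in range(dim)]


if __name__ == "__main__":
    rng = random.Random(0)
    pos, vel = [0.0, 0.0], [1.0, 2.0]
    for _ in range(3):
        pos, vel = cv_step(pos, vel, 1.0 / 30.0, sample_accel(2, 0.5, rng))
    print(pos, vel)
```

A larger `sigma` lets the filter follow abrupt changes of direction more quickly, at the price of noisier estimates.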

C. Data Association

As each object is tracked independently, data association by matching candidates to existing trajectories becomes important. If no match is found then we decide to initialize a new tracker.

Since object movements are continuous, the estimated velocity in the UKF can be used as a cue to localize the search area in order to find the matching object. For each trajectory, the possible location (i.e., (

One candidate might be matched with several trajectories if the Euclidean distance is below

No object appearance description is used here for assigning an object to a trajectory. In general, the inclusion of appearance representation (e.g., a colour histogram, or an instance-specific shape model) improves the performance. However, this is out of the scope of this paper, where we are focusing on the combination of different data association methods.
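A distance-gated association step of this kind might look like the sketch below. The gate on Euclidean distance and the start of new trajectories from unmatched candidates follow the description above. The rule used to resolve conflicts (greedy, closest pair first) is an assumption, as are the function name `associate` and the parameter `max_dist`.

```python
import math


def associate(predicted, candidates, max_dist):
    """Match detections to trajectories by gated Euclidean distance.

    predicted:  dict mapping track id -> predicted position (tuple)
    candidates: list of detected positions (tuples of the same dimension)
    Returns (matches, unmatched): matches maps track id -> candidate index,
    unmatched lists candidate indices that would start new trajectories.
    """
    pairs = []
    for track_id, pred in predicted.items():
        for idx, cand in enumerate(candidates):
            dist = math.dist(pred, cand)
            if dist < max_dist:  # only pairs inside the gate are considered
                pairs.append((dist, track_id, idx))

    # Assumed conflict rule: accept the closest pairs first.
    pairs.sort(key=lambda item: item[0])
    matches = {}
    used = set()
    for dist, track_id, idx in pairs:
        if track_id in matches or idx in used:
            continue
        matches[track_id] = idx
        used.add(idx)

    unmatched = [idx for idx in range(len(candidates)) if idx not in used]
    return matches, unmatched
```

A trajectory without a match in the current frame simply keeps its prediction, which is how the tracker bridges short detection gaps.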

In this section, first, our three models (3DVT, 3DT, and 2DT) are tested in a simulated environment with different parameter sets. Second, our multiple-object tracking method is tested on real video sequences where (3D example) pedestrians are walking in inner-city scenes, or (2D example) larvae are moving on a flat culture dish.

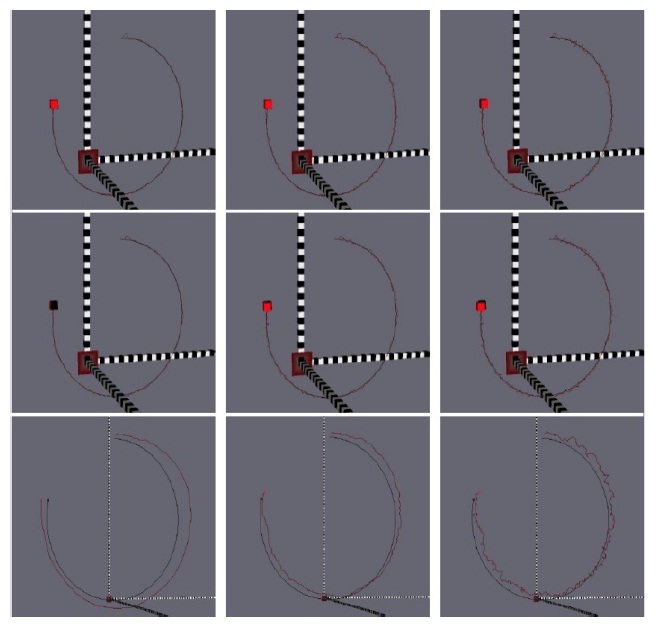

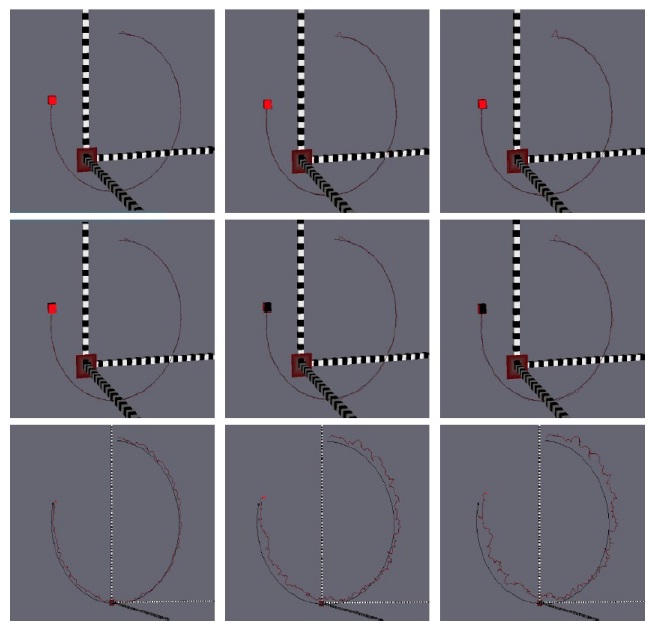

The three models defined in Section IV are tested in a simulation environment in OpenGL (SGI, Fremont, CA, USA). A cube is moving on a circular path around a 3D point with constant speed, as shown in Figs. 3 and 4. Acceleration noise na with different covariance (e.g., σ2

Fig. 3 demonstrates the effect of σ2

A larger σ2

Fig. 4 shows results for our models for different covariance values σ2


B. Multiple Object Tracking in Real Data

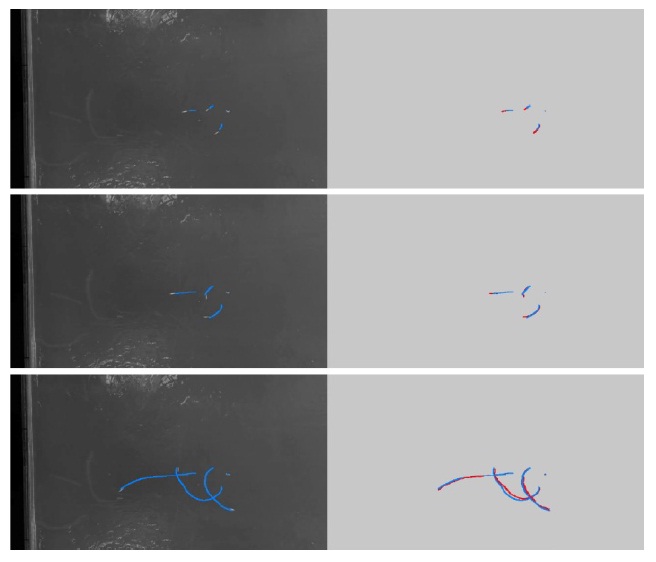

In this section we report on the performance of UKF-supported tracking for multiple larvae using the 2DT model, and for multiple pedestrians in traffic scenes using the 3DT model. The larvae and pedestrian sequences are recorded at 30 and 15 frames per second, respectively.

Results for larvae tracking are shown in Fig. 5. As the velocity in the model is initialized to (0,0), the UKF estimation is “slower” than the real speed of the larvae in the first 30 frames. The speed of convergence can be improved by increasing σ2

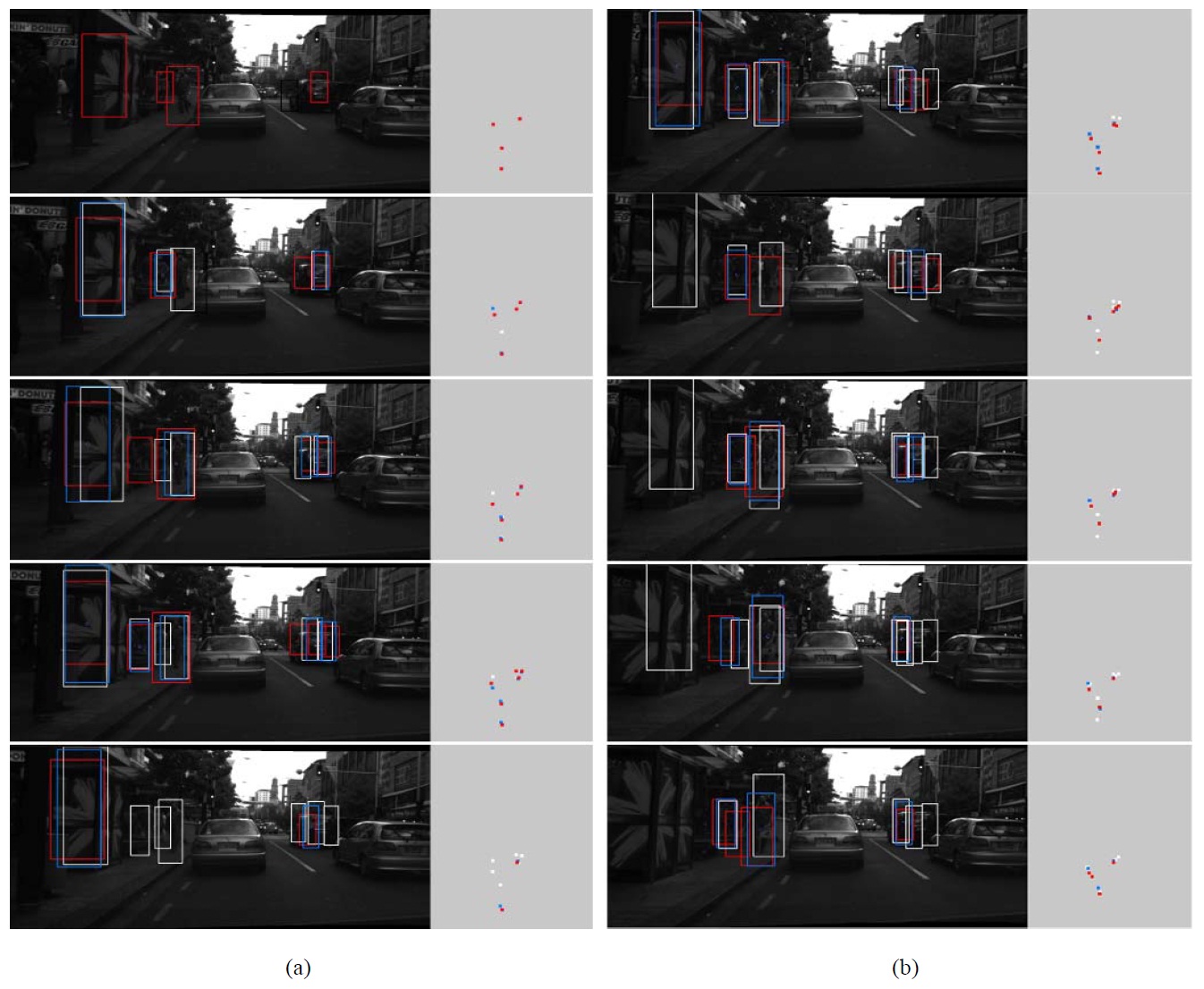

The results for pedestrian tracking are shown in Fig. 6. Objects are missed or shifted from time to time due to the cluttered background (e.g., the car in the traffic scene detected as a pedestrian), illumination variations leaving some pedestrians undetected, or internal variations between objects (i.e., unstable detections). Our experiments verified that UKF predictions are able to follow irregularly moving pedestrians when detection fails for a few frames, and can even correct unstable detections.

The second frame in Fig. 6 shows that the undetected pedestrian is predicted correctly in the white object box and is successfully matched to a detected position in the third frame. The last frame in Fig. 6 demonstrates that displaced detections are corrected by the UKF. Using only the defined distance rule for data association appears to be insufficient, especially for the given detection results. A small threshold may lead to a mismatch (i.e., the detection fails to satisfy the rule), and a large threshold may lead to an identity switch (i.e., a pedestrian is matched to another pedestrian).

Assigning one UKF to each detected (moving) object simplifies the design and implementation of UKF prediction of 2D or 3D motion. Experiments demonstrate the robustness of the chosen approach. This tracker only generates short-term tracks when detection is not reliable; long-term tracking should be possible by also introducing dynamic programming.

For evaluating the performance in real-world (either 2D or 3D) applications, more extensive tests need to be undertaken, especially for the design and evaluation of quantitative performance measures. For example, the measures discussed in [22] for evaluating visual odometry techniques might also be of relevance for the tracking case.